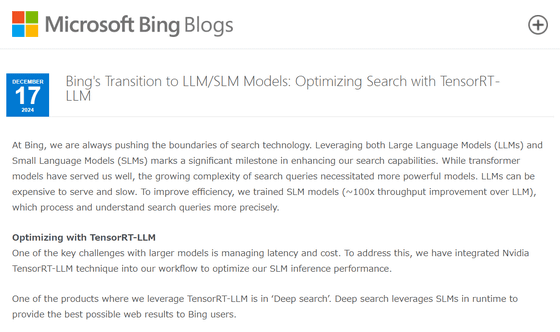

Microsoft's search engine Bing transitions from Transformer to a combination of LLM and SLM & announces integration of TensorRT-LLM

Microsoft has been using 'Transformer', the machine learning model developed by Google, in its search engine Bing. However, Microsoft has announced that, because Transformer has reached its limits, Bing will move to a combination of large language models (LLM) and small language models (SLM). Microsoft also announced that it will optimize search by integrating NVIDIA's 'TensorRT-LLM' into its workflow.

Bing's Transition to LLM/SLM Models: Optimizing Search with TensorRT-LLM

https://blogs.bing.com/search-quality-insights/December-2024/Bing-s-Transition-to-LLM-SLM-Models-Optimizing-Search-with-TensorRT-LLM

Transformer is a neural network that learns context, and therefore meaning, by tracking the relationships within sequential data, such as the words in a sentence. Microsoft's Bing has also used Transformer, but as search queries have become more complex, more powerful models have become necessary.

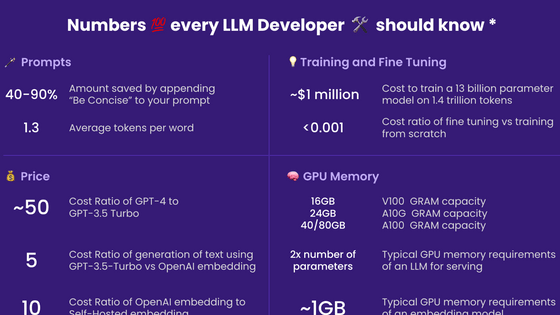

Therefore, Microsoft announced that it would move from Transformer to a combination of LLM and SLM. Microsoft explained that 'LLM tends to be expensive to provide and slow, so we combined it with SLM, which can more accurately process and understand search queries, to improve efficiency.'

In addition, to address the main challenges of LLM, namely latency and cost, Microsoft announced that it has optimized the inference performance of SLM by integrating NVIDIA's TensorRT-LLM into its workflow. One of the features that uses TensorRT-LLM is Bing's 'Deep Search'. Deep Search uses the large language model GPT-4 to expand the search queries that users submit to Bing and to provide several answers related to the question. Deep Search uses SLM to provide Bing users with the best possible web search results.

The Deep Search experience involves several steps, such as understanding the intent of the user's query and ensuring the relevance and quality of web search results. Because running SLM across these steps takes time, inference needs to be accelerated so that search results appear as quickly as possible. According to Microsoft, TensorRT-LLM reduces the model's inference time without sacrificing the quality of the results, which lowers the latency of the end-to-end experience.

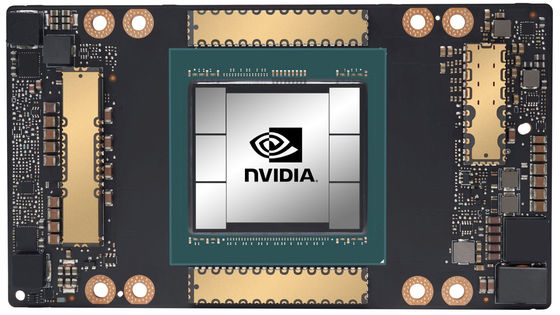

With TensorRT-LLM, the models are hosted and run on NVIDIA A100 GPUs. Before optimization with TensorRT-LLM, the original Transformer model had a 95th percentile latency of 4.76 seconds per batch, with each batch consisting of 20 queries, and a throughput of 4.2 queries per second per instance. After integrating TensorRT-LLM, the 95th percentile latency fell to 3.03 seconds per batch and the throughput per instance rose to 6.6 queries per second. According to Microsoft, this not only delivered faster search results and a better user experience, but also reduced the operational costs of running LLM by 57%.
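To put the reported figures in proportion, the short Python sketch below computes the relative change in latency and throughput. The helper `relative_change` and the per-query amortization (batch latency divided by batch size) are illustrative assumptions, not calculations taken from Microsoft's post. The article does not say how the 57% cost figure was derived, so the sketch makes no attempt to reproduce it.

```python
# Figures quoted in the article (95th percentile latency per batch of 20 queries,
# throughput in queries per second per instance).
BATCH_SIZE = 20
P95_BEFORE_S, P95_AFTER_S = 4.76, 3.03
QPS_BEFORE, QPS_AFTER = 4.2, 6.6


def relative_change(old, new):
    """Return the change from old to new as a fraction of old (hypothetical helper)."""
    return (new - old) / old


latency_change = relative_change(P95_BEFORE_S, P95_AFTER_S)
throughput_change = relative_change(QPS_BEFORE, QPS_AFTER)

# Assumption: amortize the batch latency evenly over the queries in the batch.
per_query_before_s = P95_BEFORE_S / BATCH_SIZE
per_query_after_s = P95_AFTER_S / BATCH_SIZE

print(f"p95 batch latency change: {latency_change:+.1%}")
print(f"throughput change:        {throughput_change:+.1%}")
print(f"amortized p95 per query:  {per_query_before_s:.4f} s -> {per_query_after_s:.4f} s")
```

Under these assumptions the batch latency drops by roughly 36% and the per-instance throughput rises by roughly 57%.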

TensorRT-LLM employs a method called SmoothQuant to perform inference using INT8 for both activations and weights while maintaining the accuracy of the network.
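The idea behind SmoothQuant can be illustrated with a toy example. The sketch below is a minimal pure-Python illustration of the general technique, not TensorRT-LLM's implementation: it rescales each input channel so that activation outliers are shifted into the weights, then quantizes both to INT8. All function names, the toy matrices, the per-tensor symmetric quantizer, and the migration strength `alpha=0.5` are assumptions chosen for illustration.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def smooth_scales(x, w, alpha=0.5):
    """Per-input-channel factor s_j = max|X_j|^alpha / max|W_j|^(1 - alpha).

    Assumes every channel has a nonzero maximum (true for the toy data below).
    """
    scales = []
    for j in range(len(w)):
        act_max = max(abs(row[j]) for row in x)
        weight_max = max(abs(v) for v in w[j])
        scales.append(act_max ** alpha / weight_max ** (1 - alpha))
    return scales


def smooth(x, w, scales):
    """Divide activation columns and multiply weight rows by the same factors."""
    x_s = [[v / s for v, s in zip(row, scales)] for row in x]
    w_s = [[v * s for v in row] for row, s in zip(w, scales)]
    return x_s, w_s


def quantize_int8(m):
    """Symmetric per-tensor INT8 quantization: integer matrix plus step size."""
    step = max(abs(v) for row in m for v in row) / 127
    q = [[max(-127, min(127, round(v / step))) for v in row] for row in m]
    return q, step


def int8_matmul(x, w):
    """Quantize both operands, multiply in integers, then rescale to floats."""
    xq, sx = quantize_int8(x)
    wq, sw = quantize_int8(w)
    return [[v * sx * sw for v in row] for row in matmul(xq, wq)]


def max_abs_error(a, b):
    """Largest element-wise absolute difference between two matrices."""
    return max(abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb))


# Toy data: activations X (tokens x channels) with an outlier in channel 1,
# and weights W (channels x outputs).
X = [[0.1, 20.0], [-0.2, -30.0]]
W = [[0.5, -0.3], [0.02, 0.01]]

exact = matmul(X, W)
X_s, W_s = smooth(X, W, smooth_scales(X, W))

plain_error = max_abs_error(int8_matmul(X, W), exact)
smooth_error = max_abs_error(int8_matmul(X_s, W_s), exact)
print("INT8 error without smoothing:", plain_error)
print("INT8 error with smoothing:   ", smooth_error)
```

Because each activation column is divided by the same factor that multiplies the matching weight row, the product of the smoothed matrices equals the original product before quantization. On this toy input the smoothed version loses less precision, since the outlier channel no longer dominates the activation quantization range.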

The benefits of migrating to TensorRT-LLM include:

Faster search results: With optimized inference, users enjoy faster response times, making their search experience more seamless and efficient.

Improved accuracy: The enhanced capabilities of SLM provide more accurate, contextual search results, helping users find the information they need more effectively.

Cost-effective: Reducing the costs of hosting and running LLM allows Microsoft to continue investing in further innovation and improvements, ensuring Bing remains at the forefront of search technology.

Looking ahead, Microsoft said, 'We continue to innovate and improve our search technology while focusing on providing our users with the best experience possible. The transition to LLM and SLM and the integration of TensorRT-LLM are just the beginning. We're excited about the possibilities for the future and look forward to sharing further progress with you.'

in Software, Posted by logu_ii