OpenAI reports that malicious actors are trying to disrupt elections using ChatGPT

On Wednesday, October 9, 2024, OpenAI released a report analyzing AI-enabled influence operations and misuse. In it, OpenAI stated that 'malicious actors are using ChatGPT to try to influence elections and political activities.'

Influence and cyber operations: an update OpenAI

(PDF file)

OpenAI says more cyber actors using its platform to disrupt elections

https://www.cnbc.com/2024/10/09/openai-says-more-cyber-actors-using-its-platform-to-disrupt-elections.html

OpenAI points out that while AI can help security defenders, attackers mainly use it at an intermediate stage. This is after they have acquired basic tools such as internet access, email addresses, and social media accounts, but before they distribute finished products such as malware or social media posts at scale.

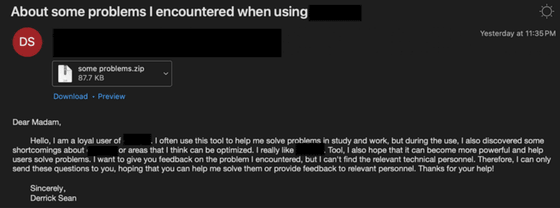

For example, a China-based threat actor called 'SweetSpecter' attempted a spear-phishing attack targeting OpenAI employees. Posing as a ChatGPT user, SweetSpecter sent malicious attachments. It also tried to use AI for reconnaissance, vulnerability research, and scripting assistance. The attack was blocked by OpenAI's security systems.

Additionally, in August 2024, an Iranian threat actor known as 'STORM-0817' was attempting to use AI to develop malware targeting Android devices and to create social media scraping tools.

According to OpenAI, there is no evidence that AI has significantly improved attackers' capabilities or enabled attacks with significant impact. OpenAI says this is consistent with assessments that GPT-4o and similar tools have not significantly improved the capabilities of those seeking to exploit security vulnerabilities.

The report also touches on the misuse of AI in relation to elections and politics.

For example, a US-based threat actor known as 'A2Z' posted political comments on Twitter and Facebook. It used ChatGPT to generate those comments, focusing particularly on comments praising Azerbaijan.

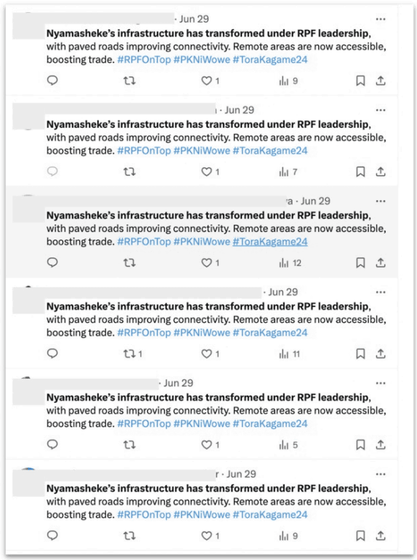

In addition, in July 2024, in the run-up to the Rwandan elections, a group was generating a large number of posts emphasizing the benefits of

However, OpenAI assessed that their impact was limited, even though many of these activities spanned multiple platforms. In many cases, the generated content received little attention and low engagement. In every case, OpenAI said it was able to address the issue within 24 hours.

Many elections are taking place around the world in the second half of 2024. The US presidential election is scheduled for November 2024. In Japan, the House of Representatives was dissolved on October 9, 2024, and a general election will be held on October 27. OpenAI announced measures to prevent the misuse of AI in elections in January 2024.

OpenAI announces measures to prevent AI from being misused in the 2024 election rush - GIGAZINE

In addition, in February 2024, many companies, including OpenAI, Microsoft, Google, Adobe, X, and Amazon, announced an agreement to combat election interference through deepfakes.

Adobe, Amazon, Google, IBM, Meta, Microsoft, OpenAI, TikTok, X and 11 other companies sign voluntary agreement to fight deep fakes that intentionally deceive voters in elections - GIGAZINE

OpenAI said AI companies can offer insights that complement those of upstream providers, such as email and internet service providers, and downstream platforms, such as social media. However, it noted that this depends on having the right detection and investigation capabilities.

in Note, Posted by log1i_yk