Adobe, Amazon, Google, IBM, Meta, Microsoft, OpenAI, TikTok, X and 11 other companies sign voluntary agreement to combat deepfakes that intentionally deceive voters in elections

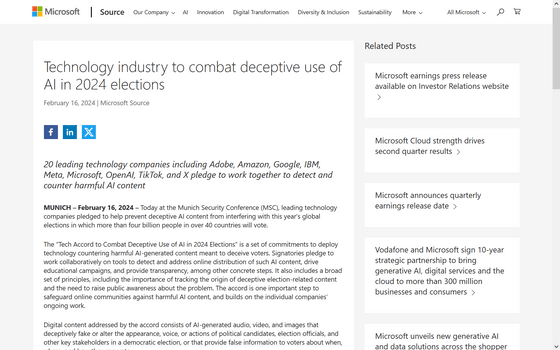

On February 16, 2024, the "Tech Accord to Combat Deceptive Use of AI in 2024 Elections" was announced, with Adobe and many other companies as signatories. Its purpose is to counter content that misrepresents the appearance, voice, or actions of politicians, candidates, election officials, and others involved in elections.

Technology industry to combat deceptive use of AI in 2024 elections - Stories

Meeting the moment: combating AI deepfakes in elections through today's new tech accord - Microsoft On the Issues

Want Gemini and ChatGPT to Write Political Campaigns? Just Gaslight Them

https://gizmodo.com/want-gemini-chatgpt-write-political-campaigns-1851265399

The main purpose of the agreement is to prevent the spread of "deepfakes," content in which AI is used to alter parts of video, images, or audio. Twenty major technology companies have signed on, including Adobe, Amazon, Microsoft, and OpenAI, and the agreement calls on them to work together across company boundaries.

In addition to each company's own efforts against false information, the specific measures against deepfakes include developing tools to detect and address AI-generated content, running educational campaigns, and being transparent about policies. The agreement proposes that the companies cooperate on these efforts.

Under the agreement, the signatories pledged to address deceptive content through the following eight commitments:

・Develop and implement technology, including open-source tools where appropriate, to reduce the risks associated with deceptive AI election content.

・Assess the models covered by the agreement to understand the risks they may pose with regard to deceptive AI election content.

・Seek to detect the distribution of such content on their own platforms.

・Seek to appropriately address such content when it is detected on their platforms.

・Foster cross-industry resilience to deceptive AI election content.

・Provide transparency to the public about how each company addresses such content.

・Continue to engage with a diverse range of global civil society organizations and academic experts.

・Support efforts that foster public awareness, media literacy, and the resilience of society as a whole.

"Deceptive AI election content," as used in the commitments above, means persuasive AI-generated audio, video, or images that deceptively fake or alter the appearance, voice, or actions of politicians, candidates, election officials, or other key stakeholders in an election, or that give voters false information about when, where, or how they can legally vote. The main purpose of the agreement is to prevent the spread of such content.

In joining the agreement, Microsoft said, "This is an important step toward protecting online communities from harmful AI content, and it builds on the continued efforts of each company." Ambassador Christoph Heusgen, Chairman of the Munich Security Conference, where the agreement was brokered, said that the Tech Accord to Combat Deceptive Use of AI in 2024 Elections is a crucial step in advancing election integrity, increasing societal resilience, and creating trustworthy technology practices.

On the other hand, it has been pointed out that the AI chat services "Gemini" and "ChatGPT," provided by Google and OpenAI, both of which signed the agreement, can easily be made to generate deceptive AI election content. OpenAI and other companies have policies that prohibit using their services for political campaigning aimed at specific groups, but according to Gizmodo, it was possible to get the services to generate multiple fake campaign speeches imitating specific politicians.

in Software, Posted by log1p_kr