Researchers discover GAZEploit, an attack that steals passwords by tracking the gaze of Apple Vision Pro users

Apple's first spatial computing device,

[2409.08122] GAZEploit: Remote Keystroke Inference Attack by Gaze Estimation from Avatar Views in VR/MR Devices

https://arxiv.org/abs/2409.08122

GAZEploit

https://sites.google.com/view/Gazeploit/

Apple Patches Vision Pro Vulnerability to Prevent GAZEploit Attacks - SecurityWeek

https://www.securityweek.com/apple-patches-vision-pro-vulnerability-to-prevent-gazeploit-attacks/

You can get a good idea of what kind of device the 'Apple Vision Pro' is by looking at the unboxing article below.

Apple's first high-end MR headset 'Apple Vision Pro' for about 600,000 yen has finally been released in Japan, so I opened it and set it up - GIGAZINE

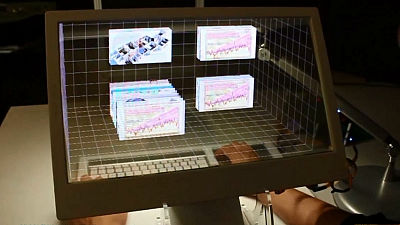

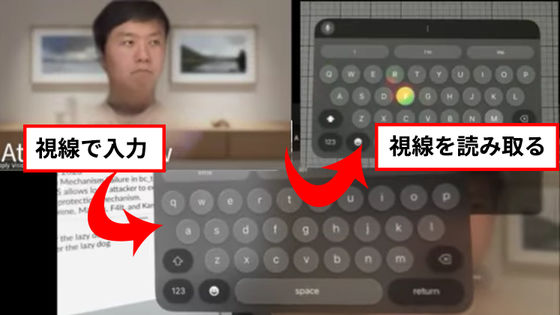

One of the features of Apple Vision Pro is 'eye tracking', which uses your gaze in place of a cursor. For example, you can answer a prompt by looking at 'Yes' on the left or 'No' on the right, or type on a keyboard displayed in front of you just by looking at the keys. Eye-tracking input not only improves the user experience; when entering a password, it is also less likely than 'hand gestures' or 'voice recognition' to reveal what you typed to people around you or to the person you are video calling.

However, a research team from the University of Florida and Texas Tech University announced that they had found a vulnerability in the eye tracking function of Apple Vision Pro. Apple Vision Pro has a feature called 'Persona' that displays a realistic avatar based on the user's face during video calls and meetings. According to the researchers, the Persona reproduces the eye movements of a user who is typing by gaze, so an observer can capture and analyze those movements and reconstruct the keys that were entered. The researchers call the attack (exploit) that uses this vulnerability to steal typed characters 'GAZEploit'.

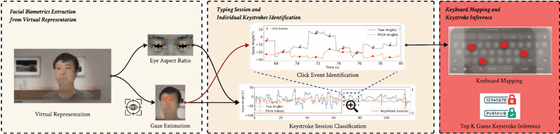

Below is a demo video of GAZEploit. The figure in the upper left is the user's avatar, or Persona, as seen by others in the virtual space. The user types by moving their eyes to look at characters on a keyboard (center) that is visible only to them. The attack analyzes the Persona's eye movements and displays the inferred input in the upper right.

GAZEploit, unblurred version of demo video - YouTube

GAZEploit uses two biometrics that can be extracted from recordings of a Persona: eye aspect ratio and gaze estimation. It analyzes these signals to distinguish typing from other activities on Apple Vision Pro, such as watching videos or playing games. It then maps the extracted gaze movements onto a virtual keyboard to determine likely keystrokes. The researchers found that while typing, people tend to move their gaze in a more periodic pattern and blink less frequently.
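To make the two signals more concrete, here is a minimal Python sketch. It is an illustration, not the researchers' implementation. The eye aspect ratio uses the commonly cited six-landmark formula, which is assumed here because the article does not say how the ratio was computed. The keyboard layout, the coordinates in `KEY_CENTERS`, and the functions `nearest_key` and `rank_keys` are all hypothetical.

```python
import math

def eye_aspect_ratio(p1, p2, p3, p4, p5, p6):
    """Eye aspect ratio from six (x, y) eye landmarks.

    p1 and p4 are the eye corners, p2 and p3 lie on the upper lid,
    p6 and p5 on the lower lid. The value drops toward 0 during a blink.
    """
    vertical = math.dist(p2, p6) + math.dist(p3, p5)
    horizontal = math.dist(p1, p4)
    return vertical / (2.0 * horizontal)

# Hypothetical key centers on a normalized keyboard plane:
# one unit per key, with each row shifted like a QWERTY layout.
ROWS = ["qwertyuiop", "asdfghjkl", "zxcvbnm"]
ROW_OFFSETS = [0.0, 0.5, 1.5]
KEY_CENTERS = {
    ch: (col + ROW_OFFSETS[r], float(r))
    for r, row in enumerate(ROWS)
    for col, ch in enumerate(row)
}

def nearest_key(gaze_xy):
    """Return the key whose center is closest to the estimated gaze point."""
    return min(KEY_CENTERS, key=lambda ch: math.dist(KEY_CENTERS[ch], gaze_xy))

def rank_keys(gaze_xy, k=3):
    """Return the k closest keys, since gaze estimates are noisy."""
    ranked = sorted(KEY_CENTERS, key=lambda ch: math.dist(KEY_CENTERS[ch], gaze_xy))
    return ranked[:k]
```

Ranking several candidate keys rather than committing to one reflects the fact that a gaze estimate taken from an avatar is approximate, so an attacker would likely consider a handful of guesses per keystroke.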

The researchers evaluated GAZEploit on data from 30 participants, and it showed high accuracy in detecting when users typed messages, passwords, URLs, email addresses, and passcodes. 'We developed algorithms that calculate eye gaze stability and set thresholds to classify eye movements. Evaluation on our dataset showed that the precision of identifying keystrokes within a typing session was 85.9% and the recall was 96.8%,' the researchers said.
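The idea of classifying eye movements by thresholding gaze stability can be sketched as follows. This is a simplified illustration under stated assumptions: the stability measure (standard deviation over a sliding window), the window size, and the threshold value are hypothetical, and the article does not give the researchers' actual algorithm or parameters. The `precision_recall` helper shows how detected keystrokes can be scored against ground truth.

```python
import statistics

def is_fixation(window, threshold=0.05):
    """True if the gaze samples in the window stay within a small spread.

    window is a list of (x, y) gaze points; threshold is a hypothetical value.
    """
    xs = [p[0] for p in window]
    ys = [p[1] for p in window]
    return statistics.pstdev(xs) < threshold and statistics.pstdev(ys) < threshold

def detect_keystrokes(samples, window_size=5, threshold=0.05):
    """Return start indices of non-overlapping stable windows (candidate keystrokes)."""
    hits = []
    i = 0
    while i + window_size <= len(samples):
        if is_fixation(samples[i:i + window_size], threshold):
            hits.append(i)
            i += window_size  # skip past this fixation
        else:
            i += 1
    return hits

def precision_recall(predicted, actual):
    """Precision: share of detections that are real. Recall: share of real events detected."""
    predicted, actual = set(predicted), set(actual)
    true_positives = len(predicted & actual)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(actual) if actual else 0.0
    return precision, recall

# Example: the gaze rests on one key, sweeps across, then rests on another.
samples = [(0.0, 0.0)] * 5 + [(0.5, 0.0), (1.0, 0.0), (1.5, 0.0)] + [(2.0, 1.0)] * 5
detected = detect_keystrokes(samples)
```

A high recall with a somewhat lower precision means the detector misses few real keystrokes but also reports some fixations that were not keystrokes.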

Apple has acknowledged GAZEploit as a vulnerability that 'inputs to the virtual keyboard may be inferred from the persona,' and announced in

Cyber Security News, which distributes security-related news, said, 'While Apple quickly patched these vulnerabilities, it is a reminder that new attack vectors may continue to emerge as VR and AR capabilities expand. These studies underscore the need for strong privacy safeguards as VR technology becomes more widespread. As immersive systems collect increasingly rich behavioral data, balancing user experience with data protection will be important for widespread adoption.'
