How is Google's machine learning architecture 'Transformer' used to recommend music to users?

The machine learning architecture 'Transformer', announced by Google researchers in 2017, plays an important role in building large language models such as GPT-4 and Llama. Google says that Transformer can also be used to build 'a more accurate music recommendation system based on user behavior'.

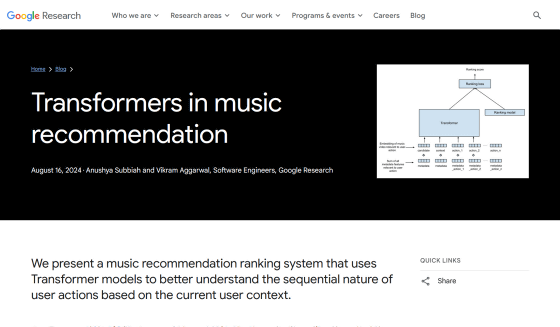

Transformers in music recommendation

https://research.google/blog/transformers-in-music-recommendation/

Transformer generates text by predicting the word that follows a given word or sentence, in five steps: 'Tokenization,' 'Embedding,' 'Positional encoding,' 'Transformer block,' and 'Softmax.' This mechanism can be applied not only to text generation but also to AI systems built for many other purposes.
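
As a rough illustration of those five steps, here is a toy, pure-Python sketch that turns a short phrase into a probability distribution over a four-word vocabulary. It is not how production models are built: the whitespace tokenizer, the tiny vocabulary, the random untrained embedding table, the single attention head with no learned projections, and the reuse of the embedding table as the output layer are all simplifying assumptions made for readability.

```python
import math
import random

def tokenize(text, vocab):
    # Step 1 (Tokenization): toy whitespace tokenizer mapping words to integer ids
    return [vocab[w] for w in text.lower().split()]

def embed(ids, table):
    # Step 2 (Embedding): look up one vector per token id
    return [list(table[i]) for i in ids]

def positional_encoding(pos, d):
    # Step 3 (Positional encoding): sinusoidal scheme from the 2017 Transformer paper
    return [math.sin(pos / 10000 ** (i / d)) if i % 2 == 0
            else math.cos(pos / 10000 ** ((i - 1) / d))
            for i in range(d)]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def softmax(xs):
    # Subtract the max for numerical stability before exponentiating
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def transformer_block(xs):
    # Step 4 (Transformer block), heavily simplified: one causal self-attention head
    # with Q = K = V = x (no learned projections, feed-forward layer, or layer norm)
    d = len(xs[0])
    out = []
    for t, q in enumerate(xs):
        scores = [dot(q, xs[j]) / math.sqrt(d) for j in range(t + 1)]  # only positions <= t
        weights = softmax(scores)
        context = [sum(weights[j] * xs[j][k] for j in range(t + 1)) for k in range(d)]
        out.append([a + b for a, b in zip(q, context)])  # residual connection
    return out

def next_token_probs(text, vocab, table):
    d = len(table[0])
    xs = [[e + p for e, p in zip(vec, positional_encoding(pos, d))]
          for pos, vec in enumerate(embed(tokenize(text, vocab), table))]
    hidden = transformer_block(xs)
    # Step 5 (Softmax): score every vocabulary entry against the last position
    # (reusing the embedding table as the output layer is an assumption)
    logits = [dot(hidden[-1], row) for row in table]
    return softmax(logits)

vocab = {"i": 0, "like": 1, "slow": 2, "songs": 3}
rng = random.Random(0)
table = [[rng.uniform(-1, 1) for _ in range(8)] for _ in vocab]  # random, untrained weights
probs = next_token_probs("I like slow", vocab, table)
```

Because the weights are random, `probs` is a valid distribution that sums to 1 but carries no meaning; training is what makes the highest-probability word a sensible continuation.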

How does the machine learning model 'Transformer' used in ChatGPT generate natural sentences? - GIGAZINE

Music subscription services such as YouTube Music and Apple Music offer huge catalogs, but it is difficult for any single user to listen through them and find the songs that suit their tastes. This is where a recommendation system that matches each user's tastes becomes important. If the service can recommend songs that users like, users will listen to music for longer and use the platform more.

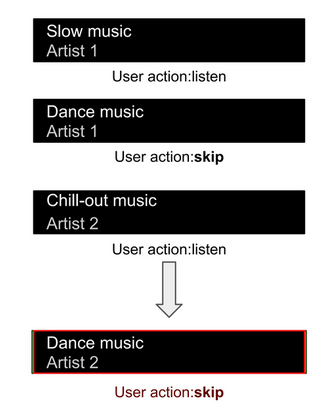

In general, recommendation systems for music subscription services weigh the user's actions on recommended songs in addition to the user's past listening history. If a user skips a recommended song, they are unlikely to enjoy similar songs. If, on the other hand, they listen to the song and add it to their favorites, or go on to play other songs by the same artist, they are likely to enjoy similar songs.
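
A simple way to picture this kind of feedback signal is to give each action type a fixed weight and sum the weights per artist. The sketch below does exactly that; the action names, the weights, and the per-artist aggregation are hypothetical choices for illustration, not YouTube Music's actual values or method. Note that every action counts the same regardless of the listener's situation, which is the limitation the rest of the article addresses.

```python
from collections import defaultdict

# Hypothetical fixed weights per action type (illustrative values only)
ACTION_WEIGHTS = {"skip": -1.0, "listen": 0.5, "favorite": 1.5, "artist_follow_up": 1.0}

def artist_affinity(events):
    # events: list of (artist, action) pairs observed on recommended songs
    score = defaultdict(float)
    for artist, action in events:
        score[artist] += ACTION_WEIGHTS[action]
    return dict(score)

def rank_candidates(candidates, affinity):
    # candidates: list of (song_id, artist); artists with no history get a neutral 0.0
    return sorted(candidates, key=lambda c: affinity.get(c[1], 0.0), reverse=True)

events = [("A", "skip"), ("A", "skip"),
          ("B", "listen"), ("B", "favorite"), ("B", "artist_follow_up")]
affinity = artist_affinity(events)  # {"A": -2.0, "B": 3.0}
ranked = rank_candidates([("s1", "A"), ("s2", "B"), ("s3", "C")], affinity)
```

Here `ranked` puts the song by artist B first, the unknown artist C in the middle, and the repeatedly skipped artist A last.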

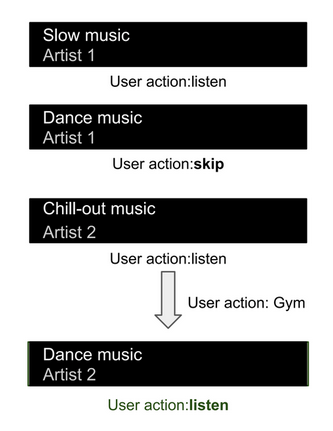

However, users' preferences are not fixed; the songs they want to hear can change with the situation. For example, a user who generally prefers slow-tempo songs is likely to skip an up-tempo song that is recommended to them.

If that same user is working out at the gym, though, they may want more upbeat, energetic music than usual.

A typical user performs hundreds of actions, some rarely and others frequently, so the length and makeup of each user's action history vary widely. To build a good recommendation system, the model needs to handle inputs of varying size flexibly.
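
A common way Transformer-based models cope with histories of different lengths is to pad every sequence to a fixed length and keep a mask that marks which positions hold real actions, so attention can ignore the padding. The sketch below assumes integer action ids with 0 reserved for padding and keeps only the most recent `max_len` actions; the function names and these conventions are hypothetical, not taken from Google's system.

```python
import math

PAD = 0  # hypothetical id reserved for padding

def pad_and_mask(histories, max_len):
    # Keep the most recent max_len actions and left-pad shorter histories
    batch, mask = [], []
    for h in histories:
        recent = h[-max_len:]
        pad = max_len - len(recent)
        batch.append([PAD] * pad + recent)
        mask.append([False] * pad + [True] * len(recent))
    return batch, mask

def masked_softmax(scores, mask):
    # Padded positions receive zero attention weight
    # (assumes each history contains at least one real action)
    m = max(s for s, keep in zip(scores, mask) if keep)
    exps = [math.exp(s - m) if keep else 0.0 for s, keep in zip(scores, mask)]
    total = sum(exps)
    return [e / total for e in exps]

batch, mask = pad_and_mask([[3, 1, 2], [5], [1, 2, 3, 4, 5, 6]], 4)
# batch == [[0, 3, 1, 2], [0, 0, 0, 5], [3, 4, 5, 6]]
```

With the mask in place, a user with one action and a user with hundreds can be processed by the same model without the padding influencing the result.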

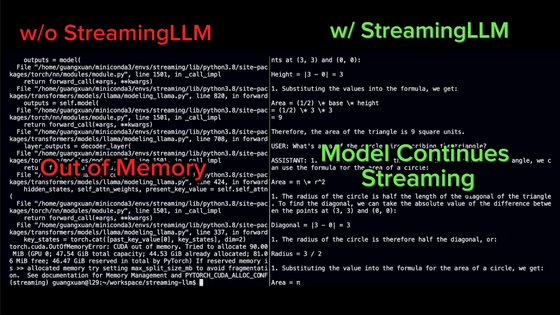

Existing models could collect data on user actions and context, rank songs that match the user's needs based on that data, and filter the songs recommended to the user. However, because it was difficult to identify which actions were relevant to the user's current needs, the context of those actions was not well reflected in the recommendations.

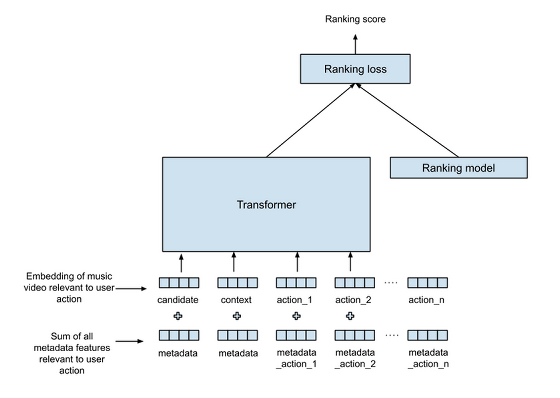

Google says Transformer is well suited to processing this kind of diverse input. Transformers perform well on translation and classification tasks even when the input text is ambiguous, because they weigh each part of the input according to its context. Applied to music recommendation, the same mechanism can judge how important each user action is in the current situation and adjust its weight accordingly.
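
The core of that mechanism is attention: a query vector describing the current context scores each past action, and a softmax turns those scores into weights. In the sketch below, the two-dimensional [energy, calmness] features, the hand-picked action vectors, and the "gym" and "home" context vectors are all hypothetical; a real model learns these representations rather than using hand-written ones.

```python
import math

def softmax(xs):
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    # Scaled dot-product attention: the context (query) decides how much each
    # past action (key/value) contributes to the pooled user representation
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    weights = softmax(scores)
    pooled = [sum(w * v[i] for w, v in zip(weights, values)) for i in range(len(values[0]))]
    return weights, pooled

# Hypothetical 2-d action features: [energy, calmness]
history = [
    [1.0, 0.0],  # played an up-tempo track to the end
    [0.0, 1.0],  # added a slow ballad to favorites
]
gym = [3.0, 0.0]   # hypothetical context vector: working out
home = [0.0, 3.0]  # hypothetical context vector: relaxing at home

w_gym, user_at_gym = attend(gym, history, history)
w_home, user_at_home = attend(home, history, history)
```

The same two actions receive different weights depending on the context: at the gym the up-tempo action dominates, while at home the ballad does, so the resulting user representation shifts with the situation.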

Google already uses Transformer in the YouTube Music recommendation system, combining it with the existing ranking system to produce rankings that make the best use of both user actions and listening history.
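
The article does not say how the two are combined internally. One simple way to picture it is to add a context-aware affinity term from the Transformer's user representation to the existing ranker's score; the linear blend, the `alpha` weight, and the example vectors below are purely hypothetical.

```python
def combined_score(base_score, user_vec, song_vec, alpha=0.5):
    # Hypothetical blend: existing ranker's score plus a weighted dot-product
    # affinity between the Transformer-derived user vector and the song embedding
    affinity = sum(u * s for u, s in zip(user_vec, song_vec))
    return base_score + alpha * affinity

def rerank(candidates, user_vec, alpha=0.5):
    # candidates: list of (song_id, base_score, song_vec)
    return sorted(candidates,
                  key=lambda c: combined_score(c[1], user_vec, c[2], alpha),
                  reverse=True)

user_vec = [1.0, 0.0]  # e.g. an "energetic" user representation at the gym
candidates = [("ballad", 0.9, [0.0, 1.0]), ("workout", 0.7, [1.0, 0.0])]
ranking = rerank(candidates, user_vec)
```

Although the existing ranker alone prefers the ballad (0.9 vs. 0.7), the context-aware term lifts the workout track to 1.2, so it ends up first.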

Offline analysis and live experiments conducted by Google showed that adding Transformer to the music recommendation system significantly improved the ranking model's performance, reducing skip rates and increasing the time users spend listening to music.

Google plans to apply this technique to other recommendation systems, such as search models, and to incorporate other factors into its music recommendation system to improve its performance.

in Software, Web Service, Posted by log1h_ik