Meta announces open source AI tool 'AudioCraft' for generating music and sound effects from text

AI technology has advanced rapidly in recent years, and AI systems that generate highly accurate text and images have appeared. Now Meta, which operates Facebook, Instagram, and other services, has announced 'AudioCraft', an open source AI tool that generates music and sound effects from text.

AudioCraft: A simple one-stop shop for audio modeling

Meta releases AudioCraft AI tool to create music from text | Reuters

https://jp.reuters.com/article/meta-platforms-ai/meta-releases-audiocraft-ai-tool-to-create-music-from-text-idUSL4N39J3PA

Meta releases open source AI audio tools, AudioCraft | Ars Technica

https://arstechnica.com/information-technology/2023/08/open-source-audiocraft-can-make-dogs-bark-and-symphonies-soar-from-text-using-ai/

On its official blog, Meta points out that AI built on large language models has made great progress in recent years in text generation, machine translation, voice dialogue agents, and image and video generation, but that the audio field has lagged behind. AI systems that generate music from text have been announced many times in the past, but according to Meta they were complicated and not very open, which made it difficult for people to try them out.

Generating high-fidelity audio requires modeling complex signals and patterns at various scales. Music in particular is a difficult type of audio to generate because it has a musical structure made up of sequences of notes and multiple instruments. According to Meta, the samples used in text-based generative models consist of a few thousand timesteps each, whereas a typical music track of a few minutes recorded at the standard-quality sampling rate of 44.1 kHz consists of millions of timesteps.
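The "millions of timesteps" figure follows directly from the sampling rate. The short Python sketch below works through the arithmetic for a single mono channel; the three-minute duration and the function name `audio_timesteps` are illustrative assumptions, not values or code from Meta.

```python
# Rough arithmetic behind the "millions of timesteps" claim.
# Assumption: one timestep = one raw audio sample of a mono signal.

SAMPLE_RATE_HZ = 44_100  # standard-quality sampling rate cited in the article


def audio_timesteps(duration_seconds: float, sample_rate_hz: int = SAMPLE_RATE_HZ) -> int:
    """Return the number of raw audio samples in a clip of the given length."""
    return int(round(duration_seconds * sample_rate_hz))


# Hypothetical example: a three-minute track.
three_minute_track = audio_timesteps(3 * 60)
print(three_minute_track)  # 7938000
```

At this rate even one second of audio is 44,100 timesteps, which is already far more than the few thousand timesteps Meta cites for a text sample.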

In response, Meta announced 'AudioCraft', an open source audio generation AI tool made up of three models: 'MusicGen', which generates music; 'AudioGen', which generates sounds such as sound effects; and 'EnCodec', a neural network-based audio compression codec. Meta explains that MusicGen was trained on approximately 20,000 hours in total of Meta's music with metadata, and that AudioGen was trained on public sound effects.

Playing the video embedded in the post on X (formerly Twitter) below lets you listen to music that AudioCraft generated from prompts such as 'Movie-scene in a desert with percussion', '80s electric with drum beats', and 'Jazz instrumental, medium tempo, spirited piano'.

Today we're sharing details about AudioCraft, a family of generative AI models that lets you easily generate high-quality audio and music from text. https://t.co/04XAq4rlap pic.twitter.com/JreMIBGbTF

—Meta Newsroom (@MetaNewsroom) August 2, 2023

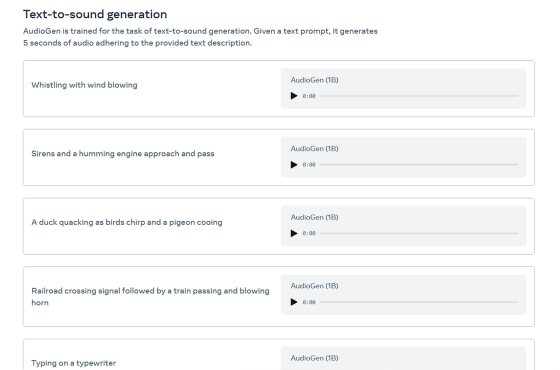

On the official site, you can also listen to various sound effects that AudioCraft generated from prompts such as 'Whistling with wind blowing' and 'Sirens and a humming engine approach and pass'.

AudioCraft's models are open source and available for people to use for research purposes and to improve their understanding of the technology. In a blog post, Meta said, 'By sharing AudioCraft's code, other researchers will be able to more easily test new approaches to limit or eliminate potential biases and misuse of generative models,' and added, 'Responsible innovation cannot happen in isolation. By open sourcing our research and resulting models, we can ensure equal access for everyone.'

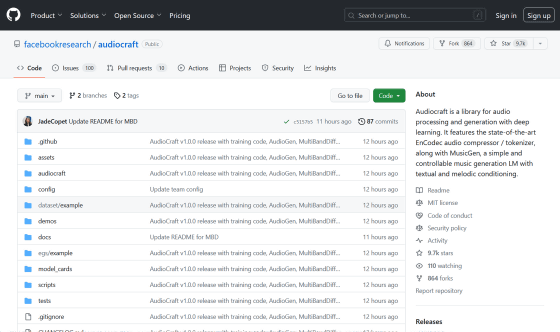

The AudioCraft code can be viewed in the following GitHub repository.

GitHub - facebookresearch/audiocraft: Audiocraft is a library for audio processing and generation with deep learning. It features the state-of-the-art EnCodec audio compressor / tokenizer, along with MusicGen, a simple and controllable music generation LM with textual and melodic conditioning.

https://github.com/facebookresearch/audiocraft

in Software, Web Service, Video, Art, Posted by log1h_ik