A music generation model called "Music ControlNet" has been announced that can control elements that change over time, such as melody and tempo, in addition to text.

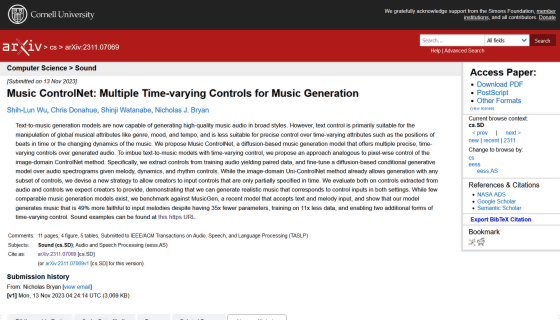

In recent years, AI tools that generate music from text have made progress, and it is now possible to generate high-quality music in a variety of styles. However, it has been difficult to control attributes that change over time. A research team from Carnegie Mellon University and Adobe Research has announced a music generation model called Music ControlNet that enables multiple time-varying controls.

[2311.07069] Music ControlNet: Multiple Time-varying Controls for Music Generation

Music ControlNet

https://musiccontrolnet.github.io/web/

There have been many AI models that generate music based on text, and Meta has also announced AudioCraft, an open source tool that generates music and sound effects from text. However, the research team says that text-based controls are primarily suited for manipulating global musical attributes such as genre, mood, and tempo, and are not well suited to precise control of attributes that change over time, such as the temporal placement of beats or changes in musical dynamics.

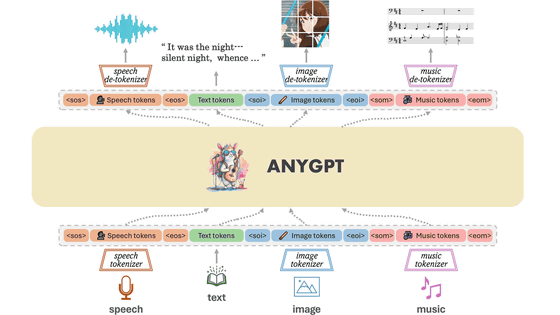

Therefore, the research team developed Music ControlNet, a music generation model based on a diffusion model that provides control over multiple temporal attributes of audio. Music ControlNet uses an approach similar to a neural network called "ControlNet" to incorporate temporal control into a model that generates music from text.

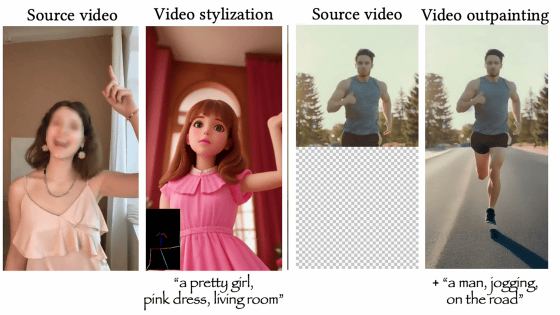

ControlNet is a technique that steers the output of a pre-trained model by supplying additional information such as contours, depth, and image segmentation maps. Combined with an image generation model, it can improve the quality of the generated images. The research team applied this idea to a model that generates music rather than images.
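To make the idea concrete, here is a toy sketch of a ControlNet-style adapter in plain Python. It is not the research team's implementation. It assumes the commonly described design in which the pre-trained layer stays frozen and a trainable side branch is connected through a zero-initialized output, so the combined model initially behaves exactly like the original. The names `frozen_layer`, `ControlBranch`, and `controlled_layer` are hypothetical.

```python
# Toy illustration of a ControlNet-style adapter (all names are hypothetical).

def frozen_layer(x):
    """Stand-in for a pre-trained layer whose weights are never updated."""
    return [2.0 * v + 1.0 for v in x]


class ControlBranch:
    """Trainable side branch that mixes a control signal into the features."""

    def __init__(self):
        # Assumption: the output scale starts at zero, so the branch
        # contributes nothing until training moves it away from zero.
        self.out_scale = 0.0

    def __call__(self, x, control):
        hidden = [xv + cv for xv, cv in zip(x, control)]
        return [self.out_scale * h for h in hidden]


def controlled_layer(x, control, branch):
    """Frozen output plus the branch's correction."""
    base = frozen_layer(x)
    delta = branch(x, control)
    return [b + d for b, d in zip(base, delta)]


x = [0.5, -1.0, 2.0]
control = [1.0, 0.0, -1.0]
branch = ControlBranch()

before = controlled_layer(x, control, branch)  # identical to frozen_layer(x)
branch.out_scale = 0.1                         # pretend training updated the branch
after = controlled_layer(x, control, branch)   # now influenced by the control signal
```

Starting from a zero contribution means adding the control branch cannot degrade the pre-trained model at the beginning of training; the control only takes effect as the branch learns.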

You can see what kind of music generation model 'Music ControlNet' is by watching the video below.

Music ControlNet: Multiple Time-varying Controls for Music Generation - YouTube

Conventional text-to-music models simply generate plausible-sounding music from a text prompt such as 'Powerful rock.'

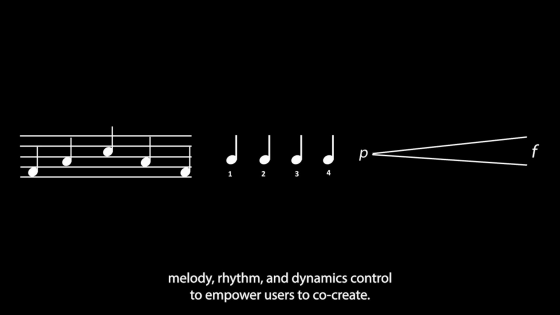

Music ControlNet is a new music generation model that also has the ability to control temporal attributes such as melody, rhythm, and dynamics.

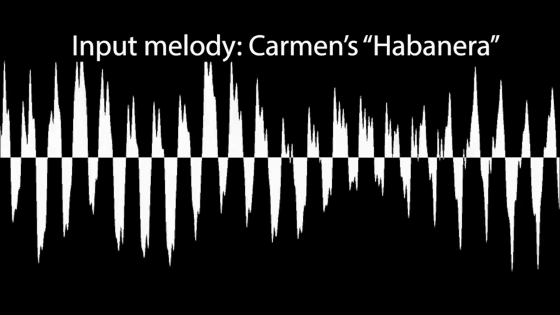

With Music ControlNet, you can input not only text but also your favorite melody. In the video, as an example, the melody of

You can combine the input melody with text such as 'Angry, Hip-hop' to create a hip-hop sound while maintaining the melody.

You can also change the text to something like 'Sexy, Electronic' while keeping the melody as is.

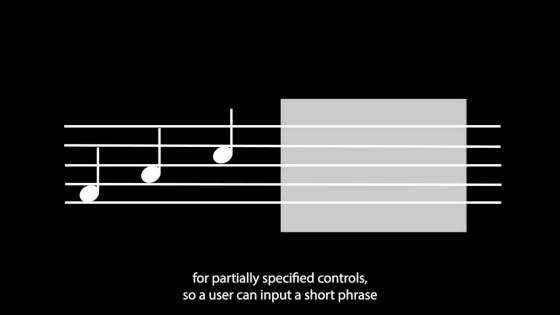

Music ControlNet also makes it possible to control only part of the music and leave the rest to the AI.

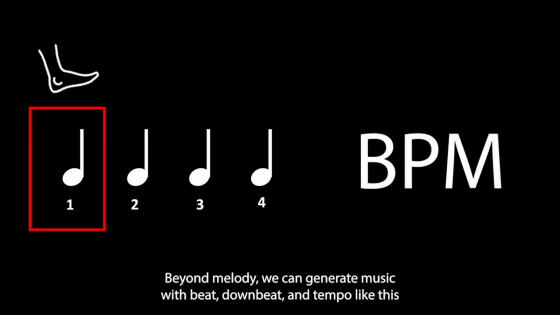
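One way to picture partial control is as a per-frame control signal with a mask over time. The sketch below is only an illustration under that assumption, not the model's actual interface. The function `mask_control` and the example pitch values are hypothetical, and `None` marks frames left for the model to fill in freely.

```python
def mask_control(control, start, end):
    """Keep control values for frames in [start, end); mark the rest as free."""
    return [v if start <= i < end else None for i, v in enumerate(control)]


# Hypothetical per-frame melody, written as MIDI note numbers.
melody = [60, 62, 64, 65, 67, 69, 71, 72]

# Constrain only frames 2 to 4; everything else is left unconstrained.
partial = mask_control(melody, 2, 5)
```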

You can also adjust the tempo, beat, and BPM.

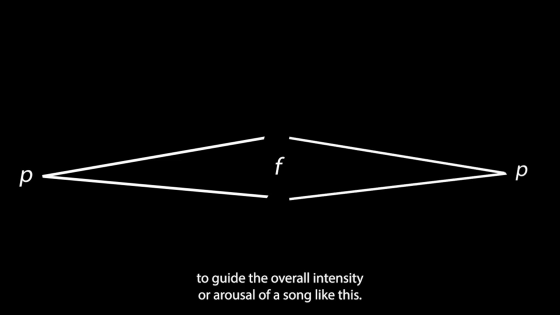

You can also specify the dynamics of the music.

When I asked it to get louder and louder, it started off with a low-key tune.

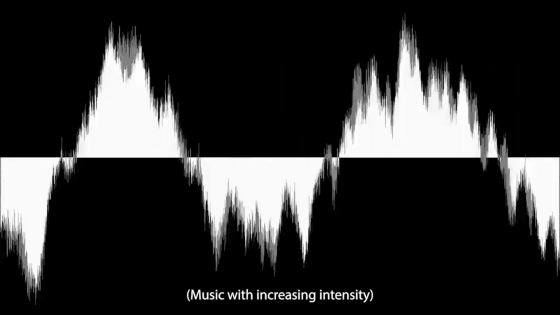

Then, the melody gradually became more intense.

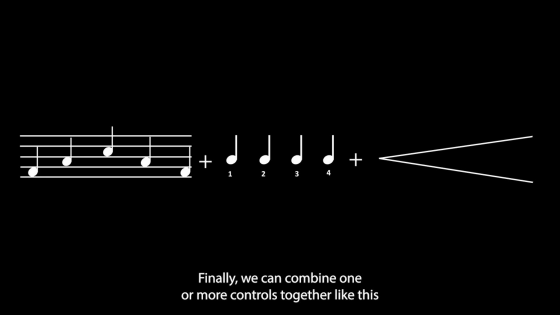
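A "louder and louder" request like this can be thought of as a loudness curve that rises over time. The following sketch builds such a curve as a simple linear ramp in decibels. The function `crescendo_db`, the frame count, and the dB values are illustrative assumptions and are not taken from the paper.

```python
def crescendo_db(n_frames, start_db, end_db):
    """Return a per-frame loudness target that rises linearly from start_db to end_db."""
    if n_frames == 1:
        return [start_db]
    step = (end_db - start_db) / (n_frames - 1)
    return [start_db + i * step for i in range(n_frames)]


# Five frames, from quiet (-30 dB) to loud (-10 dB).
curve = crescendo_db(5, -30.0, -10.0)
```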

You can also control multiple elements at the same time.
