What is the 'correct way to use AI', as explained by a Google DeepMind researcher?

How I Use 'AI'

https://nicholas.carlini.com/writing/2024/how-i-use-ai.html

Nicholas Carlini, a researcher at Google DeepMind, feels that large language models (LLMs) are 'overrated' by the public. However, as a heavy LLM user he also vouches for their capabilities, writing, 'As someone who has spent at least a few hours a week using LLMs for the past year, I am impressed with their ability to solve the difficult tasks I give them.' He adds, 'LLMs have made writing code at least 50% faster for both research projects and side projects,' pointing out that LLMs can greatly improve the efficiency of a user's work.

In his blog post, Carlini explains 'the correct way to use LLMs'. He gives the following examples of how he uses them, which he says can be roughly categorized into 'learning support' and 'automation of tedious tasks'.

- Build a web app using technologies you have never used before.

- Learn how to use various frameworks that you have not used before.

- Convert dozens of programs to C or Rust, improving performance by 10-100x.

- Trim down large code bases and greatly simplify projects.

- Write the initial experimental code for almost every research paper written in the past year.

- Automate almost any mundane task or one-off script.

- Almost completely replace web searches when setting up and configuring new packages and projects.

- Replace approximately 50% of web searches for help debugging error messages.

Carlini cautions, 'These examples are just the ones I was able to use the LLM for. That is, they were tasks born out of the need to do real work, not tasks designed to showcase the LLM's impressive capabilities. So the examples are not sexy. And it shows that the majority of the work I do every day is not sexy, and can be largely automated with the currently available LLMs.'

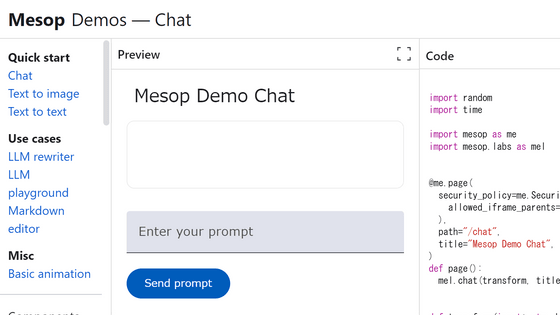

◆ Build an app

In 2023, Carlini used GPT-4 to create a quiz app.

In his prompts, Carlini described the elements he wanted in plain words and asked GPT-4 to implement them. Examples of prompts that were actually used include 'How can I use JavaScript to add an inline frame whose content is loaded from a string?'

'LLMs are great at solving problems that people have solved before,' Carlini wrote. '99% of the quiz app is basic HTML with a Python web server backend that anyone in the world could write. The reason this quiz app became popular is not because the technology behind it is good, but because the quizzes are fun. By automating the boring parts of app development with an LLM, it has become very easy to create quiz apps.'

Carlini also wrote that he would never have created the quiz app if he had not been able to build it with an LLM.
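To give a sense of how little is involved in 'basic HTML with a Python web server backend', here is a minimal sketch using only Python's standard library. It is not Carlini's code: the questions, URL layout, and the names `QUESTIONS`, `find_question`, `render_question`, `grade`, `QuizHandler`, and `serve` are all hypothetical and chosen purely for illustration.

```python
import html
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import parse_qs

# Hypothetical quiz data (assumption: yes/no questions with a known answer).
QUESTIONS = [
    {"id": 0, "text": "Is 17 a prime number?", "answer": True},
    {"id": 1, "text": "Is 21 a prime number?", "answer": False},
]


def find_question(token):
    """Return the question whose index is `token`, or None if it is invalid."""
    try:
        index = int(token)
    except ValueError:
        return None
    return QUESTIONS[index] if 0 <= index < len(QUESTIONS) else None


def render_question(q):
    """Return a small HTML page with a yes/no form for one question."""
    return (
        "<!doctype html><html><body>"
        f"<p>{html.escape(q['text'])}</p>"
        f"<form method='post' action='/answer/{q['id']}'>"
        "<button name='guess' value='yes'>Yes</button>"
        "<button name='guess' value='no'>No</button>"
        "</form></body></html>"
    )


def grade(q, guess):
    """Return True if the submitted guess ('yes' or 'no') matches the answer."""
    return (guess == "yes") == q["answer"]


class QuizHandler(BaseHTTPRequestHandler):
    def _send(self, body, status=200):
        data = body.encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "text/html; charset=utf-8")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)

    def do_GET(self):
        # "/" shows the first question; "/1" shows the second, and so on.
        q = find_question(self.path.strip("/") or "0")
        if q is None:
            self._send("Not found", 404)
        else:
            self._send(render_question(q))

    def do_POST(self):
        # Expects the form above to post to "/answer/<question index>".
        parts = self.path.strip("/").split("/")
        q = find_question(parts[1]) if len(parts) == 2 and parts[0] == "answer" else None
        if q is None:
            self._send("Not found", 404)
            return
        length = int(self.headers.get("Content-Length", 0))
        form = parse_qs(self.rfile.read(length).decode("utf-8"))
        guess = form.get("guess", [""])[0]
        self._send("Correct!" if grade(q, guess) else "Wrong.")


def serve(port=8000):
    """Start the quiz server on localhost (blocks until interrupted)."""
    HTTPServer(("127.0.0.1", port), QuizHandler).serve_forever()
```

Calling `serve()` and opening `http://127.0.0.1:8000/` in a browser would show the first question. Everything here is boilerplate of the kind the article describes; what would make such an app interesting is the quiz content itself.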

◆ Learning support

To tackle something new, you need to acquire new knowledge about it, but there are only so many hours in a day. LLMs are useful in such situations. For example, Carlini argues that, rather than trying to fully understand relatively new frameworks and tools such as Docker, Flexbox, and React, it is much more efficient to use an LLM to learn just what is needed to solve the task at hand.

Carlini needed to use Docker to complete a personal project, but he had never used it before and knew very little about it. However, he did not need to fully understand Docker to complete the project; he said he only needed to understand about 10% of it.

If this were the 1990s, you would have had to buy a book explaining the basics of Docker and read the first few chapters. In the 2010s or so, you might have solved your problem by searching the internet for a tutorial on how to use Docker, or by browsing the blogs of people who were using Docker for the same purpose as you.

With an LLM, however, you can learn only the parts of Docker you need. In fact, Carlini had ChatGPT teach him how to set up Docker, and the details of the exchange are summarized on the following page.

Docker VM Bash Setup

'Rather than evaluating what an LLM can't do, we need to evaluate what it can do,' Carlini said. Just as you wouldn't consider someone completely useless because they can't divide 64-bit integers in their head, the existence of tasks that an LLM can't solve doesn't mean that the LLM is incompetent. The question, Carlini said, is 'Can you find tasks where an LLM can provide value?'

He added, 'LLMs can't do everything. They can't even do most things. But today's LLMs are already quite valuable.' He continued, 'Most of the examples I gave are simple tasks that any computer science undergraduate could learn and do. But no one has a magical friend who is happy to answer any question they have about these tasks. An LLM can be that friend. An LLM still can't completely replace a programmer's work, but it can be a good substitute for simple tasks.'

Carlini also notes that he has described 'less than 2%' of the cases in which he has used LLMs, and argues that there are many other ways to use them beyond the examples he has given.

in Software, Posted by logu_ii