Meta explains what they paid attention to and how they approached training large-scale language models

Meta released a blog post explaining how it trains large language models at scale.

How Meta trains large language models at scale - Engineering at Meta

https://engineering.fb.com/2024/06/12/data-infrastructure/training-large-language-models-at-scale-meta/

Meta has long trained various AI models for the recommendation systems on Facebook and Instagram, but these were small-scale models that needed a relatively small number of GPUs. Large-scale language models such as the Llama series, by contrast, require huge amounts of data and GPUs.

◆Challenges in training large-scale language models

Meta says that training a large-scale language model, unlike training traditional small-scale AI models, requires overcoming four challenges:

- Hardware reliability: As the number of GPUs in a job increases, so does the chance that a hardware failure interrupts training. Minimizing that chance requires rigorous testing and quality control, along with automated processes for detecting and fixing issues.

- Rapid recovery in the event of a failure: Despite best efforts, hardware failures will occur, so it is important to prepare for recovery in advance, for example by keeping rescheduling overhead low and reinitializing training quickly.

- Efficiently saving training state: Training state needs to be checkpointed periodically and saved efficiently so that, in case of failure, training can be resumed from the point where it was interrupted.

- Optimal connectivity between GPUs: Training large language models requires synchronizing and transferring large amounts of data between GPUs, which calls for a robust, high-speed network infrastructure and efficient data transfer protocols and algorithms.
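The idea of saving training state so that a job can resume after a failure can be illustrated with a minimal sketch. This is not Meta's implementation: the function names, the JSON file format, and the toy `weight` value (standing in for model and optimizer state) are all assumptions made for illustration.

```python
import json
import os
import tempfile


def save_checkpoint(path, state):
    """Write the state atomically so a crash mid-write cannot corrupt it."""
    directory = os.path.dirname(os.path.abspath(path))
    # Write to a temporary file in the same directory, then rename it
    # over the target so readers only ever see a complete checkpoint.
    fd, tmp_path = tempfile.mkstemp(dir=directory, suffix=".tmp")
    with os.fdopen(fd, "w") as f:
        json.dump(state, f)
        f.flush()
        os.fsync(f.fileno())
    os.replace(tmp_path, path)


def load_checkpoint(path):
    """Return the last saved state, or a fresh state if none exists."""
    if os.path.exists(path):
        with open(path) as f:
            return json.load(f)
    return {"step": 0, "weight": 0.0}


def train(path, total_steps, checkpoint_every, fail_at=None):
    """Toy loop: 'weight' is a hypothetical stand-in for real training state."""
    state = load_checkpoint(path)
    while state["step"] < total_steps:
        if fail_at is not None and state["step"] == fail_at:
            raise RuntimeError("simulated hardware failure")
        state["weight"] += 0.5  # placeholder for one real training step
        state["step"] += 1
        if state["step"] % checkpoint_every == 0:
            save_checkpoint(path, state)
    save_checkpoint(path, state)
    return state
```

If a run with `checkpoint_every=3` fails at step 7, the next call to `train` picks up from the checkpoint written at step 6, so only one step of work is repeated. The checkpoint interval is the trade-off knob: saving more often loses less work on failure but spends more time writing state.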

◆Infrastructure Innovation

Meta says that to overcome the challenges of large-scale language models, it was necessary to improve its entire infrastructure. For example, Meta allows researchers to use the machine learning library

Of course, to provide the computing power required for training large-scale language models, it is also essential to optimize the configuration and attributes of high-performance hardware for generative AI. In addition, because the power and cooling infrastructure of an existing data center is difficult to change, deploying a GPU cluster there requires weighing trade-offs and finding a layout that also works for other types of workloads.

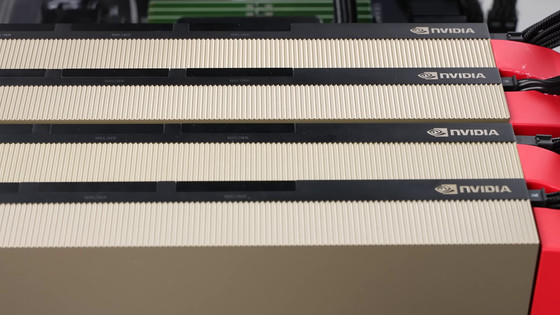

Meta has revealed that it has built a GPU cluster equipped with 24,576 NVIDIA H100 GPUs and is using them to train large-scale language models, including Llama 3.

Meta releases information about a GPU cluster equipped with 24,576 'NVIDIA H100 GPUs' and used for training 'Llama 3' and other models - GIGAZINE

◆Reliability Issues

To minimize downtime in the event of hardware failure, it is necessary to plan in advance how to detect and fix the problem. The number of failures is proportional to the size of the GPU cluster, and when running jobs that span the cluster, spare resources for restart should be reserved. The three most common failures observed by Meta are:

- Degraded GPU performance: There are several possible causes for this issue, but it is most commonly seen in the early stages of running a GPU cluster and tends to subside as the servers age.

- Uncorrectable errors in DRAM and SRAM: Uncorrectable memory errors are common, so it is necessary to monitor for them constantly and request a replacement from the vendor once a threshold is exceeded.

- Network cable problems: These are seen most frequently in the early stages of a server's life.
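The "monitor and replace once a threshold is exceeded" policy for uncorrectable memory errors can be sketched as follows. The threshold value, the event format, and the host names are hypothetical; the article does not say what threshold Meta or its vendors actually use.

```python
from collections import Counter

# Hypothetical threshold: not a value published by Meta or any vendor.
UNCORRECTABLE_ERROR_THRESHOLD = 3


def hosts_needing_replacement(error_events,
                              threshold=UNCORRECTABLE_ERROR_THRESHOLD):
    """Return hosts whose uncorrectable-error count exceeds the threshold.

    error_events is an iterable of (host, kind) pairs; only events whose
    kind is "uncorrectable" are counted.
    """
    counts = Counter(
        host for host, kind in error_events if kind == "uncorrectable"
    )
    return sorted(host for host, n in counts.items() if n > threshold)
```

For example, a host with four uncorrectable errors would be flagged under the assumed threshold of 3, while a host with only correctable errors would not, however many it has logged.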

◆Network

For rapid transfer of huge amounts of data between GPUs, there are two options: 'RDMA over Converged Ethernet (RoCE)' and 'InfiniBand.' Meta had been running RoCE in production for four years, but its largest RoCE-based GPU cluster supported only 4,000 GPUs. Its research GPU cluster, by contrast, was built with InfiniBand and had 16,000 GPUs, but it was not integrated into the production environment and was not built for the latest generation of GPUs or networks.

Faced with the choice of which network to build on, Meta decided to build both: two clusters of about 24,000 GPUs each, one with RoCE and one with InfiniBand. Meta said the aim was to accumulate operational experience with both networks and apply what it learned in the future.
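To see why so much data moves between GPUs, consider the gradient synchronization step of data-parallel training, in which every worker must end up with the same averaged gradient. The sketch below simulates only the result of that exchange (often called an all-reduce) in plain Python; it is an illustrative assumption about the workload, not a description of how RoCE, InfiniBand, or Meta's training software implements it.

```python
def all_reduce_mean(worker_grads):
    """Simulate an all-reduce: every worker receives the element-wise mean.

    worker_grads is a list with one gradient vector (list of floats) per
    worker; all vectors must have the same length.
    """
    n = len(worker_grads)
    # Sum each gradient element across workers, then divide by worker count.
    mean = [sum(values) / n for values in zip(*worker_grads)]
    # Each worker gets its own copy of the averaged gradient.
    return [list(mean) for _ in range(n)]
```

In a real job the gradient vector has as many entries as the model has parameters, and this exchange happens on every training step, which is why the bandwidth and latency of the GPU interconnect matter so much.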

◆Future Outlook

Meta said, 'Over the next few years, we will use hundreds of thousands of GPUs, handle even larger volumes of data, and deal with longer distances and latencies. We will adopt new hardware technologies, including new GPU architectures, and evolve our infrastructure,' adding that it will continue to push the boundaries of AI.
