Stanford University releases 'AI Index Report 2024,' summarizing that 'AI outperforms humans on some tasks, but humans still do better on some tests' and 'training high-performance AI costs tens of billions of yen.'

AI research and development is advancing rapidly, and high-performance AI systems such as 'AI capable of both everyday conversation and coding,' 'AI capable of generating high-quality images,' and 'AI capable of controlling robots with high precision' are appearing one after another. Stanford University has released the 'AI Index Report 2024,' which summarizes the current state of AI.

AI Index Report 2024 – Artificial Intelligence Index

Stanford University has published the AI Index Report every year since 2017 to summarize the capabilities of AI and the state of AI research. The AI Index Report 2024, released on April 15, 2024, analyzes a large body of data on AI through 2023 and runs to 502 pages. The main points of the report are as follows:

◆01: AI can outperform humans in some tasks, but not all tasks

◆02: Companies are taking the lead over academic institutions in developing cutting-edge AI

◆03: The costs of training AI are becoming extremely high

◆04: Most of the notable AI models come from the US, followed by China

◆05: There is a lack of standardized assessment criteria for AI safety

◆06: Investment in generative AI is soaring

◆07: AI will increase worker productivity

◆08: AI is accelerating scientific progress

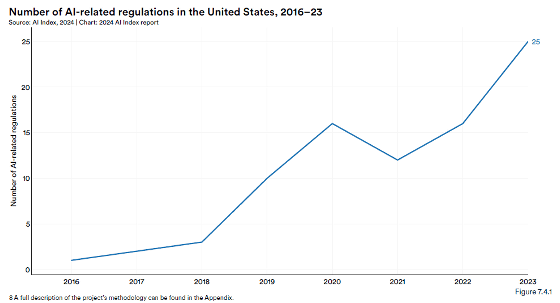

◆09: AI regulations are rapidly increasing in the US

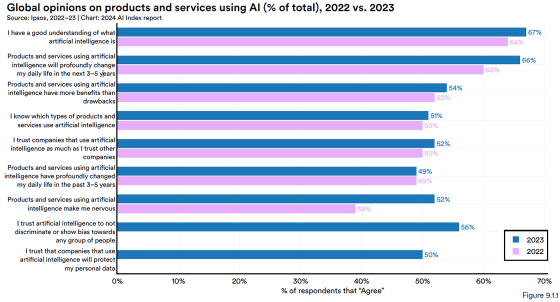

◆10: People around the world are aware of the impact of AI

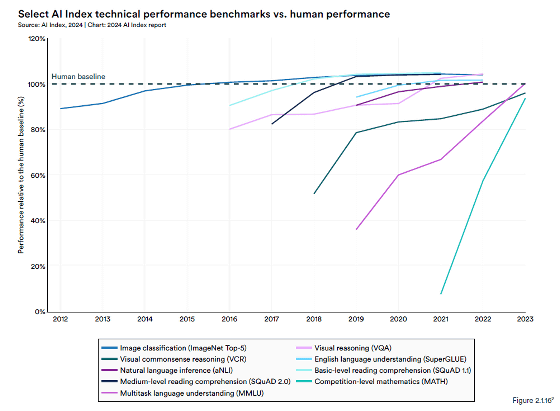

◆01: AI can outperform humans in some tasks, but not all tasks

Below are the results of comparing human and AI scores on nine benchmarks that measure language processing and image processing performance. The horizontal axis shows the year of measurement, and the vertical axis shows AI performance as a percentage of the human baseline (human = 100%). Each benchmark was run on multiple AI models, and the highest score recorded on each benchmark was plotted. The graph shows that AI now outscores humans on tasks such as basic language processing and image recognition. On the other hand, humans still score higher on visual commonsense reasoning (VCR), which tests judgment based on everyday common sense, and on competition-level mathematics (MATH).

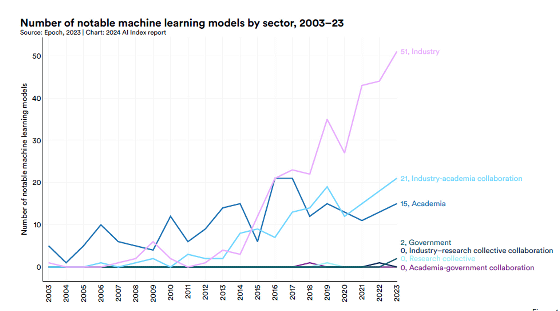
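The normalization used in the graph above can be illustrated with a short calculation: for each benchmark, keep the highest score among the AI models tested and express it as a percentage of the human baseline, so that values above 100% mean AI outscored humans. The following is a minimal Python sketch under that reading of the graph; the function name `relative_to_human`, the model names, and all scores are hypothetical placeholders, not data from the report.

```python
# Minimal sketch of "AI performance relative to the human baseline (human = 100%)".
# All benchmark names, model names, and scores are HYPOTHETICAL placeholders,
# not figures from the AI Index Report 2024.


def relative_to_human(model_scores: dict[str, float], human_score: float) -> float:
    """Return the best model score as a percentage of the human baseline."""
    best = max(model_scores.values())  # keep only the top-scoring AI on this benchmark
    return best / human_score * 100


# Hypothetical results: (scores of several AI models, human baseline) per benchmark.
benchmarks = {
    "benchmark_x": ({"model_a": 72.0, "model_b": 81.0}, 90.0),  # best AI below humans
    "benchmark_y": ({"model_a": 95.0, "model_b": 88.0}, 80.0),  # best AI above humans
}

for name, (scores, human) in benchmarks.items():
    pct = relative_to_human(scores, human)
    status = "AI ahead" if pct > 100 else "humans ahead"
    print(f"{name}: {pct:.1f}% of human baseline ({status})")
```

In this sketch, benchmark_x comes out at 90% (humans ahead) and benchmark_y above 100% (AI ahead), which is how a line crossing the 100% mark on the graph should be read.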

◆02: Companies are taking the lead over academic institutions in developing cutting-edge AI

Of the notable machine learning models of 2023 compiled by Stanford University, 51 were developed by companies, 21 through collaborations between companies and academic institutions, and 15 by academic institutions.

◆03: The costs of training AI are becoming extremely high

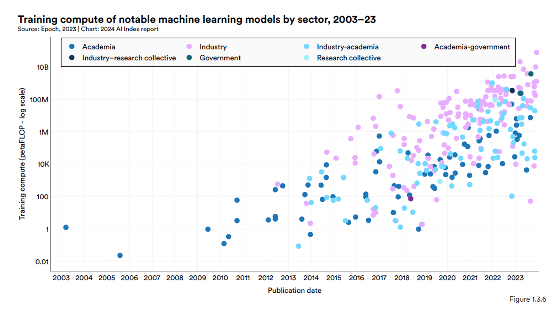

Below is a graph summarizing the amount of computation (FLOPs) used to train notable machine learning models. Training compute is on the rise, and corporate models (pink) tend to be trained with more compute than academic models (blue).

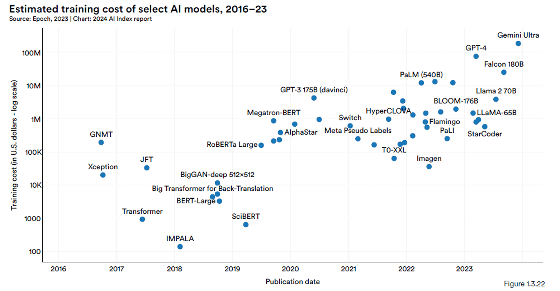

Below is a graph summarizing the training costs of machine learning models. As models grow larger and require more compute, training costs are climbing: the report estimates that training GPT-4 cost about $78 million (about 12.1 billion yen) and training Gemini Ultra cost about $191 million (about 29.5 billion yen).

◆04: Most of the notable AI models come from the US, followed by China

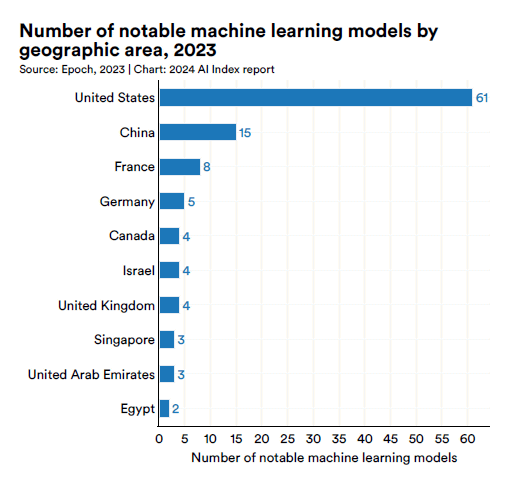

Broken down by the country where they were developed, notable machine learning models in 2023 came from the United States (61 models), China (15), France (8), and Germany (5).

◆05: There is a lack of standardized evaluation criteria for AI safety

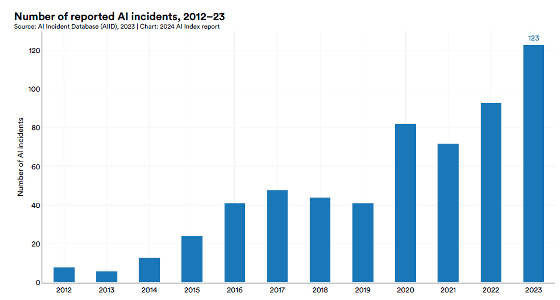

AI can pose risks to humans, and incidents involving AI have already occurred.

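Stanford University argues that standardized evaluation criteria are needed to properly assess the safety of AI, noting that leading developers such as OpenAI, Google, and Anthropic currently test their models against different responsible-AI benchmarks, which makes it difficult to compare the risks and limitations of different models.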

◆06: Investment in generative AI is soaring

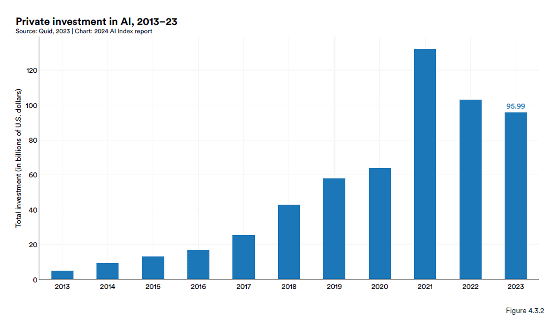

Total private investment in AI across all categories has been trending downward since peaking in 2021.

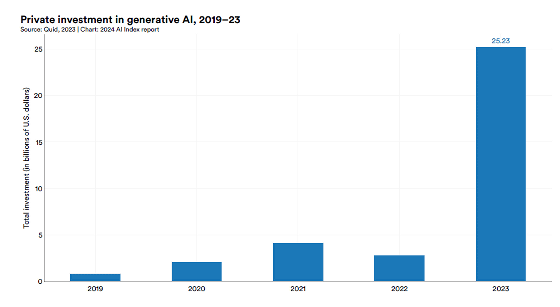

In contrast, private investment in generative AI alone surged in 2023, reaching $25.23 billion (about 3.9 trillion yen).

◆07: AI will increase worker productivity

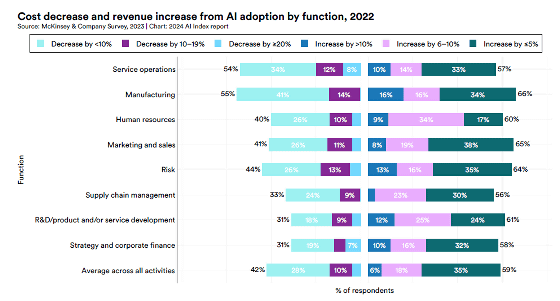

The graph below shows, by industry, the percentage of surveyed companies that reported AI had reduced their costs or increased their revenue. In every industry, more than half of respondents reported increased revenue, and in the service and manufacturing industries, more than half also reported reduced costs.

◆08: AI is accelerating scientific progress

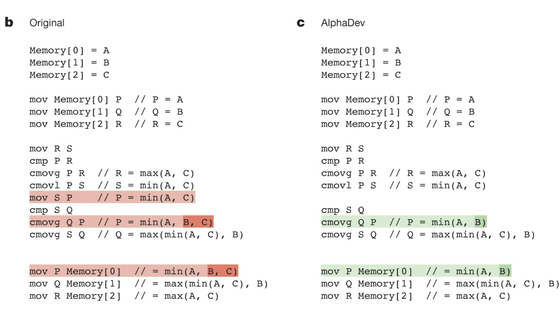

In 2023, AI systems capable of advancing science were announced, such as the algorithm-improvement AI 'AlphaDev' and the materials-discovery AI 'GNoME.'

AlphaDev is an AI developed by Google DeepMind that can improve algorithms by finding approaches that would be difficult for humans to discover. Details of AlphaDev are summarized in the following article.

Like AlphaDev, GNoME is an AI developed by Google DeepMind that can efficiently discover 'chemically stable structures.' In November 2023, it was reported that 2.2 million crystal structures had been discovered using GNoME.

Google DeepMind uses AI tools to discover 2.2 million new crystal structures, more than 45 times the number previously discovered - GIGAZINE

◆09: AI regulations are rapidly increasing in the US

In 2016, the US had only one AI-related regulation, but in 2023 the number reached 25.

◆10: People around the world are aware of the impact of AI

Below is a graph comparing survey responses about the impact of AI on respondents' own lives in 2023 (blue) and 2022 (red). The share of respondents who answered 'I have a good understanding of AI' rose from 64% in 2022 to 67% in 2023, and the share who answered 'AI-based services will change my life in the next 3 to 5 years' rose from 60% in 2022 to 66% in 2023.

The full text of the AI Index Report 2024 can be viewed at the following link.

AI Index Report 2024

(PDF file) https://aiindex.stanford.edu/wp-content/uploads/2024/04/HAI_AI-Index-Report-2024.pdf



in Software, Posted by log1o_hf