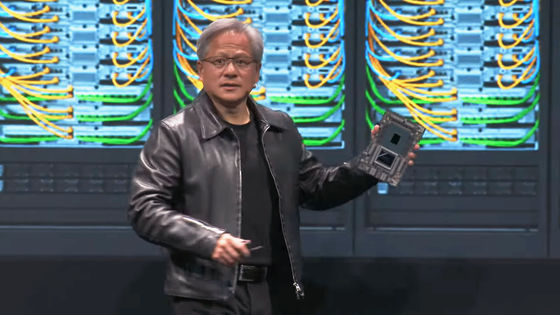

NVIDIA announces Blackwell GPU architecture and B200 GPU for trillion-parameter AI models

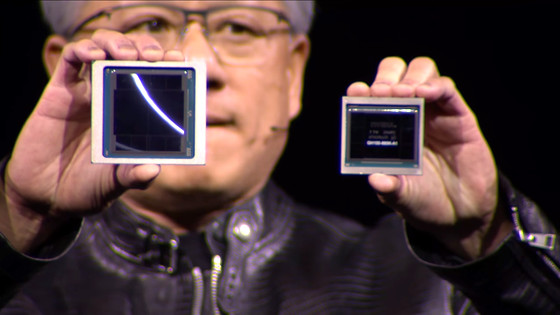

NVIDIA announced its first new GPU architecture in two years, Blackwell, and the Blackwell architecture-based GPU B200 at the technology conference.

Blackwell Architecture for Generative AI | NVIDIA

https://www.nvidia.com/ja-jp/data-center/technologies/blackwell-architecture/

The Blackwell architecture is the successor to the Hopper architecture, announced in 2022. It is named after mathematician and statistician David Blackwell.

NVIDIA announces next-generation GPU architecture 'Hopper', AI processing speed is six times faster than Ampere and other performance improvements are dramatically improved - GIGAZINE

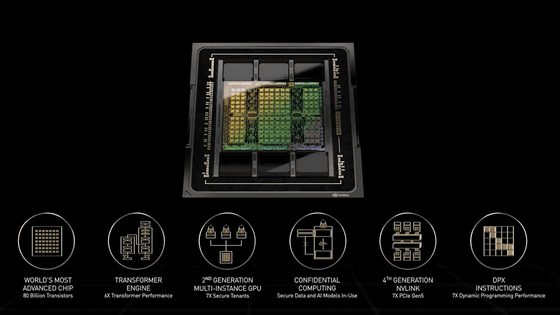

According to NVIDIA, Blackwell is equipped with six innovative technologies.

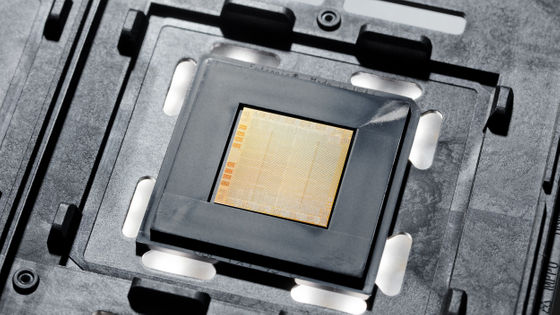

◆1: The world's most powerful chip

The Hopper architecture GPU H100 has 80 billion transistors and is manufactured on TSMC's 4nm process, while the Blackwell GPU has 208 billion transistors and is manufactured on an improved version of that 4nm process. Two GPU dies, each at the maximum manufacturable size, are connected by the 10TB/s high-speed interface 'NV-HBI' and operate as a single GPU.

◆2: Second-generation Transformer Engine

The dynamic range management algorithm, combined with

◆3: 5th generation NVLink

The latest version of the high-speed GPU interconnect, NVLink, delivers 1.8TB/s of bidirectional throughput per GPU, ensuring seamless, high-speed communication between up to 576 GPUs for massive LLMs.
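To put the NVLink figures in perspective, the following is a rough back-of-the-envelope sketch. Only the 1.8TB/s and 576-GPU values come from the article. The aggregate number is a naive sum that ignores topology and switch limits, and the even split between directions is an assumption made for illustration.

```python
# Figures quoted in the article.
NVLINK_TB_PER_S_PER_GPU = 1.8   # bidirectional throughput per GPU
MAX_GPUS = 576                  # largest NVLink-connected group mentioned


def aggregate_bandwidth_tb_per_s(num_gpus: int) -> float:
    """Naive upper bound: per-GPU bandwidth summed over all GPUs."""
    return num_gpus * NVLINK_TB_PER_S_PER_GPU


def per_direction_tb_per_s() -> float:
    """Assumption: the bidirectional figure splits evenly between directions."""
    return NVLINK_TB_PER_S_PER_GPU / 2


print(f"Aggregate (naive): {aggregate_bandwidth_tb_per_s(MAX_GPUS):.1f} TB/s")
print(f"Per direction (assumed): {per_direction_tb_per_s():.1f} TB/s")
```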

◆4: RAS Engine

Blackwell GPUs feature a dedicated RAS (Reliability, Availability, and Serviceability) engine. AI-based preventive maintenance capabilities added at the chip level increase resiliency, maximize system uptime, and reduce operating costs.

◆5: Secure AI

The advanced 'Confidential Computing' feature supports new native interface encryption protocols, providing strong hardware-based security to protect sensitive data and AI models from unauthorized access. Confidential Computing delivers throughput performance nearly equivalent to unencrypted mode.

◆6: Decompression engine

A dedicated decompression engine supports the latest compression formats, including LZ4, Snappy, and Deflate. Combined with high-speed access to the Grace CPU's large memory over a link with 900GB/s of bidirectional bandwidth, it accelerates database queries end to end, delivering the best performance for data analysis and data science.
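For readers unfamiliar with these formats, here is a minimal CPU-side sketch of a Deflate round trip using Python's standard `zlib` module. It is not the Blackwell decompression engine or any NVIDIA API. The sample rows are made up, and the sketch only shows the kind of decompression work that the hardware is described as offloading.

```python
import zlib

# Hypothetical sample data: repetitive rows, similar to a database column dump.
rows = b"".join(b"2024-03-18,B200,Blackwell\n" for _ in range(1000))

# zlib wraps a Deflate stream in a small header and checksum.
compressed = zlib.compress(rows, level=6)

# Decompression is the step a dedicated engine would take off the CPU.
restored = zlib.decompress(compressed)

ratio = len(rows) / len(compressed)
print(f"{len(rows)} bytes -> {len(compressed)} bytes (ratio {ratio:.1f}x)")
print("round trip ok:", restored == rows)
```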

The GPU based on this Blackwell architecture is the 'B200'.

Prior to the official announcement, Dell Vice Chairman Jeff Clarke mentioned this name in February 2024 in the context of NVIDIA developing a 1,000W GPU.

NVIDIA is developing a '1000W power consumption explosive GPU' - GIGAZINE

Platforms using B200 include the superchip 'GB200', which connects two B200s and one Grace CPU; the 'GB200 NVL72', which combines 72 B200s and 36 Graces; the integrated AI platform 'DGX B200', which connects eight B200s; and the server board 'HGX B200'. Also announced are the 'DGX GB200' system, which combines 36 GB200s, and the next-generation AI supercomputer 'DGX SuperPOD', built with DGX GB200.
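The component counts in this lineup can be cross-checked with a small sketch. The `Platform` class and `combine` helper are hypothetical names introduced for illustration, and expressing the GB200 NVL72 as 36 GB200 superchips is a derivation from the article's numbers (72 B200s and 36 Graces), not a statement taken from it.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Platform:
    name: str
    b200_gpus: int
    grace_cpus: int


# From the article: one GB200 superchip = two B200 GPUs + one Grace CPU.
GB200 = Platform("GB200", b200_gpus=2, grace_cpus=1)


def combine(name: str, unit: Platform, count: int) -> Platform:
    """Build a larger platform out of `count` copies of `unit`."""
    return Platform(name, unit.b200_gpus * count, unit.grace_cpus * count)


# Derived assumption: 72 B200s at two per superchip means 36 GB200s.
nvl72 = combine("GB200 NVL72", GB200, 36)
# From the article: DGX GB200 combines 36 GB200s.
dgx_gb200 = combine("DGX GB200", GB200, 36)

print(nvl72)
print(dgx_gb200)
```

Under these assumptions, 36 superchips reproduce the 72 GPUs and 36 CPUs quoted for the GB200 NVL72.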

NVIDIA GB200 NVL72 Delivers Trillion-Parameter LLM Training and Real-Time Inference | NVIDIA Technical Blog

https://developer.nvidia.com/blog/nvidia-gb200-nvl72-delivers-trillion-parameter-llm-training-and-real-time-inference/

NVIDIA Launches Blackwell-Powered DGX SuperPOD for Generative AI Supercomputing at Trillion-Parameter Scale | NVIDIA Newsroom

https://nvidianews.nvidia.com/news/nvidia-blackwell-dgx-generative-ai-supercomputing

The integrated AI platform 'DGX B200' is the sixth generation of the air-cooled, rack-mounted DGX platform. Equipped with eight B200 GPUs and two Intel Xeon processors, it delivers up to 144 PFLOPS of AI performance, 1.4TB of GPU memory, and 64TB/s of memory bandwidth, achieving real-time inference on trillion-parameter models 15 times faster than the Hopper generation.
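Dividing the DGX B200 system totals by its eight GPUs gives a rough idea of the per-GPU share. This is simple arithmetic on the rounded headline figures above, assuming an even split across GPUs and decimal units (1TB = 1000GB), so the results are approximations and not official per-GPU specifications.

```python
# System-level figures for DGX B200 quoted in the article.
NUM_GPUS = 8
TOTAL_AI_PFLOPS = 144
TOTAL_GPU_MEMORY_TB = 1.4
TOTAL_MEM_BW_TB_PER_S = 64

# Assumption: resources are split evenly across the eight GPUs.
per_gpu = {
    "ai_pflops": TOTAL_AI_PFLOPS / NUM_GPUS,
    "memory_gb": TOTAL_GPU_MEMORY_TB * 1000 / NUM_GPUS,
    "mem_bw_tb_per_s": TOTAL_MEM_BW_TB_PER_S / NUM_GPUS,
}

for key, value in per_gpu.items():
    print(f"{key}: {value:.1f}")
```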

'DGX SuperPOD' is a supercomputer that CEO Jensen Huang describes as 'the factory of the AI industrial revolution' and 'combines the latest advances in NVIDIA accelerated computing, networking and software to enable any company, industry or country to improve and generate its own AI.' The NVIDIA Quantum-2 InfiniBand architecture allows it to accommodate tens of thousands of GB200 chips.

It also supports the separately announced Quantum-X800 InfiniBand networking, providing up to 1,800GB/s of bandwidth to each GPU in the platform.

In addition, the fourth-generation SHARP (Scalable Hierarchical Aggregation and Reduction Protocol) technology delivers 14.4 TFLOPS of in-network computing performance, four times that of the previous generation.
