More than 250 top AI researchers sign an open letter calling for protection of 'the right of independent researchers to investigate OpenAI's and Meta's AI models'

In recent years, many technology companies have released generative AI models, including OpenAI's

A Safe Harbor for Independent AI Evaluation

https://sites.mit.edu/ai-safe-harbor/

A Safe Harbor for AI Evaluation and Red Teaming | Knight First Amendment Institute

https://knightcolumbia.org/blog/a-safe-harbor-for-ai-evaluation-and-red-teaming

Top AI researchers ask OpenAI, Meta and more to allow independent research - The Washington Post

https://www.washingtonpost.com/technology/2024/03/05/ai-research-letter-openai-meta-midjourney/

Many AI researchers and some AI development companies recognize that generative AI models pose serious risks, including deepfakes, fake news, and fraud. Preventing such misuse requires good-faith research by independent researchers. However, the terms of use of AI models from companies such as OpenAI, Meta, Google, and Midjourney prohibit much of the work researchers do to identify problems such as circumvention of safeguards, misuse of models, and copyright infringement.

In fact, when The New York Times sued OpenAI for copyright infringement, alleging that AI from OpenAI and Microsoft output text imitating its articles, OpenAI countered that 'to generate this, they used deceptive prompts that clearly violate OpenAI's terms of use,' and called The New York Times' actions 'hacking.'

In the OpenAI vs. New York Times trial, OpenAI claims that 'The New York Times hacked ChatGPT to extract its own articles' - GIGAZINE

If researchers conduct research in a way that an AI model's terms of use prohibit, they risk account suspension and legal liability, even when the research is in good faith and in the public interest. Furthermore, even if AI developers never take decisive action against researchers, the letter argues that the mere existence of such terms can deter researchers and stall efforts to identify the risks of AI models.

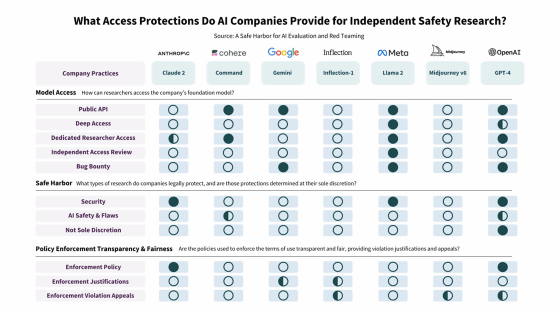

Some AI developers, such as OpenAI, offer special access programs for researchers who examine the risks of AI models, but the companies themselves still decide which researchers to admit, which leaves room for arbitrary selection. In addition, companies such as Meta and Anthropic state that they have final discretion to determine whether a researcher is acting in good faith under their terms of use, which could lead to inconvenient research being suppressed.

Furthermore, these legal protections mainly cover only security issues such as unauthorized account access. Investigations such as 'how safeguards can be circumvented through prompt abuse or lesser-used languages,' 'how to circumvent the safeguards built into AI models,' 'bias,' and 'whether AI models may infringe copyright' do not appear to be covered.

The table below summarizes the researcher access and safe harbors offered by major AI developers. Open (white) circles indicate items that are not provided, black circles indicate items that are provided, and half-black circles indicate items that are partially provided. Most AI developers do not provide 'Independent Access Review' (the right to independent review of access, 4th row from the top) or 'AI Safety & Flaws' (a safe harbor for AI safety and flaw research, 7th row from the top). Among them, Midjourney (second from the right) and Inflection (fourth from the left) provide few items, while OpenAI (far right) and Meta (third from the right) provide relatively many but still do not cover everything.

To address these issues, AI researchers and legal experts jointly published an open letter calling for a 'safe harbor for independent AI evaluation.' The letter calls for legal protection of good-faith, independent AI safety research that follows established vulnerability disclosure rules, and asks that researchers' requests to investigate AI models be reviewed by independent reviewers rather than in-house by the companies themselves.

The open letter has already been signed by more than 250 AI researchers and legal experts, including Stanford University computer scientist Percy Liang, Pulitzer Prize-winning journalist Julia Angwin, and former Member of the European Parliament Marietje Schaake.

Mozilla, which develops the Firefox browser, also reported that its managing director Mark Surman and AI researcher Tarcízio Silva have signed the open letter. "Many AI researchers are calling on organizations to provide basic protections and fairer access for independent testing of generative AI models," Mozilla said, calling on those who agree to sign the letter.

Mozilla's @msurman, @tarciziosilva and many more AI researchers are calling on organizations to provide basic protections and more equitable access for independent testing of Generative AI models.

If you agree, please sign and share the letter: https://t.co/zgEj1Csx6G

— Mozilla (@mozilla) March 5, 2024

in Software, Posted by log1h_ik