Researchers discover that telling AI to 'act like a Star Trek captain' leads to better performance on math problems


A paper reporting that large language models (LLMs) became better at solving math problems when given prompts that evoke Star Trek characters has been posted to arXiv, a repository of papers that have not been peer reviewed.


[2402.10949] The Unreasonable Effectiveness of Eccentric Automatic Prompts

https://arxiv.org/abs/2402.10949

AIs get better at maths if you tell them to pretend to be in Star Trek | New Scientist

https://www.newscientist.com/article/2419531-ais-get-better-at-maths-if-you-tell-them-to-pretend-to-be-in-star-trek/

VMware researchers Rick Battle and Teja Gollapudi varied the prompts given to the large language models (LLMs) that underlie AI chatbots, and then ran a benchmark test called GSM8K, which asks the model to solve elementary school-level math problems.

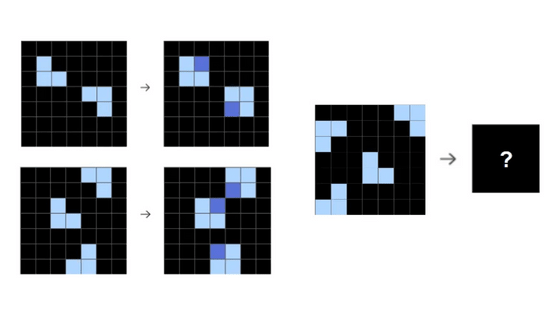

The research team fed 60 different initial prompts to three models: Mistral 7B, Llama 2-13B, and Llama 2-70B. These initial prompts were written by humans and set the AI to a specific character or way of thinking, such as 'You are an expert mathematician. Solve the following math problem. Take a deep breath and think carefully.' The team also had the AI automatically rewrite the initial prompts in an attempt to make them more effective.

The results showed that in almost all cases, the AI-improved prompts produced higher GSM8K scores than the initial human-created prompts.
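The comparison described above, scoring several candidate prompts on the same set of math problems, can be sketched roughly as follows. This is a minimal illustration and not the researchers' code: the `Model` callable, the answer-extraction rule (take the last number in the reply), and all function names are assumptions made for this example.

```python
import re
from typing import Callable, List, Optional, Tuple

# Hypothetical interface: takes (system_prompt, question) and returns the reply text.
Model = Callable[[str, str], str]


def extract_final_number(reply: str) -> Optional[float]:
    """Return the last number in the reply, or None if there is none (assumed rule)."""
    matches = re.findall(r"-?\d+(?:\.\d+)?", reply.replace(",", ""))
    return float(matches[-1]) if matches else None


def accuracy(system_prompt: str, problems: List[Tuple[str, float]], model: Model) -> float:
    """Fraction of problems whose extracted answer matches the reference answer."""
    if not problems:
        return 0.0
    correct = 0
    for question, answer in problems:
        predicted = extract_final_number(model(system_prompt, question))
        if predicted is not None and abs(predicted - answer) < 1e-6:
            correct += 1
    return correct / len(problems)


def best_prompt(prompts: List[str], problems: List[Tuple[str, float]], model: Model) -> Tuple[str, float]:
    """Score every candidate prompt and return the highest-scoring one with its score."""
    scores = {prompt: accuracy(prompt, problems, model) for prompt in prompts}
    winner = max(scores, key=scores.get)
    return winner, scores[winner]
```

In a real run, `model` would wrap a call to an LLM and `problems` would come from the GSM8K test set; both are left abstract here.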

In particular, Llama 2-70B achieved its highest GSM8K score when it was given a prompt to 'answer as if you were the captain of a Star Trek ship.' This prompt was generated by the AI itself and was not one of the initial prompts written by humans. When given the 'Star Trek' prompt, Llama 2-70B wrote out its answer to each problem as an entry in the 'captain's log.'

It's unclear why the AI decided that a prompt to become a Star Trek captain would be effective, but Battle speculates that it may be because 'there's a ton of information about Star Trek on the internet, and it often pops up along with other correct information.'

By J.D. Hancock

'At the end of the day, LLMs just combine weights and probabilities to produce a final result, so we never know what they're doing along the way,' said Catherine Flick, a computer scientist at Staffordshire University in the UK. 'One thing is for sure: this model is not a Trekkie.'


in Software, Posted by log1i_yk