Stability AI announces 'Stable Cascade', an image generation AI model that is fast, high quality, and easy to fine-tune even on consumer graphics cards

Stability AI, the developer of the image generation AI 'Stable Diffusion', has announced 'Stable Cascade', an image generation model that can generate higher-quality images faster than existing models. Stable Cascade is also notable because it can be trained and fine-tuned on consumer hardware.

Introducing Stable Cascade - Stability AI Japan

◆Mechanism of Stable Cascade

Stable Cascade is built on the image generation model 'Würstchen', which is characterized by low training costs and low VRAM usage during image generation.

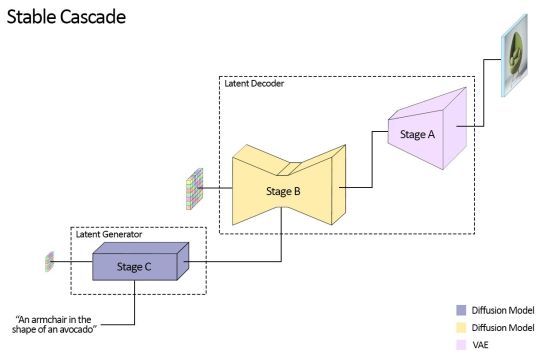

Stable Cascade is divided into three models: 'Stage A', 'Stage B', and 'Stage C'. Stage C generates image content that matches the text, and the latent decoder phase (Stage A and Stage B) produces the high-resolution image. Because Stage C is separated from Stage A and Stage B, fine-tuning such as ControlNet and LoRA training can be completed in Stage C alone. This reduces the cost of fine-tuning compared to existing models. Stability AI says, 'Training and fine-tuning on consumer hardware is easy,' emphasizing the low training cost.
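The stage split described above can be sketched in a few lines of Python. This is only an illustration, not Stability AI's code: the `Stage` class and helper names are hypothetical, the C, B, A ordering follows the upstream description of the architecture, and the compression factors (about 42 for Stable Cascade, 8 for Stable Diffusion's VAE) are assumptions taken from the public model description rather than from this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    role: str
    trainable_in_finetune: bool  # assumption: only Stage C is updated


# Generation order: Stage C first, then the latent decoder phase (B, then A).
PIPELINE = [
    Stage("Stage C", "text prompt -> compact latent", True),
    Stage("Stage B", "latent decoder phase", False),
    Stage("Stage A", "latent decoder phase", False),
]


def finetune_targets(pipeline):
    """Return the names of the stages a LoRA or ControlNet run would update."""
    return [s.name for s in pipeline if s.trainable_in_finetune]


def latent_side(image_side: int, compression: int) -> int:
    """Side length of a square latent for a given spatial compression factor."""
    return image_side // compression


print(finetune_targets(PIPELINE))
# Assumed factors: 42 for Stable Cascade, 8 for a Stable Diffusion style VAE.
print(latent_side(1024, 42), latent_side(1024, 8))
```

Under these assumptions, the stage being fine-tuned works on a far smaller latent than a conventional latent diffusion model does, which is one way to read the claim that fine-tuning costs less.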

Stage B is available in a 700-million-parameter version and a 1.5-billion-parameter version, and Stage C in a 1-billion-parameter version and a 3.6-billion-parameter version. Image generation with Stable Cascade requires 20GB of VRAM, but VRAM usage can be reduced by choosing the smaller models. However, models with fewer parameters may produce lower-quality images.
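To get a rough feel for how model selection affects memory, the sketch below estimates the size of the weights alone for each Stage B and Stage C combination. The parameter counts come from the figures above. The bytes-per-parameter values and the function names are assumptions for illustration, and the estimate ignores Stage A, the text encoder, activations, and framework overhead, so it is not a prediction of actual VRAM usage.

```python
# Bytes per parameter for common numeric formats (assumption for illustration).
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}

# Parameter counts as reported in the article.
STAGE_B = {"small": 700_000_000, "large": 1_500_000_000}
STAGE_C = {"small": 1_000_000_000, "large": 3_600_000_000}


def weight_gb(params: int, dtype: str = "fp16") -> float:
    """Approximate weight size in GB (1 GB = 1e9 bytes)."""
    return params * BYTES_PER_PARAM[dtype] / 1e9


def combo_weight_gb(b: str, c: str, dtype: str = "fp16") -> float:
    """Combined weight size of one Stage B and one Stage C variant."""
    return weight_gb(STAGE_B[b], dtype) + weight_gb(STAGE_C[c], dtype)


for b in STAGE_B:
    for c in STAGE_C:
        size = combo_weight_gb(b, c)
        print(f"Stage B {b} + Stage C {c}: about {size:.1f} GB of fp16 weights")
```

In half precision, the smallest pairing comes to roughly a third of the weight memory of the largest one, which illustrates the trade-off between VRAM usage and image quality.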

Stable Cascade's models, training code, and inference code will be released 'as soon as they are ready' under a non-commercial license.

◆Image generation performance

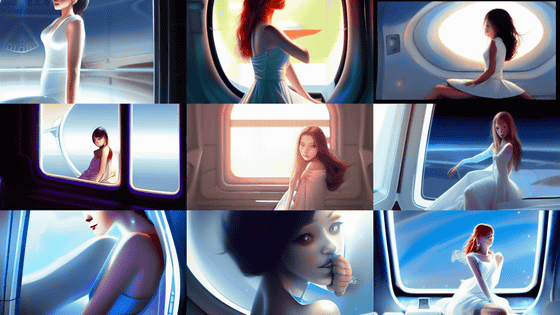

The image published on the Stable Cascade introduction page looks like this.

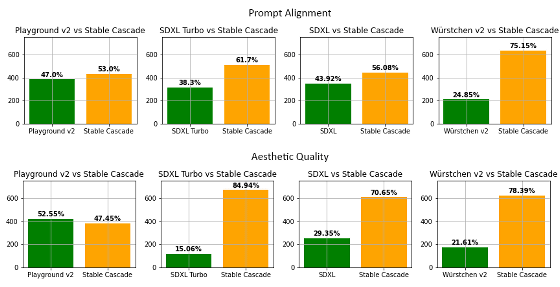

The graph below shows the results of Stability AI's evaluation of Stable Cascade's performance in terms of 'similarity between generated image and prompt' (upper row) and 'aesthetic quality of generated image' (lower row). Stable Cascade outperforms existing models such as Würstchen v2 and SDXL in terms of both prompt similarity and quality.

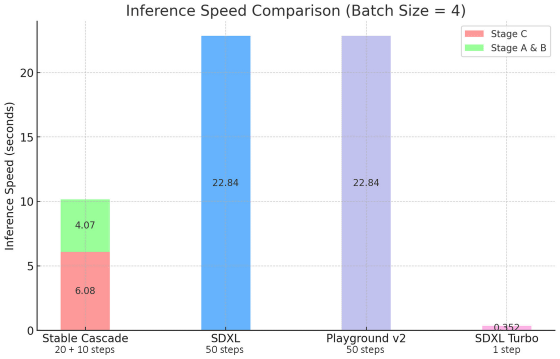

Below is a graph showing the time required to generate an image. Stable Cascade can generate images in less than half the time of SDXL.

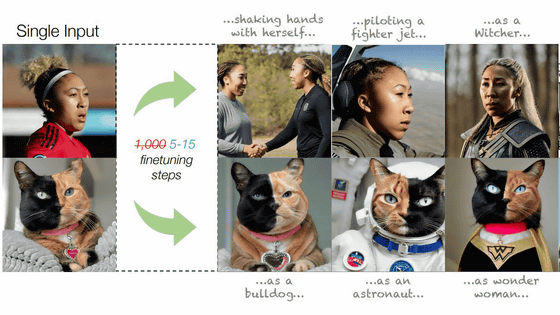

Note that Stable Cascade supports not only txt2img, which generates images from text, but also img2img, which generates images from images. In the images below, the leftmost image is the original image, and the four images on the right are the images generated by Stable Cascade.

◆ControlNet and LoRA code will also be released

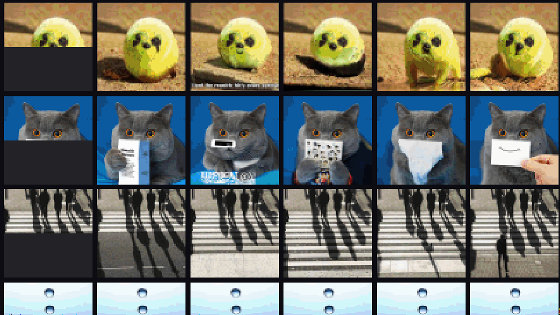

ControlNet and LoRA code will also be released alongside Stable Cascade. Below are some of the ControlNets scheduled for release.

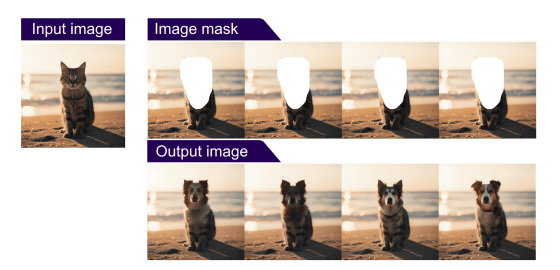

・Inpainting/outpainting

By inputting a partially masked image together with text, it is possible to fill the masked part of the image with generated content.

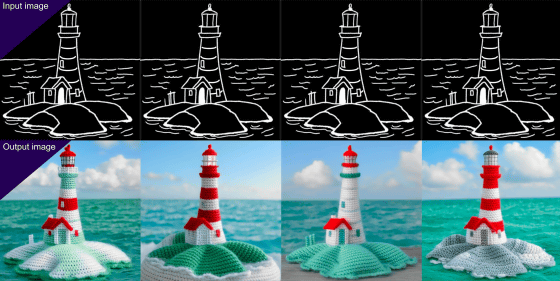

・Canny Edge

By inputting a contour image, you can generate an image that follows those contours.

・2x super resolution

Improves the resolution of generated images.
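As a rough illustration of the inpainting input described above, the sketch below builds a 'partially masked image' and then combines original and generated pixels using the mask. It is a generic toy example on nested lists, not Stable Cascade's ControlNet code. The function names are hypothetical, and the `generated` values are a stand-in for model output.

```python
def apply_mask(image, mask, fill=0):
    """Blank out pixels where mask is 1; this is the partially masked input."""
    return [
        [fill if m else px for px, m in zip(row, mask_row)]
        for row, mask_row in zip(image, mask)
    ]


def composite(original, generated, mask):
    """Keep original pixels where mask is 0, use generated pixels where mask is 1."""
    return [
        [g if m else o for o, g, m in zip(orig_row, gen_row, mask_row)]
        for orig_row, gen_row, mask_row in zip(original, generated, mask)
    ]


original = [[10, 20, 30], [40, 50, 60]]
mask = [[0, 1, 1], [0, 0, 1]]        # 1 marks the region to be filled
generated = [[1, 2, 3], [4, 5, 6]]   # stand-in for what a model would produce

masked_input = apply_mask(original, mask)
result = composite(original, generated, mask)
print(masked_input)
print(result)
```

Outpainting can be thought of in the same way, with the mask covering a border added around the original image rather than a region inside it.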

in Software, Posted by log1o_hf