Version 2.0 of image generation AI ``Stable Diffusion'' has appeared, and the function to increase the resolution of the output image and insert a digital watermark

British startup Stability AI has announced that it has released `` Stable Diffusion 2.0-v '', which is version 2.0 of image generation AI

Stable Diffusion 2.0 Release — Stability.Ai

https://stability.ai/blog/stable-diffusion-v2-release

GitHub - Stability-AI/stablediffusion: High-Resolution Image Synthesis with Latent Diffusion Models

https://github.com/Stability-AI/stablediffusion

Versions 1.1, 1.2, 1.3, and 1.4 of Stable Diffusion, an image generation AI published as open source, have been released so far. Also, although version 1.5 was not released as open source, it was available in DreamStudio , a paid image generation service operated by Stablitiy AI. Also, Runway ML, which developed Stable Diffusion jointly with Stability AI, has released a version 1.5 model separately from Stability AI.

Version 2.0, which was released this time, has the same number of U-Net parameters as version 1.5, but uses OpenAI's OpenCLIP-ViT/H as a text encoder to learn from scratch. In addition, Stable Diffusion 2.0-v has been fine-tuned from ``Stable Diffusion 2.0-base'', which was trained as a noise prediction model with a default resolution of 512 x 512 pixels, and a model that upconverts the resolution by a factor of 4 has been added. It seems that an image of 2048 × 2048 pixels can also be output.

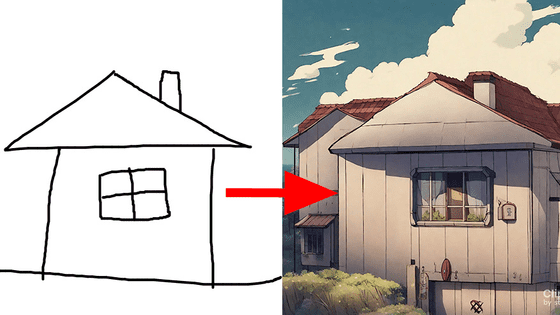

In addition, in order to strengthen the img2img function that can input not only text but also images as prompts, a function called 'depth2img' that incorporates

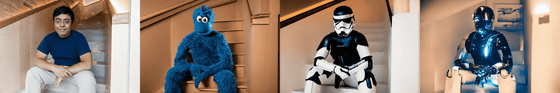

Additionally, Stable Diffusion 2.0-v now supports reference sampling scripts. This is to incorporate a 'digital watermark' into the image that indicates that the image was generated by AI. Some of the photographs and illustrations generated by Stable Diffusion are of such high quality that it is difficult to distinguish whether they were made by humans or AI, and the works actually output by AI have won gold awards at art fairs. To some extent. By adding a digital watermark to the AI-generated image, even people other than the creator can distinguish it.

At the time of writing the article, the Stable Diffusion 2.0-v model has not been released as open source, but it can be used with DreamStudio. You can also experience a demo of Stable Diffusion 2.0-v on the AI platform Hugging Face. However, at the time of article creation, access seemed to be concentrated, 'This application is too busy.' Was displayed, and it was not possible to actually generate the image.

Stable Diffusion 2 - a Hugging Face Space by stabilityai

https://huggingface.co/spaces/stabilityai/stable-diffusion

Related Posts:

in Software, Web Service, Creation, Posted by log1i_yk