'Taylor Swift' is no longer searchable on X (formerly Twitter) to prevent the spread of deepfake porn

X is being flooded with graphic Taylor Swift AI images - The Verge

https://www.theverge.com/2024/1/25/24050334/x-twitter-taylor-swift-ai-fake-images-trending

Swift retaliation: Fans strike back after explicit deepfakes flood X | TechCrunch

https://techcrunch.com/2024/01/25/taylor-swift-ai-deepfake-fan-response/

X makes Taylor Swift's name unsearchable amid viral deep fakes | Mashable

https://mashable.com/article/taylor-swift-search-block-on-x

Deepfakes are fake images and videos, created with AI technology, that can be mistaken for real ones. Soon after deepfakes appeared, pornographic videos made with them (fake porn or deepfake porn) became a problem. Fake pornography is prohibited by law in some countries and regions, and some say it is even more damaging than revenge porn.

AI-made "pornography of famous actresses" is increasing rapidly - GIGAZINE

Fake porn of Taylor Swift has reportedly been spreading rapidly on X since around the fourth week of January 2024. One post in particular received more than 45 million views, was reposted more than 24,000 times, and garnered hundreds of thousands of likes and bookmarks. According to The Verge, the post stayed up on X for about 17 hours before it was deleted.

To protect Taylor Swift, the victim of this fake pornography, fans have made thousands of posts on X with the hashtag "#ProtectTaylorSwift" criticizing fake pornography.

No woman, or person for that matter, deserves to have AI tech used to generate fake naked images of them, regardless of their celebrity status or net worth. Any justification for it is wrong and will have consequences for all of us.

— Amee Vanderpool (@girlsreallyrule) January 25, 2024

Draw the line now. #ProtectTaylorSwift

Taylor Swift is not the only victim of fake pornography; celebrities in other countries and even ordinary high school girls have also been targeted.

Police begin investigation after high school boy shared AI-generated "fake nude photo of female high school classmate" in group chat - GIGAZINE

According to research by Home Security Heroes, a company focused on identity theft and digital harm, 98% of all deepfake videos on the internet as of 2023 were pornographic, and 99% of the people targeted in them were women.

In England and Wales, it was decided in June 2023 that sharing deepfake pornography would be treated as a crime, with the UK government announcing that it would crack down on abusers, predators, and former partners who share such images.

In the United States, 48 states and the District of Columbia have enacted laws against revenge porn, and some states, such as Illinois, Virginia, New York, and California, are working to amend those laws so that they also cover fake porn.

However, Mashable points out that many countries and regions are not seriously tackling the issue of fake pornography. #MyImageMyChoice, a campaign that amplifies the voices of people who have suffered sexual abuse online, says, "Most governments are not taking action. In most countries, there is no framework for who is responsible for monitoring the internet."

X, where the fake pornography of Taylor Swift spread, has a "policy regarding synthetic or manipulated media," and posting fake pornography is explicitly prohibited.

In response to the spread of the fake pornography of Taylor Swift, @Safety, the official account that communicates X's safety measures, posted: "Posting Non-Consensual Nudity (NCN) images is strictly prohibited on X, and we have a zero-tolerance policy toward such content (a policy that imposes a penalty every time a set rule is violated). Our teams are actively removing all identified images and taking appropriate action against the accounts responsible for posting them. We are closely monitoring the situation so that we can respond immediately to any further violations and remove the content. We are committed to maintaining a safe and respectful environment for all users."

Posting Non-Consensual Nudity (NCN) images is strictly prohibited on X and we have a zero-tolerance policy towards such content. Our teams are actively removing all identified images and taking appropriate actions against the accounts responsible for posting them. We're closely …

— Safety (@Safety) January 26, 2024

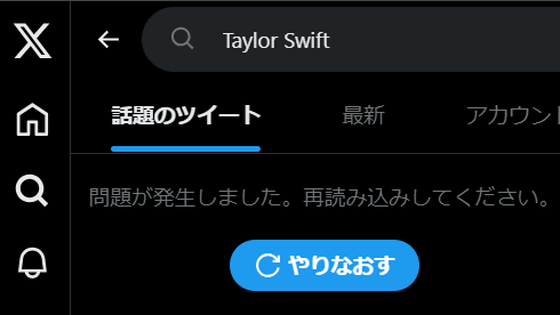

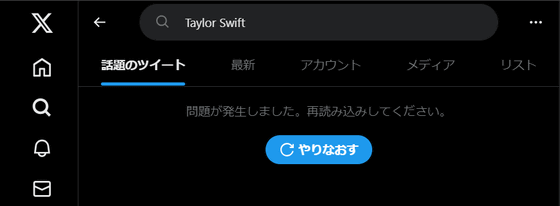

Since then, it has no longer been possible to search for 'Taylor Swift' on X. At the time of writing, searching for 'Taylor Swift' still displays the message 'Something went wrong. Try reloading.' instead of any results, as shown in the image. Searches for 'Taylor Swift AI' and 'Taylor AI' likewise returned no results.

However, searches for terms such as 'Swift AI', 'Taylor AI Swift', or 'Taylor Swift deepfake' still return results. Because AI images can be generated so quickly and have such an impact, social media platforms including X have seen a surge in AI-generated images in recent months, and Mashable points out that major platforms are struggling to contain such content. Mashable adds that "making 'Taylor Swift' unsearchable suggests that X does not have a way to deal with fake porn on its platform," indicating that there may be no fundamental measures in place.

In response to the incident, White House Press Secretary Karine Jean-Pierre said, "The circulation of false images of Taylor Swift is alarming. President Biden is committed to reducing the risk of fake AI images through executive action. The work to find real solutions will continue." She also said that legislation is needed to address fake pornography.

The circulation of false images of Taylor Swift are alarming. We know that incidences like this disproportionately impact women and girls. @POTUS is committed to ensuring we reduce the risk of fake AI images through executive action. The work to find real solutions will continue. pic.twitter.com/IOIl9ntKtP

— Karine Jean-Pierre (@PressSec) January 26, 2024

Fraudulent advertising videos created with deepfakes have also become a problem on YouTube.

A fraudulent advertising video of a fake celebrity created by AI has been viewed over 195 million times on YouTube - GIGAZINE


in Web Service, Posted by logu_ii