Report: the "Taylor Swift deepfake porn" spreading online was generated with Microsoft's generative AI tool "Microsoft Designer"

AI-generated fake pornographic images of Taylor Swift are spreading online and have become a serious problem. After it emerged that Microsoft Designer, which Microsoft offers free of charge, was used to generate these images, the company added restrictions to the service to prevent it from generating images that resemble celebrities.

Microsoft Closes Loophole That Created AI Porn of Taylor Swift

https://www.404media.co/microsoft-closes-loophole-that-created-ai-porn-of-taylor-swift/

Microsoft restricts Designer AI used to make Taylor Swift deepfakes | VentureBeat

https://venturebeat.com/business/microsoft-adds-new-restrictions-to-designer-ai-used-to-make-taylor-swift-deepfakes/

Microsoft CEO responds to AI-made Taylor Swift fake nude images

https://www.nbcnews.com/tech/tech-news/taylor-swift-nude-deepfake-ai-photos-images-rcna135913

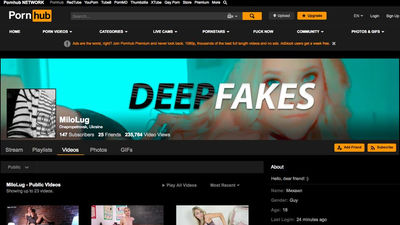

Since around the fourth week of January 2024, deepfakes of Taylor Swift (fake images and videos created with AI technology that can be mistaken for the real thing) have been spreading on X (formerly Twitter). The material being spread is pornographic content created with deepfake technology, known as fake porn or deepfake porn. Fake pornography is prohibited by law in some regions, and some argue that it is more damaging than revenge porn. Fake pornography has existed since around 2018, when deepfakes became popular, but the victimization of Taylor Swift drew widespread attention, to the point that the White House addressed the issue in the United States.

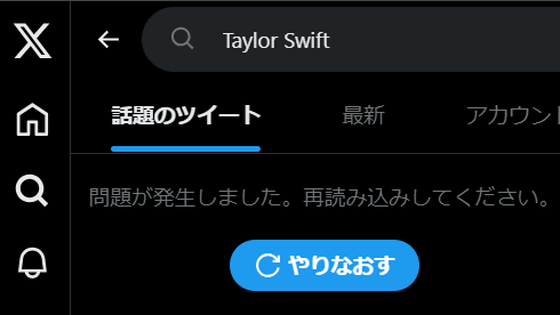

'Taylor Swift' is no longer searchable on X (formerly Twitter) to prevent the spread of deep fake porn - GIGAZINE

404 Media reports that the users who created the Taylor Swift fake porn were using Microsoft's generative AI tool "Microsoft Designer". According to the report, the creators were communicating in a group chat on Telegram, and tracing that chat back revealed that they were using Microsoft Designer to generate the images.

Microsoft Designer had built-in safeguards intended to prevent users from creating sexually explicit images, but some users were apparently able to circumvent them and create fake porn of Taylor Swift. According to 404 Media, it was possible to generate fake porn of celebrities by crafting prompts that deliberately misspelled a celebrity's name slightly or that avoided sexual terminology altogether. Microsoft has since closed this loophole, making it difficult to create fake porn of celebrities with Microsoft Designer. However, VentureBeat points out that another loophole could still be used to circumvent the restrictions and generate fake porn.

Regarding the possibility that Microsoft Designer was misused to create fake porn of Taylor Swift, the company said in a statement to 404 Media, "Our code of conduct prohibits the use of our tools to create adult content or non-consensual intimate content. Repeated attempts to create content that violates our policies may result in loss of access to the service. We strive to reduce abuse of our systems and make them more secure. We have a large team working on developing guardrails and other safety systems that align with responsible AI principles, including content filtering, operational monitoring, and abuse detection to help create a safe environment."

Additionally, following 404 Media's report, Microsoft issued a statement saying, "We take these reports seriously and are committed to providing a safe experience for everyone. We are investigating these reports. However, we were unable to reproduce the explicit images contained in these reports. Our safety filters against explicit content are working, and we have found no evidence that the filters were bypassed. We have taken precautions, strengthened our text filtering prompts, and taken steps to address abuse of our services."

In addition, some AI critics, such as Neil Turkewitz, former executive vice president of the Recording Industry Association of America, have criticized Microsoft's response as insufficient.

Microsoft addressing output depicting celebrities isn't the flex it thinks it is. What about the millions of women & children who don't have platforms to raise their concerns? MS needs to address input—allowing images/text only on the basis of consent. https://t.co/E4Qdh3GsWf

— neil turkewitz (@neilturkewitz) January 29, 2024

Prior to 404 Media's report, Microsoft CEO Satya Nadella spoke out about the Taylor Swift fake porn in an interview with NBC News, saying, "We need to move quickly to combat non-consensual, sexually explicit deepfakes. We all benefit when the world is safe, so I don't think anyone wants an online world that isn't completely safe for both content creators and content consumers. I think we have an obligation to create a safe online world."

It is reported that Taylor Swift is considering taking legal action over fake pornography, but it is unclear who (or what) will be the target of the lawsuit.

in Web Service, Posted by logu_ii