Bill introduced that would allow lawsuits over AI-generated deepfake pornography

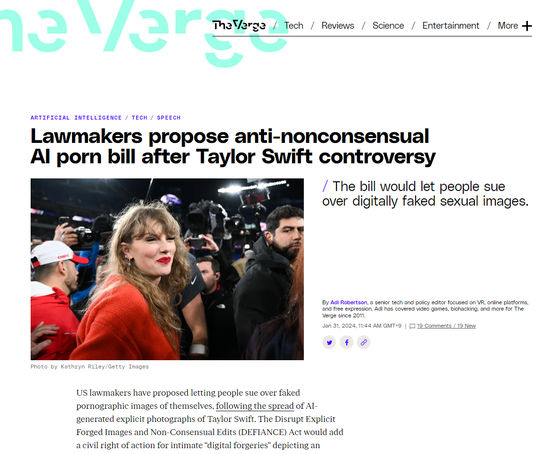

Elaborate fake images and videos created using AI are called deepfakes, and the pornographic variety is known as "deepfake porn" (fake porn). At the end of January 2024, fake pornography of singer Taylor Swift spread on social media platforms such as X (formerly Twitter) and became a major problem. In response, a bill targeting fake pornography has been introduced, and efforts toward regulation are reported to be gaining momentum.

Lawmakers propose anti-nonconsensual AI porn bill after Taylor Swift controversy - The Verge

https://www.theverge.com/2024/1/30/24056385/congress-defiance-act-proposed-ban-nonconsensual-ai-porn

Taylor Swift AI images prompt US bill to tackle nonconsensual, sexual deepfakes | Deepfake | The Guardian

https://www.theguardian.com/technology/2024/jan/30/taylor-swift-ai-deepfake-nonconsensual-sexual-images-bill

From around the fourth week of January 2024, fake porn of Taylor Swift began spreading on X. Some of the images were viewed more than 45 million times, and at the time of writing, X has responded by blocking searches for "Taylor Swift."

'Taylor Swift' is no longer searchable on X (formerly Twitter) to prevent the spread of deep fake porn - GIGAZINE

Because it was reportedly used to create the fake porn of Taylor Swift, Microsoft has added restrictions so that its generative AI tool "Microsoft Designer" cannot generate images resembling celebrities.

It has been pointed out that "Taylor Swift's deep fake porn" spread on the Internet was generated using Microsoft's generative AI tool "Microsoft Designer" - GIGAZINE

In the United States, 48 states and the District of Columbia have enacted anti-revenge porn laws. In response to the Taylor Swift incident, some states, including Illinois, Virginia, New York, and California, are amending those laws so that they also cover fake pornography.

In response to the spread of Taylor Swift's fake porn, US lawmakers have proposed the Disrupt Explicit Forged Images and Non-Consensual Edits (DEFIANCE) Act, which would allow people depicted in fake pornography to sue those responsible. The bill would give victims the right to bring civil lawsuits over fake sexually explicit images that depict an identifiable individual and were created without that person's consent. Financial damages could be claimed from anyone who intentionally produced or possessed the material for the purpose of disseminating it.

The DEFIANCE Act was introduced by Democratic Sen. Dick Durbin and is supported by Republican Sens. Lindsey Graham and Josh Hawley, and Democratic Sen. Amy Klobuchar. It builds on the Violence Against Women Act (VAWA), which was reauthorized in 2022 under President Joe Biden and provides a similar right of action for explicit images that are not fabricated.

Senator Durbin and his colleagues, who introduced the bill, describe it as a response to the rapidly increasing volume of digitally manipulated explicit fake pornography. They cite the Taylor Swift incident as a specific example of how fake pornography is used to exploit and harass people, including politicians.

In addition, when the fake porn of Taylor Swift was spreading, White House press secretary Karine Jean-Pierre said that the circulation of false images of Taylor Swift was alarming, that incidents like this disproportionately impact women and girls, and that President Biden is committed to reducing the risk of fake AI images through executive action. She said the work to find real solutions will continue, and added that laws are needed to regulate fake pornography.

The circulation of false images of Taylor Swift are alarming. We know that incidences like this disproportionately impact women and girls. @POTUS is committed to ensuring we reduce the risk of fake AI images through executive action. The work to find real solutions will continue. pic.twitter.com/IOIl9ntKtP

— Karine Jean-Pierre (@PressSec) January 26, 2024

Although the DEFIANCE Act is described as a response to AI-generated fake pornography, it treats any sexual image created with software, machine learning, AI, or other computer-generated or technological means as a fake image that can be the subject of a lawsuit. Images created with Photoshop would therefore also count as fake images, and adding a label indicating that the image is not genuine would not exempt the creator from liability.

However, The Verge points out that this type of regulation raises big questions about artistic expression, since it could allow powerful people to take legal action over political parody and creative fiction. The DEFIANCE Act may raise some of the same questions, although its scope is significantly more limited, and it still faces an uphill battle to pass.

in Note, Posted by logu_ii