How does the 'NVIDIA L40S', a possible replacement for the 'NVIDIA H100' that is considered optimal for machine learning, perform?

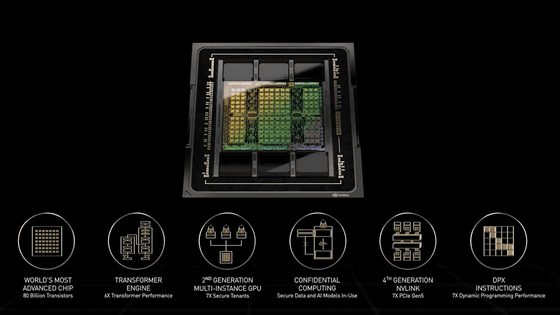

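The NVIDIA H100, which costs several million yen or more per unit, is popular because it is ideal for machine learning. For example, the charitable organization founded by Facebook's Mark Zuckerberg is conducting advanced medical research on a system with over 1,000 NVIDIA H100 units. The hardware news site ServeTheHome has explained the performance of the NVIDIA L40S, which can serve as an alternative to the H100.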

NVIDIA L40S is the NVIDIA H100 AI Alternative with a Big Benefit

https://www.servethehome.com/nvidia-l40s-is-the-nvidia-h100-ai-alternative-with-a-big-benefit-supermicro/

The NVIDIA H100 is a high-end GPU that is both in very high demand and very expensive. To meet the demand for machine learning hardware, NVIDIA also sells the 'L40S'. It is essentially a variant of the graphics-oriented 'L40' and can be obtained for about half the price of the H100.

According to Kennedy of ServeTheHome, the NVIDIA H100 80GB SXM5 remains the GPU of choice for training foundation models such as the ones behind ChatGPT. However, once a foundation model has been trained, lower-cost GPUs can handle customizing it with domain-specific data and running inference.

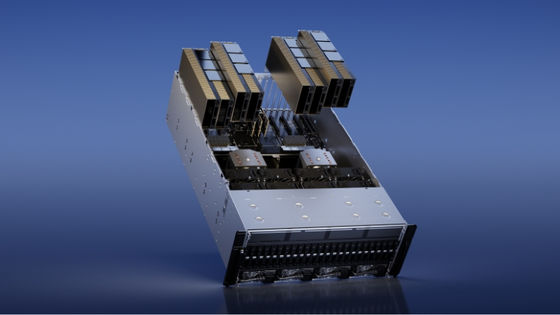

There are currently three mainstream GPUs used for high-end inference: the NVIDIA H100, the NVIDIA A100, and the new NVIDIA L40S. The L40S differs markedly from the other two, because it is derived from the L40, a visualization GPU, and has been tuned for AI.

According to Kennedy, the L40S is a significantly improved GPU for AI training and inference, but it is not the right choice when large memory capacity or high memory bandwidth is required. On paper it has much less memory than the A100, but it supports the NVIDIA Transformer Engine and FP8. Because FP8 uses 1 byte per value instead of FP16's 2 bytes, it halves the size of the data, so the L40S can do the same work with less memory.
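To put the FP8 point in numbers, here is a rough back-of-the-envelope sketch. It assumes a nominal 7 billion parameters for LLaMA 7B, 2 bytes per parameter in FP16 and 1 byte in FP8, and counts only the model weights; the KV cache, activations, and framework overhead are ignored, so real memory usage will be higher. The 48GB figure is the L40S memory capacity cited in the article.

```python
# Rough weight-memory estimate for a model with a given parameter count.
# Assumption: weights only; KV cache, activations, and runtime overhead are ignored.

BYTES_PER_PARAM = {"fp16": 2, "fp8": 1}

L40S_MEMORY_GB = 48  # L40S memory capacity cited in the article


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Return the memory needed to hold the weights, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    params = 7e9  # LLaMA 7B, using the nominal 7 billion parameters
    for dtype in ("fp16", "fp8"):
        gb = weight_memory_gb(params, dtype)
        headroom = L40S_MEMORY_GB - gb
        print(f"{dtype}: {gb:.1f} GB of weights, {headroom:.1f} GB left of {L40S_MEMORY_GB} GB")
```

Under these assumptions the weights take about 14 GB in FP16 and about 7 GB in FP8, leaving the rest of the 48GB for the KV cache and batching.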

The results of inference using LLaMA 7B, a large language model, are shown below; blue is the H100 and black is the L40S. Kennedy addresses the question, "Why the L40S when the H100 is faster?" With FP8 in particular, 48GB of memory is sufficient, and even with FP16 the L40S performs better than the A100, including the SXM version. The H100 PCIe is generally 2.0 to 2.7 times faster than the L40S, but it is also 2.6 times more expensive. Another reason, he said, is that the L40S is available much sooner than the H100.
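The price argument can be checked with simple arithmetic: dividing the H100 PCIe speedup by its price ratio gives its performance per unit of cost relative to the L40S. This sketch uses only the 2.0 to 2.7x speedup and 2.6x price figures quoted in the article, and assumes cost means purchase price alone (power, hosting, and availability are not modeled).

```python
def relative_perf_per_price(speedup: float, price_ratio: float) -> float:
    """H100 PCIe performance per unit of cost, relative to the L40S.

    speedup: how many times faster the H100 PCIe is than the L40S.
    price_ratio: how many times more expensive the H100 PCIe is.
    A result below 1.0 means the L40S delivers more performance per unit of cost.
    """
    return speedup / price_ratio


if __name__ == "__main__":
    PRICE_RATIO = 2.6  # price ratio quoted in the article
    for speedup in (2.0, 2.7):  # speedup range quoted in the article
        r = relative_perf_per_price(speedup, PRICE_RATIO)
        print(f"H100 PCIe {speedup}x faster -> {r:.2f}x the L40S's performance per price")
```

With these inputs the ratio comes out to roughly 0.77 at the low end and 1.04 at the high end, so on purchase price alone the H100 PCIe only about breaks even with the L40S in its best case.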


in Hardware, Posted by log1p_kr