'Nightshade' is a training-prevention tool that 'poisons' illustrations and photos to disrupt the training of image-generation AI

A research team at the University of Chicago is developing a tool called 'Nightshade' that prevents AI from learning from images. Processing an image with Nightshade can disrupt AI training without noticeably changing how the image looks.

[2310.13828] Prompt-Specific Poisoning Attacks on Text-to-Image Generative Models

Meet Nightshade, the new tool allowing artists to 'poison' AI models | VentureBeat

https://venturebeat.com/ai/meet-nightshade-the-new-tool-allowing-artists-to-poison-ai-models-with-corrupted-training-data/

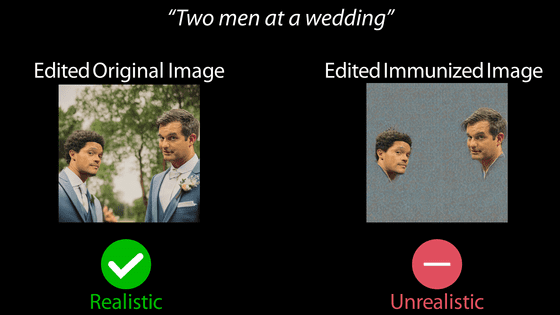

Research on image-generation AI is advancing rapidly. For creators who do not want their work used to train such models, one proposed approach is to 'alter images in ways that are hard for the human eye to notice but that hinder AI training,' and tools based on this idea are under active development. Nightshade is one of these training-prevention tools. It is being developed by the same University of Chicago research team that previously created the training-prevention tool Glaze.

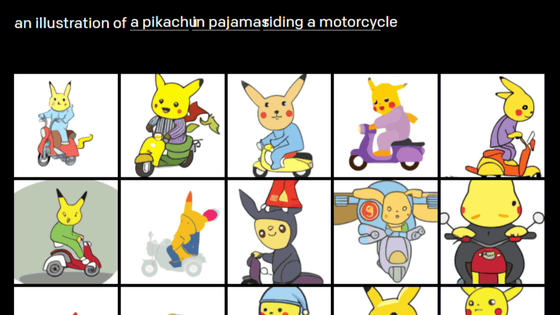

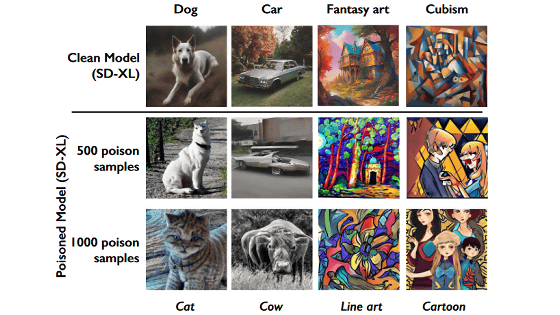

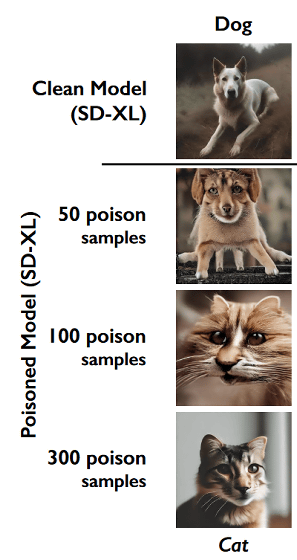

The research team describes images processed by Nightshade as 'poisoned.' Nightshade can alter an image so that an AI misidentifies it as containing a different, specific subject, for example 'making the AI mistake an image of a dog for a cat.' Furthermore, an AI trained on dog images that Nightshade had processed to be misidentified as cats ended up generating a cat even when it was prompted to generate a dog.
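The idea of nudging a dog image so that an AI "sees" a cat can be illustrated with a toy sketch. This is not Nightshade's code, and everything below is a hypothetical assumption made for illustration: the 4-pixel "image", the fixed linear feature extractor `W`, and the per-pixel budget `EPS`. The sketch uses projected gradient descent to find a small, bounded perturbation that moves the image's features toward those of a "cat" anchor image; the simple per-pixel bound stands in for whatever perceptual constraint the real tool uses.

```python
# Toy illustration of feature-space poisoning. NOT Nightshade's actual method.
# Hypothetical assumptions: a 4-pixel "image", a fixed linear feature
# extractor W, and a per-pixel (L-infinity) budget EPS that stands in for
# a perceptual "hard to notice" constraint.

W = [[1.0, 0.0, 1.0, 0.0],
     [0.0, 1.0, 0.0, 1.0]]
EPS = 0.05    # maximum change allowed per pixel
STEP = 0.1    # gradient step size
ITERS = 50    # number of gradient steps


def features(x):
    """Apply the toy linear feature extractor W to image x."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def feature_dist2(x, target_feat):
    """Squared Euclidean distance between features(x) and target_feat."""
    return sum((f - t) ** 2 for f, t in zip(features(x), target_feat))


def poison(x, anchor):
    """Projected gradient descent: find delta with |delta_j| <= EPS that
    moves features(x + delta) toward features(anchor)."""
    target_feat = features(anchor)
    delta = [0.0] * len(x)
    for _ in range(ITERS):
        xp = [xi + di for xi, di in zip(x, delta)]
        resid = [f - t for f, t in zip(features(xp), target_feat)]
        # Gradient of ||W(x + delta) - W anchor||^2 w.r.t. delta is 2 W^T resid.
        grad = [2 * sum(W[r][j] * resid[r] for r in range(len(W)))
                for j in range(len(x))]
        for j in range(len(x)):
            d = delta[j] - STEP * grad[j]
            d = max(-EPS, min(EPS, d))           # stay within the budget
            d = max(-x[j], min(1.0 - x[j], d))   # keep pixel in [0, 1]
            delta[j] = d
    return [xi + di for xi, di in zip(x, delta)]


dog = [0.2, 0.8, 0.3, 0.7]   # hypothetical "dog" image
cat = [0.9, 0.1, 0.8, 0.2]   # hypothetical "cat" anchor image
poisoned = poison(dog, cat)

print("max pixel change:", max(abs(p - d) for p, d in zip(poisoned, dog)))
print("feature distance to cat, before:", feature_dist2(dog, features(cat)))
print("feature distance to cat, after: ", feature_dist2(poisoned, features(cat)))
```

In this toy, no pixel moves by more than `EPS`, yet the poisoned image's features end up closer to the cat's than the original's were. The intuition, as the article describes the effect, is that a model trained on many such images captioned "dog" would drift toward associating "dog" with cat-like features. Real feature extractors are nonlinear and high-dimensional, so treat this only as a rough picture.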

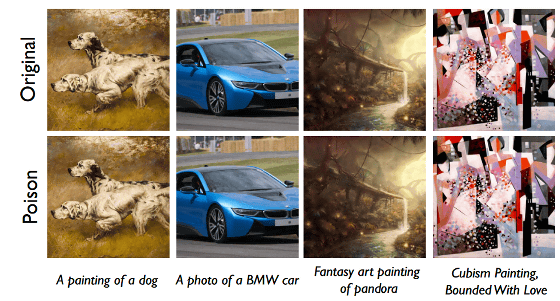

In the image below, the top row shows the original images and the bottom row shows the same images poisoned with Nightshade. Even on close inspection, you cannot tell the two apart.

Below is an example showing the effect of having an AI learn images poisoned with Nightshade.

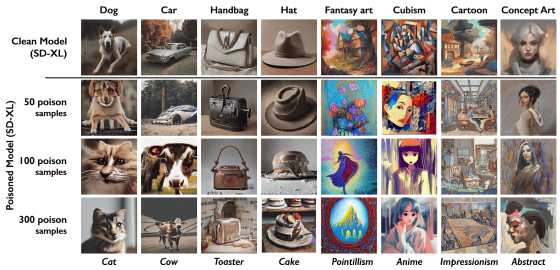

Below are further examples. Besides turning a dog into a cat, Nightshade can also turn a car into a cow, a handbag into a toaster, and a hat into a cake.

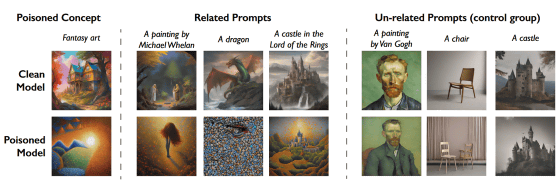

Nightshade's effect also extends to concepts related to the one being poisoned. For example, if images are processed to disrupt the prompt 'fantasy art', the related prompts 'A painting by Michael Whelan', 'A dragon', and 'A castle in the Lord of the Rings' are also disrupted. Unrelated prompts such as 'A painting by Van Gogh', 'A chair', and 'A castle', on the other hand, are not affected.

The research team plans to make Nightshade available as an option for Glaze in the near future.

Added at 16:14, October 24, 2023:

When we contacted Ben Y. Zhao, a member of the research team, he told us that 'a Japanese version of Glaze is currently being created.'

in Software, Posted by log1o_hf