"Nightshade," a tool that adds "poison" to images to stop AI from learning from them, is now publicly available for anyone to use

On January 19, 2024, Nightshade, a tool developed by a research team at the University of Chicago to prevent AI from learning from images, was released to the public, and anyone can now download it.

Nightshade: Protecting Copyright

Today is the day. Nightshade v1.0 is ready. Performance tuning is done, UI fixes are done.

— Glaze at UChicago (@TheGlazeProject) January 19, 2024

You can download Nightshade v1.0 from https://t.co/knwLJSRrRh

Please read the what-is page and also the User's Guide on how to run Nightshade. It is a bit more involved than Glaze

Glaze - What is Glaze

https://glaze.cs.uchicago.edu/what-is-glaze.html

AI-poisoning tool Nightshade now available for artists to use | VentureBeat

https://venturebeat.com/ai/nightshade-the-free-tool-that-poisons-ai-models-is-now-available-for-artists-to-use/

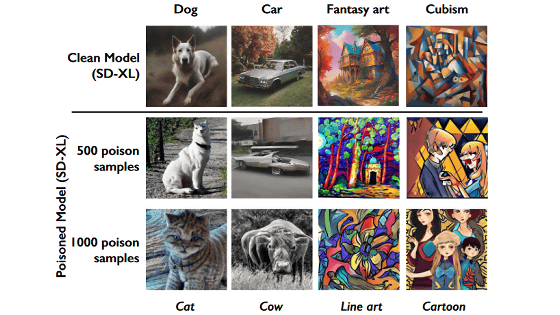

Developed by a team led by Professor Ben Zhao of the University of Chicago, Nightshade is a tool that "makes changes to images that are difficult for the human eye to notice in order to interfere with AI training." The same research team announced an AI training inhibition tool called Glaze in March 2023. According to the team, Glaze was developed as a "defensive tool," while Nightshade was developed as an "offensive tool."

Glaze applies a process called "perturbation" to the original image so that AI cannot learn the artist's style and fails to imitate it. Nightshade, by contrast, is not a defense against style imitation: it can fundamentally disrupt AI training by distorting how features in an image are represented. The research team describes this mechanism as "putting 'poison' on the image."

According to the research team, even if an image processed with Nightshade is cropped, resampled, or compressed, the poison is only minimally affected.

Highly recommend you read the User's Guide to understand how to use the poison tag.

— Glaze at UChicago (@TheGlazeProject) January 19, 2024

NS is very similar to Glaze in general tools used. So the usual robustness applies. Cropping, resampling, compression etc etc have minimal impact on NS poison.

Have fun!

How Nightshade works, along with examples of images it actually produces, is covered in detail in the following article.

"Nightshade" is a learning prevention tool that can poison illustrations and photographs and inhibit the learning of image generation AI - GIGAZINE

According to the research team, Nightshade uses the same perturbation budget as Glaze, so its changes are as visible as, or less visible than, Glaze's, and it also allows lower-intensity settings than Glaze.

Same perturbations budget. So mathematically it's identical or less visible than glaze. We actually allow lower intensity effects than glaze. Less poison is ok but less protection is not. But you can look in the paper for examples. https://t.co/0mIZgOl1Fp

— Glaze at UChicago (@TheGlazeProject) January 19, 2024
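A "perturbation budget" caps how much a tool is allowed to change an image. The sketch below illustrates that idea only, assuming a simple per-pixel limit (an L-infinity bound) on a toy list of grayscale values from 0 to 255. It is not Glaze's or Nightshade's algorithm; the budgets and visibility measures the tools actually use are described in the team's papers and are not reproduced here. The helpers `apply_budget` and `max_change` are hypothetical names.

```python
# Illustrative sketch only: a per-pixel (L-infinity) perturbation budget.
# NOT Glaze's or Nightshade's actual method; all names are hypothetical.

def apply_budget(original, perturbed, budget):
    """Clamp each pixel of `perturbed` to within +/-budget of `original`,
    and to the valid 0..255 range."""
    clipped = []
    for o, p in zip(original, perturbed):
        low = max(0, o - budget)
        high = min(255, o + budget)
        clipped.append(min(max(p, low), high))
    return clipped


def max_change(original, modified):
    """Largest absolute per-pixel difference (the L-infinity distance)."""
    return max(abs(o - m) for o, m in zip(original, modified))


original = [10, 128, 250, 0]   # toy grayscale "image"
raw_edit = [30, 100, 255, 5]   # hypothetical unconstrained "poison" edit

for budget in (8, 4):          # e.g. a default vs. a lower-intensity setting
    result = apply_budget(original, raw_edit, budget)
    print(budget, result, max_change(original, result))
# prints:
# 8 [18, 120, 255, 5] 8
# 4 [14, 124, 254, 4] 4
```

Under this simplified model, two tools sharing the same budget can never change a pixel by more than that cap, and a lower-intensity setting (a budget of 4 instead of 8 here) only tightens it, which mirrors the team's point that Nightshade is "identical or less visible than glaze."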

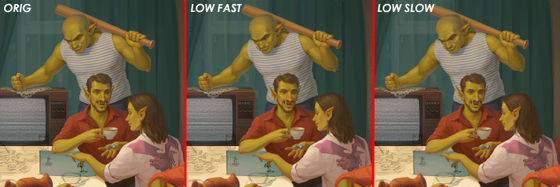

On the other hand, there are cases where images processed with Nightshade change so much that the difference is visible to the human eye. Below is an example posted by 2D artist Повышенная синицевость. The left image is the original, the center image was output with Nightshade's "Low Fast" setting, and the right image with the "Low Slow" setting. When you zoom in, you can see that the background curtains and other areas in the Nightshade-processed images look rougher than in the original.

In response, the research team said: "This can happen with complex renderings like this image. If you think the change is too much for your art style, there's no need to use Nightshade."

Short answer: yes. But you can also run on longer rendering. And since it's poison, you don't *need* to use it if it looks too strong for your art style.

— Glaze at UChicago (@TheGlazeProject) January 19, 2024

At the time of writing, there were also claims that Nightshade "hardly works with previous-generation training processes" and "doesn't work at all with the latest training processes," as well as the view that "although it is academically interesting, it may be a case of exaggerating an unrealistic result."

Nightshade is available for Windows and for Macs with Apple silicon, and anyone can download it. Because demand for Nightshade was surging at the time of writing, downloads were reportedly taking a long time, so the research team recommends using the fast mirror links for the Nightshade binaries.

The demand for nightshade has been off the charts. Global Requests have actually saturated our campus network link. (No dos attack, just legit downloads). We are adding two fast mirror links for nightshade binaries.

— Glaze at UChicago (@TheGlazeProject) January 20, 2024

Win: https://t.co/rodLkW0ivK

Mac: https://t.co/mEzciopGAE

Looking ahead, the research team aims to develop an integrated version of Glaze and Nightshade to further strengthen protection against AI training.

Folks. This version does not include glaze. We will work on an integrated version of glaze+ns. For now if you want benefits of both, you should shade first, glaze last. But note that artifacts might be more visible. The integrated version will fix this issue.

— Glaze at UChicago (@TheGlazeProject) January 19, 2024

The team also revealed plans to integrate Nightshade into WebGlaze, the browser version of Glaze.

we will integrate this into webglaze... just a matter of time

— Glaze at UChicago (@TheGlazeProject) January 19, 2024

The team also said it is exploring a version of Glaze for video, not just still images.

only still images. We are exploring a Glaze for video tool that prevents ai bros from taking freeze frames out of a video to steal

— Glaze at UChicago (@TheGlazeProject) January 19, 2024


in Software, Posted by log1r_ut