Glaze, a free tool that helps artists stop AI from copying their styles, has been cracked

A team of security researchers in Switzerland has published a paper pointing out that Glaze's protection can be easily circumvented.

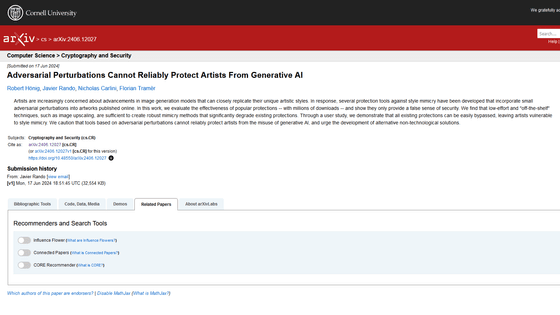

[2406.12027] Adversarial Perturbations Cannot Reliably Protect Artists From Generative AI

https://arxiv.org/abs/2406.12027

Glazing over security | SPY Lab

Why I attack

https://nicholas.carlini.com/writing/2024/why-i-attack.html

Tool preventing AI mimicry cracked; artists wonder what's next | Ars Technica

https://arstechnica.com/tech-policy/2024/07/glaze-a-tool-protecting-artists-from-ai-bypassed-by-attack-as-demand-spikes/

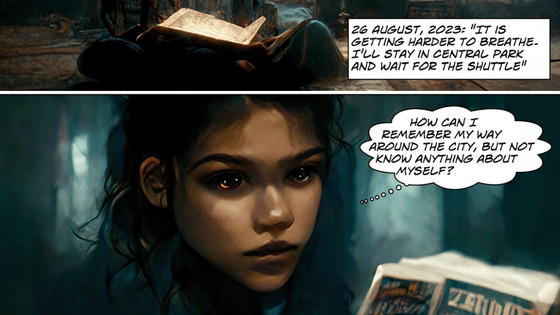

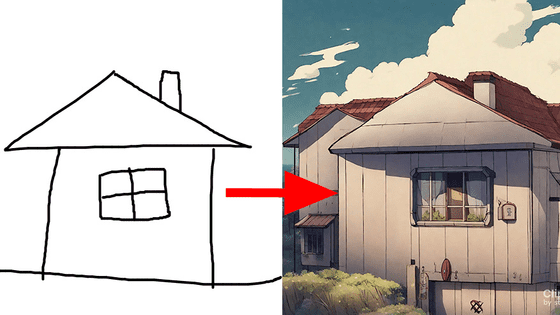

Glaze, developed by Ben Zhao of the University of Chicago and colleagues, is a tool that applies invisible 'perturbations' to each image, making it difficult for AI to learn the artist's style and generate images from prompts such as 'images that look like XX.' In an example by the development team, an AI trained on 'cloaked artwork', produced by applying Glaze to the original art, failed to imitate the original style.

'Glaze', a tool that makes invisible changes to original art to prevent AI from learning it, is released - GIGAZINE

Since its release, Glaze has been extremely popular with artists. According to Ars Technica, millions of artists have downloaded Glaze, and there are waits of weeks to months to use WebGlaze, the web version of Glaze. In response, the development team said, 'Given the explosive increase in demand, we need to immediately reconsider how we operate WebGlaze.'

Clearly, we have to step back and rethink the way we run webglaze right now, given the (likely sustained) explosion in demand. We might have to change the way we do invites and rethink the future of webglaze to keep it sustainable enough to support a large & growing user base.

— Glaze at UChicago (@TheGlazeProject) June 15, 2024

In addition, when announcing Glaze, the development team cautioned, 'Unfortunately, Glaze is not a permanent solution against mimicry by image-generating AI,' and 'Techniques we use to protect art, like Glaze, could be overcome by future countermeasures in image-generating AI, making previously protected art vulnerable.'

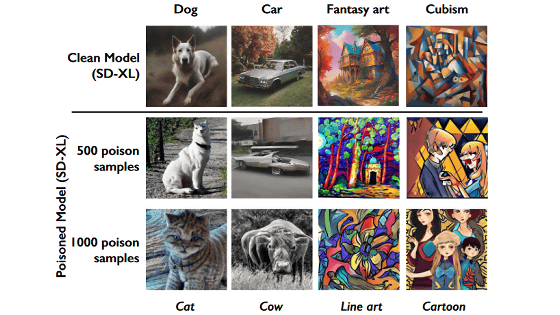

This concern has become a reality: a research team including Google DeepMind researcher Nicholas Carlini has argued that 'Glaze's protection can be easily circumvented, leaving artists vulnerable to style imitation.' The methods the team published for breaking through Glaze's protection include upscaling images, 'using a different fine-tuning script' when training the AI on new data, and 'applying Gaussian noise to the image before training.' The researchers point out that 'by applying these techniques, it is possible to significantly weaken existing protections.'
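The Gaussian-noise countermeasure mentioned above is conceptually simple: random noise is added to every pixel before an image is used for training, which can drown out a small protective perturbation. Below is a minimal sketch in Python, assuming an 8-bit grayscale image stored as a list of pixel rows. The function name `add_gaussian_noise` and the `sigma` value are illustrative assumptions, not the researchers' actual code or settings.

```python
import random

def add_gaussian_noise(pixels, sigma=8.0, seed=None):
    """Return a copy of an 8-bit grayscale image with Gaussian noise added.

    Illustrative sketch only (hypothetical helper, not the paper's code).
    pixels: list of rows, each a list of ints in [0, 255] (assumed layout).
    sigma:  standard deviation of the noise in pixel units (placeholder value).
    seed:   optional seed so the noise is reproducible.
    """
    rng = random.Random(seed)  # independent, optionally seeded RNG
    noisy = []
    for row in pixels:
        new_row = []
        for value in row:
            # Add zero-mean Gaussian noise, round to an integer,
            # then clip back into the valid 8-bit range.
            v = round(value + rng.gauss(0.0, sigma))
            new_row.append(max(0, min(255, v)))
        noisy.append(new_row)
    return noisy

if __name__ == "__main__":
    image = [[0, 128, 255], [64, 192, 32]]
    print(add_gaussian_noise(image, sigma=8.0, seed=0))
```

Larger values of `sigma` mask a perturbation more thoroughly but also wash out more of the artwork's genuine detail, so the value here is only a placeholder.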

In response, Zhao said, 'Most of the research team's attacks targeted earlier versions of Glaze, so their impact is limited,' and released an update so that Glaze's code now differs from the version the researchers attacked. However, Robert Hoenig of the research team said, 'Even the latest version of Glaze does not protect against all of these attacks.' In fact, according to the researchers, even with the latest version of Glaze applied, it is still possible to generate images from keywords such as 'manga-style' simply by applying a fine-tuning script to the protected artwork.

In response to the research team's criticism, Zhao dismissed the report, saying, 'This report is not worthy of any action.' The researchers countered that his remarks could be misleading.

The research team said they judged it better to publish their method for breaking Glaze's protection as soon as possible so that artists would learn of Glaze's potential vulnerability, and released details of how to carry out the attack without waiting for a Glaze update. The team also warned, 'Sometimes it is necessary to combine several of these attacks, but a motivated and well-resourced art forger could well try various methods to break Glaze's protection.'

He added, 'The best way to support artists is not to sell tools while refusing to analyze their security, as Glaze did. If there are flaws in the approach, we need to find vulnerabilities early so they can be fixed. That's why it's important to open source and study the tools.'

On the other hand, the Glaze development team said, 'The way to maintain artist protection is to properly protect the Glaze code, and we will not open source the code to increase resistance to adaptive attacks.'


in Software, Posted by log1r_ut