"PhotoGuard" is being developed to prevent AI from editing images by adding "invisible modifications" to them

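Recent advances in AI image generation have made it possible to create false information by altering an original image so seamlessly that the edit goes unnoticed. Meanwhile, a system called "PhotoGuard" is being developed that prevents AI from editing an image by adding invisible modifications to it.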

GitHub - MadryLab/photoguard: PhotoGuard: Defending Against Diffusion-based Image Manipulation

https://github.com/MadryLab/photoguard

PhotoGuard: Defending Against Diffusion-based Image Manipulation – gradient science

https://gradientscience.org/photoguard/

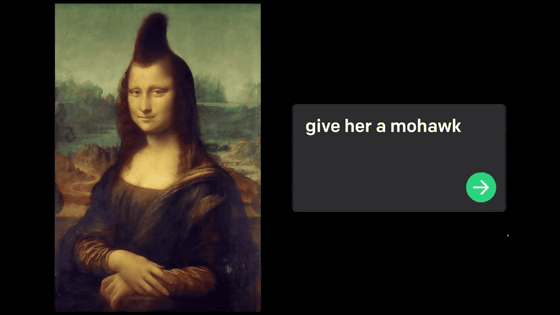

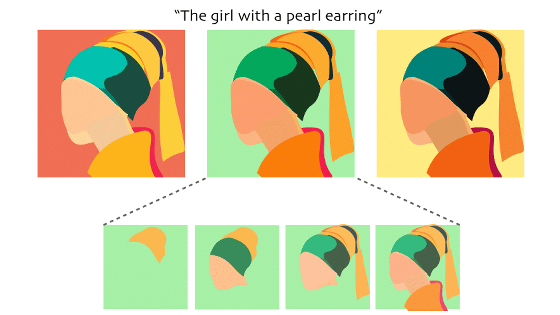

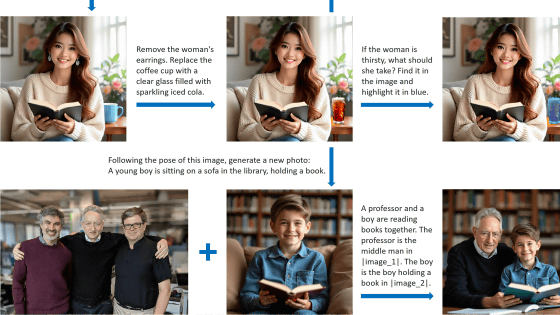

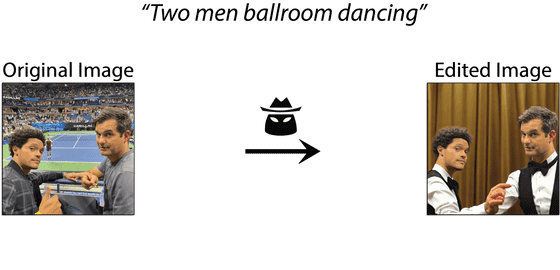

Mr. Salman and colleagues point out that image generation AI such as DALL-E has made it possible to generate and edit images using only simple natural language, and that the risk of malicious image editing has risen as a result. The image below is an example of AI image editing: when the original image is given to the AI together with the text prompt "Two men ballroom dancing", it is altered into the image on the right.

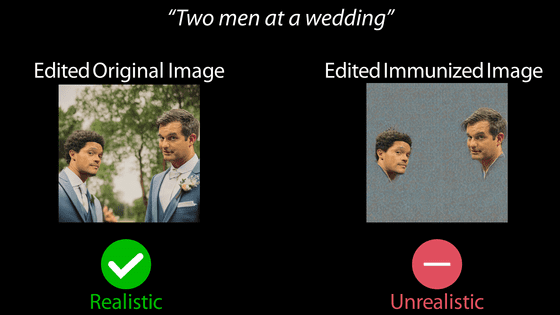

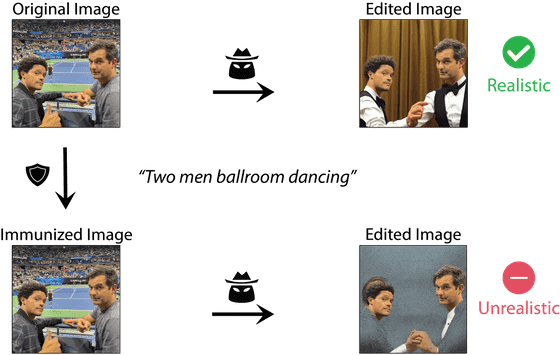

PhotoGuard, developed by Mr. Salman, is a system that interferes with AI image editing by making invisible modifications to an image. An example using PhotoGuard is shown below. An image to which PhotoGuard has been applied (Immunized Image) is visually indistinguishable from the original, but when the AI is given the same instruction as above, the resulting image looks unnatural.

PhotoGuard can mount an adversarial attack on both the "stage of processing data with an encoder" and the "stage of converting noise into an image" of an image generation AI. Below is an example of an attack on the "stage of processing data with an encoder". When the AI is given the prompt "Dog under heavy rain and muddy ground", the edited version of the immunized image (Edited Immunized Image) ignores the elements of the original image, and only the content of the prompt is reflected in the result.
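To give a feel for how an attack on the encoder stage can work, here is a minimal sketch in plain Python. It is not PhotoGuard's actual code. It assumes a toy linear map as a stand-in for a real image encoder, and the names `encode`, `immunize`, `eps`, and `step` are hypothetical. The idea it illustrates is projected gradient descent: each pixel is nudged by at most `eps` so that the encoder's output drifts toward the latent of an unrelated target (here, a flat gray image).

```python
import random

def encode(x, W):
    """Hypothetical stand-in for an image encoder: a fixed linear map."""
    return [sum(w * p for w, p in zip(row, x)) for row in W]

def sq_dist(a, b):
    """Squared Euclidean distance between two latent vectors."""
    return sum((u - v) ** 2 for u, v in zip(a, b))

def immunize(x, z_target, W, eps=0.03, step=0.005, iters=100):
    """Projected signed-gradient descent on ||encode(x + delta) - z_target||^2,
    keeping every pixel change within +/- eps."""
    delta = [0.0] * len(x)
    for _ in range(iters):
        x_adv = [p + d for p, d in zip(x, delta)]
        residual = [z - t for z, t in zip(encode(x_adv, W), z_target)]
        # Analytic gradient for the linear toy encoder: 2 * W^T * residual.
        grad = [2.0 * sum(W[k][i] * residual[k] for k in range(len(W)))
                for i in range(len(x))]
        for i, g in enumerate(grad):
            d = delta[i] - step * ((g > 0) - (g < 0))  # signed gradient step
            d = max(-eps, min(eps, d))                  # project back into the eps-ball
            d = max(0.0, min(1.0, x[i] + d)) - x[i]     # keep the pixel in [0, 1]
            delta[i] = d
    return [p + d for p, d in zip(x, delta)]

rng = random.Random(0)
x = [rng.uniform(0.2, 0.8) for _ in range(64)]           # flattened toy "image"
W = [[rng.gauss(0.0, 1.0) / 8.0 for _ in range(64)]     # toy encoder weights
     for _ in range(8)]
z_target = encode([0.5] * 64, W)                         # latent of a flat gray image

x_imm = immunize(x, z_target, W)
dist_before = sq_dist(encode(x, W), z_target)
dist_after = sq_dist(encode(x_imm, W), z_target)
max_change = max(abs(a - b) for a, b in zip(x_imm, x))
```

In this toy setting the immunized image differs from the original by no more than `eps` per pixel, yet its latent sits closer to the gray target than the original's does. With a real encoder the gradient would come from automatic differentiation rather than the closed-form expression used here.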

The following is an example of an attack on the "stage of converting noise into an image". The image edited by the AI (Edited Immunized Image) is filled with noise everywhere except the person's face, showing that the "stage of converting noise into an image" was not carried out correctly.
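The sketch below illustrates, under heavy simplification, what attacking a multi-step generation process involves. It is not PhotoGuard's code and it does not use a real diffusion model: `generate` is a hypothetical toy process that refines a noise vector over several steps while conditioned on the image, and all function and parameter names are assumptions. The perturbation is optimized against the final output, so the gradient has to be carried backward through every step.

```python
import math
import random

def generate(x, z0, steps=10, a=0.5, b=1.0):
    """Hypothetical iterative generator: refines z over several steps,
    conditioned on the image x. Returns every intermediate state."""
    states = [z0]
    for _ in range(steps):
        z = states[-1]
        states.append([math.tanh(a * zi + b * xi) for zi, xi in zip(z, x)])
    return states

def loss_and_grad(x, z0, target, a=0.5, b=1.0):
    """Squared error between the final output and the target, plus its
    gradient with respect to x, backpropagated through every step."""
    states = generate(x, z0, a=a, b=b)   # all states are kept for the backward pass
    out = states[-1]
    loss = sum((o - t) ** 2 for o, t in zip(out, target))
    g_z = [2.0 * (o - t) for o, t in zip(out, target)]
    g_x = [0.0] * len(x)
    for z_next in reversed(states[1:]):
        g_pre = [g * (1.0 - zn * zn) for g, zn in zip(g_z, z_next)]  # tanh derivative
        g_x = [gx + b * gp for gx, gp in zip(g_x, g_pre)]            # contribution via x
        g_z = [a * gp for gp in g_pre]                               # pass back to previous z
    return loss, g_x

def immunize(x, z0, target, eps=0.03, step=0.005, iters=50):
    """Signed-gradient descent on the end-to-end loss, with each pixel
    change clipped to +/- eps."""
    delta = [0.0] * len(x)
    for _ in range(iters):
        x_adv = [p + d for p, d in zip(x, delta)]
        _, grad = loss_and_grad(x_adv, z0, target)
        delta = [max(-eps, min(eps, d - step * ((g > 0) - (g < 0))))
                 for d, g in zip(delta, grad)]
    return [p + d for p, d in zip(x, delta)]

rng = random.Random(1)
x = [rng.uniform(0.2, 0.8) for _ in range(16)]   # flattened toy "image"
z0 = [rng.gauss(0.0, 1.0) for _ in range(16)]    # starting noise
target = [0.0] * 16                              # flat target output

loss_before, _ = loss_and_grad(x, z0, target)
x_imm = immunize(x, z0, target)
loss_after, _ = loss_and_grad(x_imm, z0, target)
max_change = max(abs(p - q) for p, q in zip(x_imm, x))
```

Note that `loss_and_grad` has to keep the state from every step in order to run the backward pass. In a real model each of those states is a large tensor, which is one plausible reason an end-to-end attack of this kind would be memory-hungry.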

According to Mr. Salman, running PhotoGuard requires a large amount of memory. The team plans to continue development with the aim of reducing memory use and strengthening PhotoGuard's ability to interfere with AI.

There is also "ShieldMnt", developed in 2021, as a "system that prevents alteration without changing the appearance of the image". ShieldMnt is a technology that adds an invisible watermark to images, and it is said to make it possible to prevent image alteration and unauthorized reuse.

in Software, Posted by log1r_ut