Why is there a shortage of the NVIDIA H100, the high-end GPU that developers of generative AI and large language models are craving?


Nvidia H100 GPUs: Supply and Demand GPU Utils ⚡

https://gpus.llm-utils.org/nvidia-h100-gpus-supply-and-demand/

Sam Altman, CEO of OpenAI, the AI company that develops ChatGPT, said at a hearing in May 2023, "We're so short on GPUs that the fewer people who use our products, the better," revealing how the lack of GPU supply is affecting the company. AI companies such as OpenAI are seeking high-end GPUs like the NVIDIA H100.

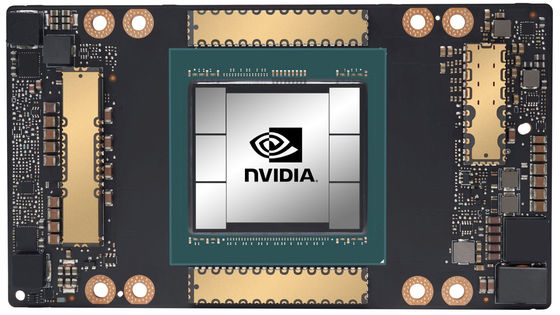

The NVIDIA H100 is in demand from startups training LLMs, such as OpenAI, and from cloud service providers such as Microsoft Azure and AWS. Some buyers use it to test existing AI models, while others, including almost unknown startups, are buying up high-end GPUs like the NVIDIA H100 to build new AI models from scratch.

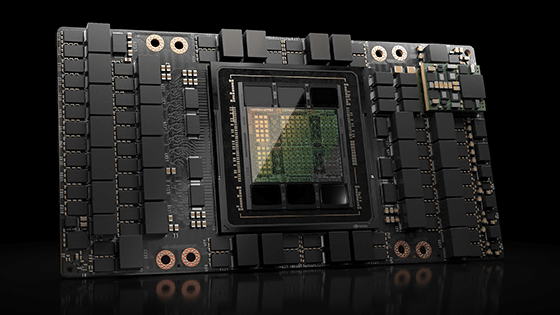

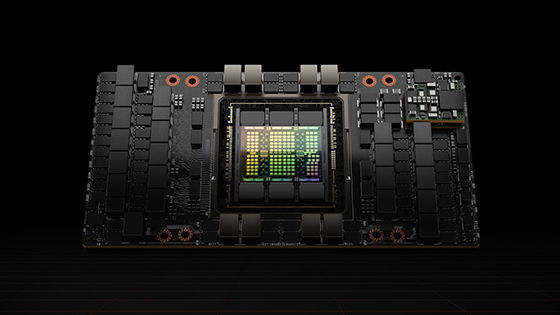

The reason most companies looking for high-end GPUs want the NVIDIA H100 is simple: "It has the best performance of any existing product for both LLM inference and training." The NVIDIA H100 is also highly cost-effective and shortens the time it takes to train AI models.

One deep learning researcher explained the H100's appeal: "The NVIDIA H100 is recommended because it is up to three times more efficient, yet it costs only 1.5 to 2 times as much as existing GPUs, so its cost performance is high. Even when you combine the overall system cost with the GPU cost, the NVIDIA H100 delivers much higher performance per dollar, and if you look at system performance, performance per dollar is probably 4 to 5 times higher."
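The researcher's GPU-only argument comes down to one division: relative performance per dollar equals the speedup divided by the price ratio. The sketch below applies it to the quoted figures (up to 3x faster at 1.5x to 2x the price). The function name is hypothetical, and the researcher's 4x to 5x system-level estimate cannot be derived from these two numbers alone, so it is not modeled here.

```python
def perf_per_dollar_ratio(speedup: float, price_ratio: float) -> float:
    """Relative performance per dollar of a new GPU versus an old one.

    speedup: how many times faster the new GPU is (e.g. 3.0)
    price_ratio: how many times more the new GPU costs (e.g. 1.5)
    """
    return speedup / price_ratio


# GPU-only figures quoted by the researcher: up to 3x faster at 1.5x to 2x the price.
for price_ratio in (1.5, 2.0):
    ratio = perf_per_dollar_ratio(3.0, price_ratio)
    print(f"price x{price_ratio}: performance per dollar x{ratio:.1f}")
```

On the GPU alone this gives a 1.5x to 2x improvement in performance per dollar, which is why the researcher's larger figure rests on system-level costs rather than the chip price.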

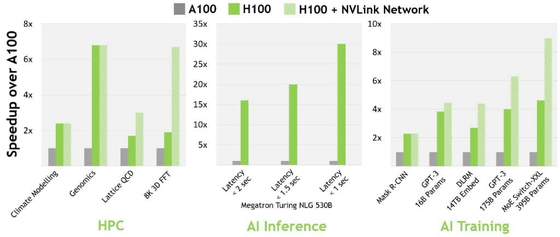

NVIDIA H100 (green), the previous-generation high-end GPU NVIDIA A100 (gray), and the combination of NVIDIA H100 and …

The NVIDIA H100 (green) also achieves nine times the throughput of the NVIDIA A100 (light green): training that takes seven days on the NVIDIA A100 can be completed in just 20 hours on the NVIDIA H100.
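As a quick sanity check, converting the seven-day A100 run to hours and dividing by the 20-hour H100 run gives the implied wall-clock speedup. This is only arithmetic on the two quoted durations; throughput and end-to-end training time are related but not identical measures, so the result need not match the "nine times" figure exactly.

```python
A100_DAYS = 7     # training time on the NVIDIA A100, from the text
H100_HOURS = 20   # training time on the NVIDIA H100, from the text

a100_hours = A100_DAYS * 24        # convert days to hours
speedup = a100_hours / H100_HOURS  # implied wall-clock speedup
print(f"A100: {a100_hours} h, H100: {H100_HOURS} h, speedup: {speedup:.1f}x")
```

The implied speedup is 168 / 20 = 8.4x, broadly in line with the roughly ninefold throughput claim.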

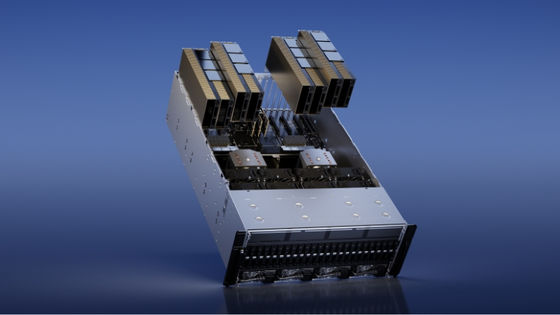

The price of the NVIDIA H100 varies by product. For example, the NVIDIA DGX H100, which contains eight NVIDIA H100s, sells for $460,000 (about 66 million yen), of which $100,000 (about 14 million yen) is a support fee. Startups can receive a discount of about $50,000 (about 7 million yen).
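To put the DGX H100 price on a per-GPU basis, the sketch below subtracts the support fee and divides by the eight GPUs in the system. Two assumptions are worth flagging: the result is a per-slot share of the whole system (it still includes the CPUs, memory, networking, and chassis, not just the H100), and the startup discount is assumed here, hypothetically, to come off the hardware portion rather than the support fee.

```python
DGX_H100_PRICE = 460_000   # USD, selling price of a DGX H100 (from the text)
SUPPORT_FEE = 100_000      # USD, support portion of that price (from the text)
STARTUP_DISCOUNT = 50_000  # USD, approximate startup discount (from the text)
GPUS_PER_SYSTEM = 8        # H100s per DGX H100 (from the text)

# Hardware share of the price, excluding support.
hardware = DGX_H100_PRICE - SUPPORT_FEE
per_gpu = hardware / GPUS_PER_SYSTEM

# ASSUMPTION: the startup discount is applied to the hardware portion.
per_gpu_discounted = (hardware - STARTUP_DISCOUNT) / GPUS_PER_SYSTEM

print(f"Per-GPU system share: ${per_gpu:,.0f}")
print(f"With startup discount: ${per_gpu_discounted:,.0f}")
```

Under these assumptions the hardware works out to $45,000 per GPU slot, or $38,750 with the discount.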

OpenAI's LLM GPT-4 is believed to have been trained on approximately 10,000 to 25,000 NVIDIA A100s. Meta is said to have about 21,000 NVIDIA A100s, Tesla about 7,000, and Stability AI about 5,000. Inflection has also revealed that it used 3,500 NVIDIA H100s to train an AI model comparable to GPT-3.5.

Microsoft Azure is believed to have about 10,000 to 40,000 NVIDIA H100s, and Oracle a similar number. Most of Azure's NVIDIA H100s appear to be allocated to OpenAI.

Even so, OpenAI is believed to need 50,000 NVIDIA H100s, Meta 25,000, and each of the large cloud services such as Azure, Google Cloud, and AWS about 30,000. At an estimated $35,000 (about 5 million yen) per NVIDIA H100, it is clear that any company trying to deploy NVIDIA H100s at this scale faces an enormous capital investment.
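Multiplying the estimated unit demand by the estimated $35,000 per-GPU price shows the scale of that investment. This sketch uses only the article's estimates, treats Azure, Google Cloud, and AWS as needing 30,000 units each, and ignores discounts, the surrounding servers, networking, and power, so it is a rough lower-bound illustration rather than a forecast.

```python
PRICE_PER_H100 = 35_000  # USD, the article's rough per-unit estimate

# Estimated H100 demand per buyer, as given in the article.
demand = {
    "OpenAI": 50_000,
    "Meta": 25_000,
    "Azure": 30_000,
    "Google Cloud": 30_000,
    "AWS": 30_000,
}

# GPU cost per buyer, in billions of dollars.
for buyer, units in demand.items():
    print(f"{buyer}: ${units * PRICE_PER_H100 / 1e9:.3f} billion")

total_units = sum(demand.values())
total_cost = total_units * PRICE_PER_H100
print(f"Total: {total_units:,} GPUs, ${total_cost / 1e9:.3f} billion")
```

For these five buyers alone, the estimates add up to 165,000 GPUs and about $5.8 billion in GPU purchases.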

Taking ChatGPT, the most popular LLM product, as an example, GPU Utils explains how GPU demand arises as follows.

1: Users use ChatGPT a lot, and OpenAI generates recurring revenue of around $500 million (about 71.4 billion yen) annually.

2: ChatGPT runs on GPT-4 and GPT-3.5 APIs.

3: GPUs are required to run GPT-4 and GPT-3.5. OpenAI would also like to release many more features for ChatGPT and its API, but has been unable to do so because it lacks access to enough GPUs.

4: OpenAI uses NVIDIA GPUs through Microsoft Azure, and specifically wants the NVIDIA H100.

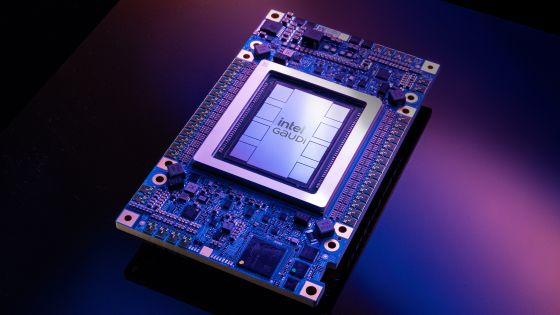

5: To produce the NVIDIA H100, including the NVIDIA DGX H100 systems built with it, NVIDIA outsources manufacturing to TSMC. TSMC uses its CoWoS packaging technology and HBM3 memory supplied mainly by SK Hynix.

Many AI companies besides OpenAI are developing LLM products in the same way, so global demand for high-end GPUs is rising and causing supply shortages. According to GPU Utils, the NVIDIA H100 shortage will last at least until the end of 2023 and may continue until mid-2024.


in Hardware, Posted by logu_ii