More than 100,000 ChatGPT accounts have been stolen and traded on the dark web, raising the risk that confidential company information could leak

Group-IB Discovers 100K+ Compromised ChatGPT Accounts on Dark Web Marketplaces; Asia-Pacific region tops the list | Group-IB

https://www.group-ib.com/media-center/press-releases/stealers-chatgpt-credentials/

Trove of ChatGPT creds found on dark web • The Register

https://www.theregister.com/2023/06/20/stolen_chatgpt_accounts/

Over 100,000 ChatGPT accounts stolen via info-stealing malware

https://www.bleepingcomputer.com/news/security/over-100-000-chatgpt-accounts-stolen-via-info-stealing-malware/

Group-IB's threat intelligence platform monitors dark web cybercrime forums, marketplaces, closed communities, and other sources in real time. This lets it detect compromised credentials and new malware and identify risks before further damage occurs.

Group-IB has now reported that more than 101,000 ChatGPT account credentials were stolen over the past year by infostealers, a type of malware that harvests credentials from infected devices. Infostealers mainly collect web service credentials, credit card details, cryptocurrency wallet passwords, and email and messaging app conversations. The stolen data may be misused by the attackers who collected it or sold to other hackers.

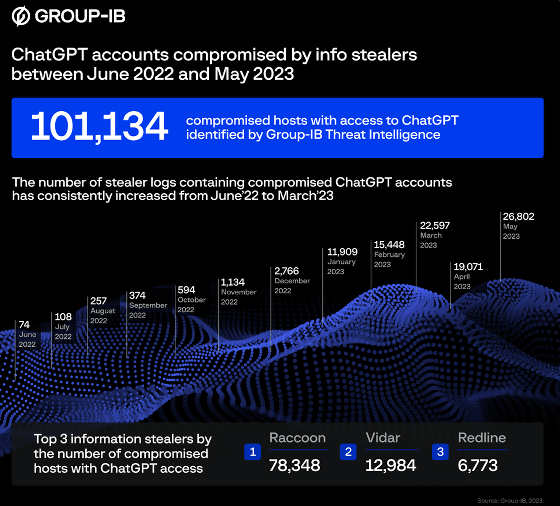

The chart below shows the number of ChatGPT accounts compromised by infostealers and traded on the dark web from June 2022 to May 2023. In June 2022 there were only 74 cases per month. The figure rose to 1,134 in November and peaked at 26,802 in May 2023, the highest monthly total in the monitoring period. Over the whole period, 101,134 ChatGPT accounts were compromised: 78,348 by the Raccoon infostealer, 12,984 by Vidar, and 6,773 by RedLine.

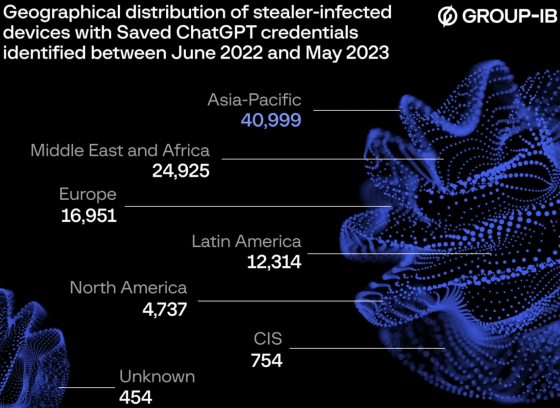

The compromised ChatGPT accounts are also unevenly distributed by region: 40,999 in the Asia-Pacific region, 24,925 in the Middle East and Africa, 16,951 in Europe, 12,314 in Latin America, 4,737 in North America, and 754 in

Employees are increasingly using AI such as ChatGPT at work. An executive at the consulting firm McKinsey & Company recently said, "About half of our employees are using generative AI as part of their work, with permission from McKinsey." Reports also indicate that a growing number of employees have entered confidential company data into ChatGPT, and the risk of confidential information leaking through ChatGPT has been pointed out.

Samsung engineers have already pasted confidential source code into ChatGPT, and Apple has restricted the use of external AI tools such as ChatGPT due to concerns about confidential data leakage.

Apple restricts internal use of external AI tools such as ChatGPT and GitHub Copilot, due to concerns about confidential data leakage - GIGAZINE

By default, ChatGPT saves users' queries and its responses in a chat history, so if an infostealer compromises an employee's ChatGPT account, confidential company information may be exposed.

The tech outlet BleepingComputer recommends that users who enter sensitive information into ChatGPT either turn off chat history saving in the settings menu or clear the history once a conversation is over. However, infostealers can also capture data through screenshots and keylogging, so data may still be stolen even if conversations are not stored in the ChatGPT account.

in Web Service, Security, Posted by log1h_ik