AI's godfather rejects AI threat theories such as "AI will take over the world" and "AI research should be strictly controlled," saying that "AI hasn't even reached the intelligence of a dog, let alone a human"

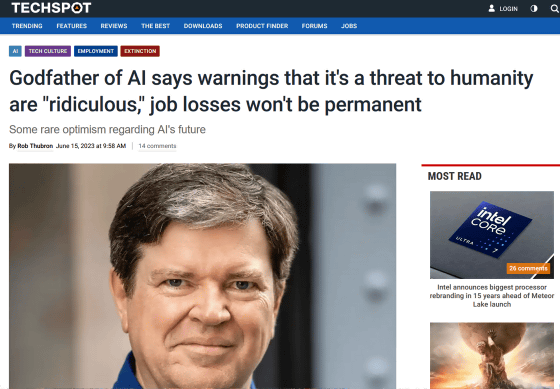

Godfather of AI says warnings that it's a threat to humanity are 'ridiculous,' job losses won't be permanent | TechSpot

https://www.techspot.com/news/99086-godfather-ai-warnings-threat-humanity-ridiculous-job-losses.html

AI is not even at dog-level intelligence yet: Meta AI chief

https://www.cnbc.com/2023/06/15/ai-is-not-even-at-dog-level-intelligence-yet-meta-ai-chief.html

In recent years, as highly accurate generative AIs have appeared one after another and attracted public attention, the "AI threat theory," which holds that AI may cause harm to humans, has also been gaining ground. OpenAI, the developer of the conversational AI ChatGPT, is concerned that "AI will exceed the skill level of experts in most fields within 10 years," and argues that an international regulatory body needs to be established.

On May 30, 2023, more than 350 AI researchers and CEOs, including Sam Altman, CEO of OpenAI, and Demis Hassabis, CEO of Google DeepMind, signed a statement declaring, "Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war." The EU is already planning to approve a "bill to regulate the use of AI" in 2023, and Mr. Altman has said that if OpenAI cannot comply with the EU's AI regulations, it may suspend its services in the EU.

More than 350 AI researchers and company CEOs, including Sam Altman, CEO of OpenAI, signed a letter appealing that "reducing the risk of extinction by AI is the same priority as nuclear war and pandemic countermeasures" - GIGAZINE

Among the "godfathers of AI" who shared the Turing Award with Mr. LeCun, stances on the AI threat theory differ. Yoshua Bengio was one of the signatories of the May 30 letter, and it was reported in May 2023 that Geoffrey Hinton had left Google in April 2023 so that he could speak out about threats such as the spread of AI-generated deepfakes and negative impacts on jobs.

"Godfather of AI" regrets AI research and leaves Google - GIGAZINE

Mr. LeCun, on the other hand, has long been skeptical of the AI threat theory. At a panel on AI threats at the tech conference VivaTech, held in Paris in June 2023, Mr. LeCun took the stage with the French thinker Jacques Attali.

Mr. Attali pointed out that whether AI turns out good or bad depends on how it is used: "If AI is used to develop more fossil fuels and weapons, it will be terrible. But AI can also be great for health, education and culture."

In the same panel, Mr. LeCun argued that the large language models underlying AI at the time of writing are limited because they are "trained only on language." "These systems are still very limited and don't understand the fundamentals of the real world at all," LeCun said. "Most of human knowledge has nothing to do with language, so part of the human experience cannot be captured by AI."

OpenAI's GPT-4 already performs well enough to score in the top 10% on the bar exam, yet it cannot do even a simple task like washing dishes, which a human child can learn in about 10 minutes. "This tells us that we are missing something necessary to reach not just human-level intelligence, but even dog-level intelligence," LeCun said, drawing on this comparison.

Human babies do not understand the basic laws of the world from birth. Five-month-old babies do not react in any particular way to objects floating in the air, but nine-month-old babies are surprised when they see one. This shows that by then the baby has come to understand the basic law that "objects do not float in midair."

"At this stage, we have absolutely no idea how to replicate this ability in machines," LeCun said. "Until we can do this, we are not going to have human-level intelligence, or even dog-level or cat-level intelligence." To address these problems, Meta is working on training AI on video as well as language.

When Mr. Attali asked about "the danger of AI rebelling against humans," Mr. LeCun acknowledged that AI smarter than humans will appear in the future, but rejected the idea that it poses a danger, saying scientists believe such AI can be developed to be "controllable and basically subservient to humans." "We should not see this as a threat, but as something very beneficial," LeCun said. "It will be like having a staff that is smarter than you helping you in your daily life."

On the concern that AI will take over the world, Mr. LeCun said, "The fear spread by science fiction novels is that if robots were smarter than us, they would try to conquer the world. But there is no correlation between being smart and wanting to conquer the world."

In an interview with the BBC, Mr. LeCun also said that some experts' concerns that AI is a threat to humanity are "ridiculous," and that if it turns out to be dangerous, we can simply stop developing it. He opposes the view that "AI research should be strictly controlled," arguing that the fear of AI taking over the world is a projection of human nature onto AI.

Mr. LeCun told the BBC that the AI threat debate at the time of writing was "like asking in 1930 how to make the turbojet engine safe, before it had even been invented," and indicated that it will take several years for AI to acquire intelligence comparable to that of humans. On concerns that more workers will lose their jobs to AI, he said, "AI will not permanently put many people out of work," arguing that while the content of jobs may change, the jobs themselves will continue to exist.

It has also been reported that Meta wants to make its open-source large language model LLaMA available for commercial use.

Meta Wants Companies to Make Money Off Its Open-Source AI, in Challenge to Google — The Information

https://www.theinformation.com/articles/meta-wants-companies-to-make-money-off-its-open-source-ai-in-challenge-to-google


in Software, Web Service, Posted by log1h_ik