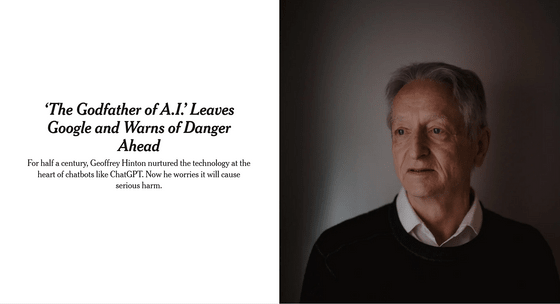

'Godfather of AI' regrets AI research and leaves Google

'The Godfather of AI' Quits Google and Warns of Danger Ahead - The New York Times

https://www.nytimes.com/2023/05/01/technology/ai-google-chatbot-engineer-quits-hinton.html

Mr. Hinton is a pioneer of AI research who published

However, in an interview with The New York Times at his home in Toronto, Canada, Mr. Hinton revealed that he had notified CEO Sundar Pichai in April 2023 and resigned. Until then, Mr. Hinton had avoided speaking out about the dangers of AI because he did not want to publicly criticize Google and other AI companies while he was still engaged in AI research there. He said he left Google so that he could speak freely about those dangers.

In the interview, Mr. Hinton said that he regretted his life's work, adding, "I don't see a way to prevent the bad guys from using AI for evil."

Mr. Hinton, who was 75 years old at the time of writing, began his career as an AI researcher in 1972, when he was a graduate student at the University of Edinburgh in Scotland. Around that time he was already working on the ideas underlying neural networks, but few researchers then understood or accepted them.

In the 1980s, he was a professor at Carnegie Mellon University in the United States, but he moved to Canada because he did not want to accept funding from the Department of Defense. At the time, most AI research in the United States was funded by the Department of Defense. Mr. Hinton was deeply concerned about the use of AI in warfare, including what he called "robot soldiers," and did not want the Pentagon involved in his work.

In 2012, together with University of Toronto students Alex Krizhevsky and Ilya Sutskever, he published a research paper on AlexNet, laying the foundation for today's neural network technology.

Of the two students who developed the neural network with Mr. Hinton, Mr. Sutskever later became a co-founder of OpenAI. Citing the risks of AI technology, Mr. Sutskever has also said, "We were wrong," and OpenAI has shifted to a policy of restricting the disclosure of AI research information that it had previously made open.

OpenAI co-founder says "We were wrong," a major shift to a policy of not opening data because of the dangers of AI - GIGAZINE

Google later acquired the company that Mr. Hinton had founded with the two students, and Mr. Hinton and his colleagues continued their AI research, which led to the emergence of AI such as ChatGPT and Bard. In 2018, Mr. Hinton and others won the Turing Award for their work on neural networks.

At that time, Mr. Hinton thought that neural networks were "a powerful way for machines to understand and generate language," but he did not think they could compete with humans. With the advent of generative AI such as ChatGPT, however, his view changed considerably: he came to feel that "what is happening in this system may be much better than what is happening in the human brain."

Mr. Hinton, who is concerned about the AI development race among companies, had stayed at Google until now because the company had been careful not to release dangerous technology. But Microsoft's introduction of a chatbot into Bing search, and Google's entry into the space with Bard, prompted Mr. Hinton to question Google's role as a "moderate steward of AI technology."

Bard's release has reportedly been criticized as "premature" from within Google itself.

Google employees are dissatisfied with the too hasty announcement of 'Bard' - GIGAZINE

Mr. Hinton's immediate concern is that the Internet will be flooded with fake photos, videos, and text, and that ordinary people will no longer be able to tell what is true. He also fears that AI will upend the job market. So far, generative AI like ChatGPT has complemented human work, but it has also been pointed out that it could replace people doing routine jobs such as paralegals, personal assistants, and translators.

In addition, Hinton is concerned that AI may become a threat to humanity in the future because it analyzes huge amounts of data and learns behaviors that humans do not expect. If companies don't just let AI generate code, but give it the hardware to actually run that code, a truly autonomous weapon might become a reality: the killer robot Hinton fears.

Many experts, including Mr. Hinton's students and colleagues, regard such threats as hypothetical. However, Mr. Hinton believes that if the competition among large companies such as Google and Microsoft escalates into a global race and no international regulation is put in place, it may become impossible to put the brakes on development.

Mr. Hinton said that the idea that AI could become smarter than humans used to be a minority view, and that he himself had thought it was still many years away, but that he no longer thinks so.

Among those who warn of the dangers of AI, some argue that the threat is comparable to that of nuclear weapons. Unlike nuclear weapons, however, there is no way for outsiders to know whether advanced AI exists.

When Mr. Hinton was once asked how he could work on potentially dangerous technology, he would paraphrase Robert Oppenheimer, who led the development of the atomic bomb, saying in effect, "If there is an attractive technology, you go ahead and do it." He said he would never say those words again.

in Software, Posted by log1l_ks