More than 350 AI researchers and company CEOs, including OpenAI CEO Sam Altman, sign a statement declaring that "reducing the risk of extinction from AI should be as high a priority as countermeasures against nuclear war and pandemics"

More than 350 AI researchers and CEOs of AI research organizations have signed a statement warning of the risk of extinction from AI.

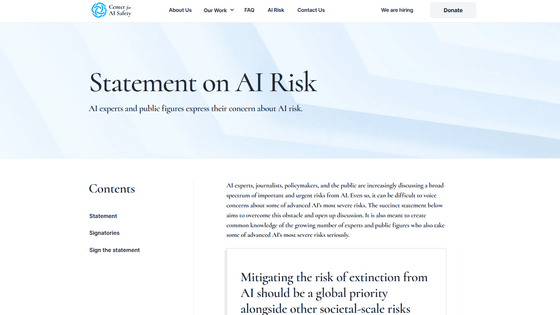

Statement on AI Risk | CAIS

https://www.safe.ai/statement-on-ai-risk

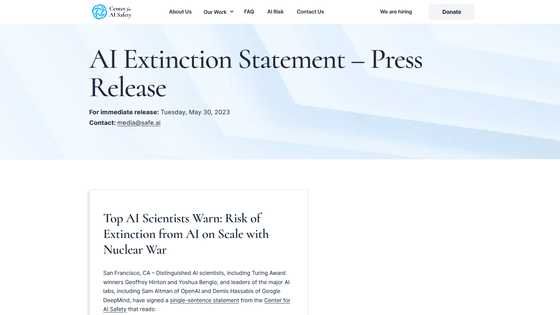

AI Extinction Statement Press Release | CAIS

Top AI researchers and CEOs warn against 'risk of extinction' in 22-word statement - The Verge

OpenAI execs warn of “risk of extinction” from artificial intelligence in new open letter | Ars Technica

https://arstechnica.com/information-technology/2023/05/openai-execs-warn-of-risk-of-extinction-from-artificial-intelligence-in-new-open-letter/

The Center for AI Safety (CAIS), a non-profit organization that promotes the responsible and ethical development of AI, released a statement on May 30, 2023: "Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war."

The statement was signed by more than 350 people, including the CEOs of AI research companies such as OpenAI CEO Sam Altman, Google DeepMind CEO Demis Hassabis, and Anthropic CEO Dario Amodei, as well as researchers such as Yoshua Bengio and Stuart Russell.

CAIS Director Dan Hendrycks noted that before the spread of COVID-19, the concept of a "pandemic" was not on the general public's mind, and argued that it is not "premature" to prepare now.

"While addressing the issues AI poses today, the AI industry and governments around the world also need to grapple with the risk that future AI could pose a threat to human existence," Hendrycks said.

"Reducing the risk of AI-induced extinction requires global action. The world has successfully worked together to reduce the risk of nuclear war, and reducing the risk from AI requires the same level of effort," Hendrycks said. "Our statement is very brief and does not set out how to mitigate the threat posed by AI, because our aim was to open a dialogue among AI researchers and company CEOs while avoiding disagreements."

On the other hand, author Daniel Jeffries criticized the statement, saying, "Future AI-induced extinction risk is a fictitious problem, and fictitious things cannot be fixed. I hope people will spend their time on today's problems instead." He also said, "Trying to solve fictitious future problems is a waste of time. Solving current problems will naturally solve future problems."

AI risks and harms are now officially a status game where everyone piles onto the bandwagon to make themselves look good.

It costs absolutely nothing to say you support 'studying' existential risk (you can't do this in any meaningful way) and that you support 'doing something…

—Daniel Jeffries (@Dan_Jeffries1) May 30, 2023

Meta's Yann LeCun also said, "Since there is no AI that exceeds human intelligence yet, I think it is premature to place the extinction risk of AI on the same level as pandemics and nuclear war. We should discuss how to develop AI safely only after AI with at least dog-level intelligence has emerged."

Super-human AI is nowhere near the top of the list of existential risks.

In large part because it doesn't exist yet.

Until we have a basic design for even dog-level AI (let alone human level), discussing how to make it safe is premature. https://t.co/ClkZxfofV9

— Yann LeCun (@ylecun) May 30, 2023

In March 2023, the Future of Life Institute published an open letter calling for an immediate six-month pause on the development of AI more powerful than GPT-4, the next-generation large language model. The letter was signed by Elon Musk and Apple co-founder Steve Wozniak.

More than 1,300 people, including Elon Musk and Steve Wozniak, sign an open letter calling on all developers to immediately pause development of AI beyond GPT-4 for six months over fears of "loss of control" - GIGAZINE

in Software, Posted by log1r_ut