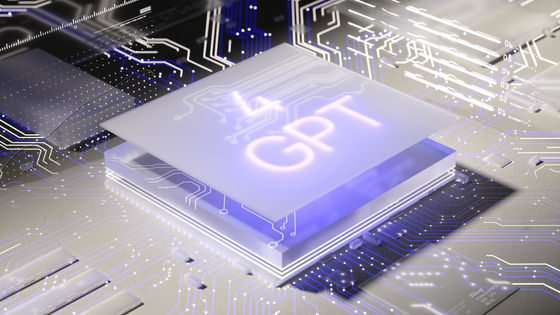

More than 1,300 people, including Elon Musk and Steve Wozniak, have signed an open letter calling on developers to immediately pause development of AI more powerful than GPT-4 for six months, citing fears of a “loss of control.”

With the success of

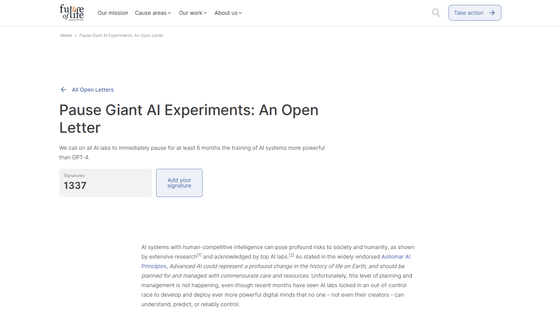

Pause Giant AI Experiments: An Open Letter - Future of Life Institute

https://futureoflife.org/open-letter/pause-giant-ai-experiments/

Tech pioneers call for six-month pause of 'out-of-control' AI development | IT PRO

AI Leaders Urge Labs to Halt Training Models More Powerful Than ChatGPT-4 - Bloomberg

https://www.bloomberg.com/news/articles/2023-03-29/ai-leaders-urge-labs-to-stop-training-the-most-advanced-models

Elon Musk, scientists, tech leaders sign open letter about GPT-4, Open AI

https://www.axios.com/2023/03/29/elon-musk-gpt-4-chat-open-ai

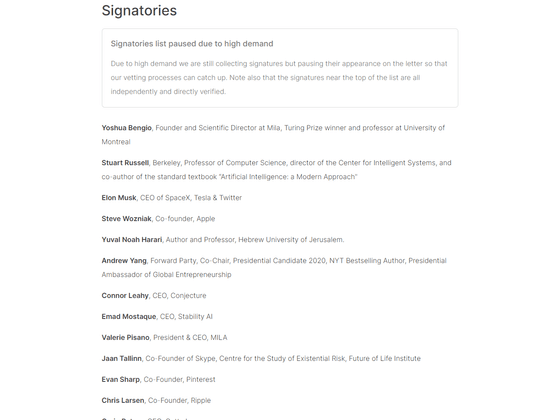

In the open letter, the Future of Life Institute, a non-profit organization that promotes the responsible and ethical development of artificial intelligence, said: “As AI development accelerates, it poses serious risks to society. There is a clear lack of corporate and regulatory safeguards against generative AI developments such as GPT-4 and ChatGPT.”

In addition, the letter posed the following question:

“Modern AI has now grown to the point where it can compete with humans at general tasks such as taking tests and programming. Should we risk losing the civilization we have built so far?”

The Future of Life Institute therefore called for an immediate suspension of advanced AI development and training for at least six months, so that a set of shared safety protocols for advanced AI development can be jointly developed and implemented. It further argues that “the moratorium must be public and verifiable” and that “if a decision to suspend AI development cannot be made immediately, governments should intervene with the organizations developing AI.”

The Future of Life Institute also says: “Advanced AI should only be developed once we are confident that its impact will be positive and its risks can be managed.”

In response to these claims by the Future of Life Institute, Musk and Wozniak, as well as Skype co-founder Jaan Tallinn and others, signed the letter.

Meanwhile, an OpenAI spokesperson said, “We spent more than six months improving the safety of the model after training GPT-4,” and indicated that the company would not sign the Future of Life Institute letter.

・Follow-up

Many fake signatures found on open letter calling for a temporary halt to the “uncontrollable AI development race,” as AI researchers continue to push back against the letter - GIGAZINE


Posted by log1r_ut