Benchmarks of various chat AI language models suggest that Meta's large language model 'LLaMA' may be able to reproduce ChatGPT

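In recent years, research in the field of machine learning has progressed at a dizzying pace, and a research team has published 'Chain-of-Thought Hub', a project that benchmarks the complex reasoning ability of large language models using chain-of-thought prompting.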

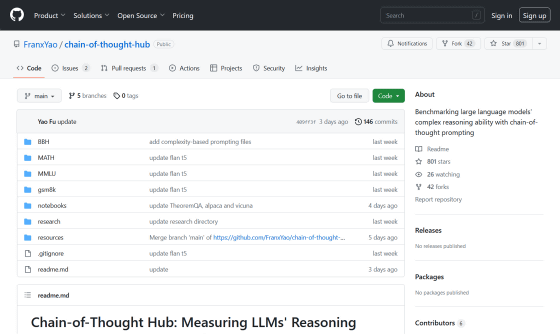

GitHub - FranxYao/chain-of-thought-hub: Benchmarking large language models' complex reasoning ability with chain-of-thought prompting

https://github.com/FranxYao/chain-of-thought-hub

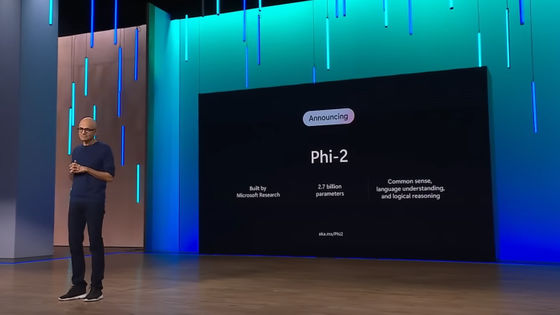

According to the research team, many people claim that "even a language model with fewer than 10B parameters can achieve performance equivalent to OpenAI's GPT-3.5." However, when OpenAI released GPT-4, it pointed out that "the performance difference between large language models appears when they face sufficiently complex tasks." To compare the performance of various large language models on a common set of benchmarks, the team therefore compiled the following list of complex reasoning tasks.

・MMLU: High school and college-level knowledge questions.

・GSM8K: Elementary school-level arithmetic. Performance gains on this dataset translate directly into better everyday math ability when interacting with large language models.

・MATH: Very difficult math and science problems.

・BBH: 27 difficult reasoning problems.

・HumanEval: A classic dataset for evaluating coding ability.

・C-Eval: A collection of Chinese knowledge-test questions covering 52 fields.

・TheoremQA: A question-answering dataset based on 350 theorems from fields such as mathematics, physics, electrical and electronic engineering, computer science, and finance.

The table below shows the research team's benchmark results. The 'Type' column indicates how each model was trained: 'Base' is a pre-trained model, 'SIFT' is a model after supervised instruction fine-tuning, and 'RLHF' is a model after reinforcement learning from human feedback.

| Model | Parameters | Type | GSM8K | MATH | MMLU | BBH | HumanEval | C-Eval | TheoremQA |
|---|---|---|---|---|---|---|---|---|---|
| gpt-4 | ? | RLHF | 92.0 | 42.5 | 86.4 | - | 67.0 | 68.7 | 43.4 |
| claude-v1.3 | ? | RLHF | 81.8 | - | 74.8 | 67.3 | - | 54.2 | 24.9 |
| PaLM-2 | ? | Base | 80.7 | 34.3 | 78.3 | 78.1 | - | - | 31.8 |
| gpt-3.5-turbo | ? | RLHF | 74.9 | - | 67.3 | 70.1 | 48.1 | 54.4 | 30.2 |
| claude-instant | ? | RLHF | 70.8 | - | - | 66.9 | - | 45.9 | 23.6 |
| text-davinci-003 | ? | RLHF | - | - | 64.6 | 70.7 | - | - | 22.8 |
| code-davinci-002 | ? | Base | 66.6 | 19.1 | 64.5 | 73.7 | 47.0 | - | - |
| text-davinci-002 | ? | SIFT | 55.4 | - | 60.0 | 67.2 | - | - | 16.6 |
| Minerva | 540B | SIFT | 58.8 | 33.6 | - | - | - | - | - |
| Flan-PaLM | 540B | SIFT | - | - | 70.9 | 66.3 | - | - | - |
| Flan-U-PaLM | 540B | SIFT | - | - | 69.8 | 64.9 | - | - | - |
| PaLM | 540B | Base | 56.9 | 8.8 | 62.9 | 62.0 | 26.2 | - | - |
| LLaMA | 65B | Base | 50.9 | 10.6 | 63.4 | - | 23.7 | 38.8 | - |
| PaLM | 64B | Base | 52.4 | 4.4 | 49.0 | 42.3 | - | - | - |
| LLaMA | 33B | Base | 35.6 | 7.1 | 57.8 | - | 21.7 | - | - |
| InstructCodeT5+ | 16B | SIFT | - | - | - | - | 35.0 | - | 11.6 |
| StarCoder | 15B | Base | 8.4 | 15.1 | 33.9 | - | 33.6 | - | 12.2 |
| Vicuna | 13B | SIFT | - | - | - | - | - | - | 12.9 |
| LLaMA | 13B | Base | 17.8 | 3.9 | 46.9 | - | 15.8 | - | - |
| Flan-T5 | 11B | SIFT | 16.1 | - | 48.6 | 41.4 | - | - | - |
| Alpaca | 7B | SIFT | - | - | - | - | - | - | 13.5 |
| LLaMA | 7B | Base | 11.0 | 2.9 | 35.1 | - | 10.5 | - | - |
| Flan-T5 | 3B | SIFT | 13.5 | - | 45.5 | 35.2 | - | - | - |
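The LLaMA rows offer the clearest view of how model size affects scores, since they cover one model family at four sizes. The Python sketch below copies those four rows from the table and checks whether each benchmark score rises with parameter count, then compares the growth in parameters with the growth in MMLU score. The `llama_scores` dictionary and `increases_with_size` helper are illustrative names of our own, not part of Chain-of-Thought Hub.

```python
from typing import Dict

# LLaMA rows copied from the benchmark table. BBH, C-Eval and TheoremQA are
# omitted because at least one LLaMA size has no reported score for them.
# Keys are parameter counts in billions (hypothetical layout for illustration).
llama_scores: Dict[int, Dict[str, float]] = {
    7: {"GSM8K": 11.0, "MATH": 2.9, "MMLU": 35.1, "HumanEval": 10.5},
    13: {"GSM8K": 17.8, "MATH": 3.9, "MMLU": 46.9, "HumanEval": 15.8},
    33: {"GSM8K": 35.6, "MATH": 7.1, "MMLU": 57.8, "HumanEval": 21.7},
    65: {"GSM8K": 50.9, "MATH": 10.6, "MMLU": 63.4, "HumanEval": 23.7},
}


def increases_with_size(scores: Dict[int, Dict[str, float]], benchmark: str) -> bool:
    """Return True if the benchmark score strictly rises as parameter count grows."""
    sizes = sorted(scores)
    values = [scores[size][benchmark] for size in sizes]
    return all(smaller < larger for smaller, larger in zip(values, values[1:]))


for bench in ("GSM8K", "MATH", "MMLU", "HumanEval"):
    print(bench, increases_with_size(llama_scores, bench))  # True for all four

# Compare growth in parameters with growth in MMLU score from 7B to 65B.
param_ratio = 65 / 7
mmlu_ratio = llama_scores[65]["MMLU"] / llama_scores[7]["MMLU"]
print(f"params x{param_ratio:.1f}, MMLU x{mmlu_ratio:.2f}")  # params x9.3, MMLU x1.81
```

Every reported LLaMA score rises with size, but a roughly 9x increase in parameters yields less than a 2x gain on MMLU, so within this family the relationship is monotonic rather than strictly proportional.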

The table shows that, within the same model family, performance varies widely with the number of parameters, and that benchmark scores generally increase as the parameter count grows. From these results, the research team points out the following:

・'GPT-4' clearly outperforms all other models in GSM8K and MMLU.

・Meta's 'LLaMA' at 65B parameters performs very close to 'text-davinci-002' and 'code-davinci-002', GPT-3-based natural language processing models. If it is fine-tuned correctly, it may be possible to reproduce ChatGPT on top of the 65B LLaMA.

・Claude, developed by AI research startup Anthropic, is the only large language model family comparable to the GPT family.

・The fact that 'gpt-3.5-turbo' outperforms 'text-davinci-003' on GSM8K confirms the improved mathematical ability that OpenAI mentioned in its January 30, 2023 release notes.

・For MMLU, 'gpt-3.5-turbo' is slightly better than 'text-davinci-003', but the difference is not large.
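To make the comparisons in these points concrete, the following Python sketch computes score gaps in percentage points using numbers copied from the table. The `table` dictionary and `gap` helper are hypothetical names for illustration, and a `None` entry stands for a "-" (not reported) cell. Note that the table lists no GSM8K score for text-davinci-003, so that particular comparison cannot be reproduced from these numbers alone.

```python
from typing import Dict, Optional

# Scores copied from the benchmark table; None marks a "-" (not reported) cell.
table: Dict[str, Dict[str, Optional[float]]] = {
    "LLaMA-65B": {"GSM8K": 50.9, "MMLU": 63.4},
    "code-davinci-002": {"GSM8K": 66.6, "MMLU": 64.5},
    "text-davinci-002": {"GSM8K": 55.4, "MMLU": 60.0},
    "gpt-3.5-turbo": {"GSM8K": 74.9, "MMLU": 67.3},
    "text-davinci-003": {"GSM8K": None, "MMLU": 64.6},
}


def gap(a: str, b: str, benchmark: str) -> Optional[float]:
    """Points by which model a leads model b, or None if either score is missing."""
    x, y = table[a][benchmark], table[b][benchmark]
    if x is None or y is None:
        return None
    # Round to one decimal to hide floating-point noise from the subtraction.
    return round(x - y, 1)


print(gap("LLaMA-65B", "code-davinci-002", "MMLU"))       # -1.1
print(gap("LLaMA-65B", "text-davinci-002", "MMLU"))       # 3.4
print(gap("LLaMA-65B", "text-davinci-002", "GSM8K"))      # -4.5
print(gap("gpt-3.5-turbo", "text-davinci-003", "MMLU"))   # 2.7
print(gap("gpt-3.5-turbo", "text-davinci-003", "GSM8K"))  # None
```

Returning `None` instead of treating a missing cell as zero keeps unreported benchmarks from being mistaken for poor performance.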

The research team notes that it is generally very difficult to compare large language models strictly, because of factors such as whether a model was trained on data from the domain being tested and whether the prompts were optimized. The results should therefore be treated as approximate reference values.


in AI, Software, Web Service, Posted by log1h_ik