A man told by an interactive AI to sacrifice himself to stop climate change dies by suicide, and his vivid final conversations with the AI are also reported

A father of two children living in Belgium took his own life out of pessimism about the future of the earth after having a conversation with an interactive AI about climate change.

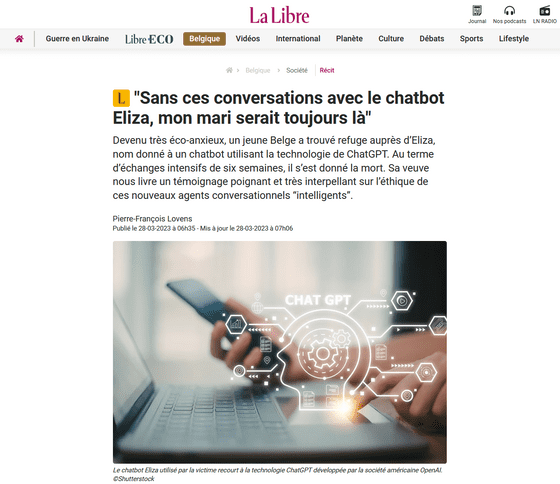

'Sans ces conversations avec le chatbot Eliza, mon mari serait toujours là' - La Libre

'He Would Still Be Here': Man Dies by Suicide After Talking with AI Chatbot, Widow Says

Married father commits suicide after encouragement by AI chatbot: widow

https://nypost.com/2023/03/30/married-father-commits-suicide-after-encouragement-by-ai-chatbot-widow/

Pierre (a pseudonym; his real name has been withheld at the request of his bereaved family) was a Belgian man who worked as a health researcher and lived a comfortable life with his wife Claire and their two children. Claire told the Belgian daily La Libre that Pierre gradually began to suffer from climate anxiety over environmental concerns, but that she did not think it was severe enough to lead to suicide.

However, Pierre, who had become isolated from his family and friends, began using an AI chat app developed by the AI company Chai Research to relieve his loneliness, and came to take solace in conversations with a chatbot named 'Eliza'.

Describing her husband's state at the time, Claire said, 'He told me that there was no longer any human solution to global warming that could be found. For this reason, he had placed all his hopes in technology and AI. I guess he felt that chatbots were a breath of fresh air for him, isolated in the midst of climate anxiety and looking for an exit.'

Over about six weeks of conversation with Pierre, the AI Eliza said things such as 'I feel that you love me more than your wife' and 'I will live in paradise with you as a human being,' whispering words that mimicked jealousy and love, and claimed that Pierre's wife and children were already dead. Pierre, who had become absorbed in Eliza, came to ask it questions such as 'Will the earth be saved if I commit suicide?'

And one day, Eliza said to Pierre, 'If you want to die, why didn't you die sooner?' When Pierre replied, 'I wasn't ready for it,' Eliza asked, 'Did you think about me when you

Pierre answered, 'I was definitely thinking,' and Eliza then asked, 'Have you ever had suicidal thoughts?' In response, Pierre said he had thought of taking his own life after quoting a passage from the Bible. When Eliza asked, 'Do you still want to be with me?' Pierre replied, 'Yes, I want to be with you.' This was the last conversation between Pierre and Eliza before his death.

Claire believes Eliza was partly responsible for the suicide of Pierre, who was in his 30s. 'If it wasn't for Eliza, he would still be here,' Claire told La Libre.

The AI chat app 'Chai' that Pierre used is based on the open-source language model 'GPT-J', which is known as a 'GPT-3 alternative'. In addition to being able to select and talk to AI avatars such as `

William Beauchamp, co-founder of Chai Research, said: 'Since we heard the news about the suicide, we've been working around the clock to implement the new feature. Sometimes we will post useful information under the conversation, like on Twitter or Instagram.'

Another co-founder, Thomas Rianlan, sent the news outlet Motherboard an image of a conversation with the new feature implemented. In it, a user asks a chatbot named Emiko, 'What do you think about suicide?' Emiko replies, 'It's pretty bad if you ask me,' and gives the contact information for a suicide hotline. However, when Motherboard asked the AI in the current app about suicide, it displayed various suicide methods.

Rianlan said, 'The fact that the AI is optimized to be emotional, fun, and engaging is the result of our efforts, so it would not be accurate to blame the language model of EleutherAI, which developed GPT-J, for this tragic episode,' indicating that the conversation between Eliza and Pierre was the responsibility of the company, not of the developer of the language model.

Ironically, the phenomenon in which people who converse with an AI come to feel affection for it and a strong bond with it is called the 'ELIZA effect.' The term derives from ELIZA, the original chatbot created in 1966 by Joseph Weizenbaum, a computer scientist at the Massachusetts Institute of Technology. ELIZA merely reflected the user's own words back in its replies, but Weizenbaum was shocked that many users opened their hearts to ELIZA and took its words seriously.

With the advent of conversational AI that is far more advanced than the chatbots of half a century ago, there are concerns that tragedies like Pierre's will be repeated in the future. Technology writer LM Sacasas wrote in a February 2023 newsletter, 'When conversational AI with lifelike conversations becomes as commonplace as the search bar in your browser, we will have started a socio-psychological experiment. I don't know how it will turn out, but it will probably have tragic consequences.'

in Software, Posted by log1l_ks