AI-assisted suicide counseling chat service found to be profiting from sharing user data with a company

It has come to light that a suicide counseling service shares user data with a for-profit company. The company builds and sells customer service software based on that data, and the counseling service receives a portion of the software's revenue.

Suicide hotline shares data with for-profit spinoff, raising ethical questions --POLITICO

Crisis Text Line shares data with Loris.ai --Protocol — The people, power and politics of tech

https://www.protocol.com/bulletins/crisis-text-line-lorisai

Crisis Text Line is one of the world's best-known mental health support chat services. It is a non-profit organization that uses big data and artificial intelligence (AI) to counsel people dealing with trauma such as self-harm, emotional abuse, and suicidal thoughts.

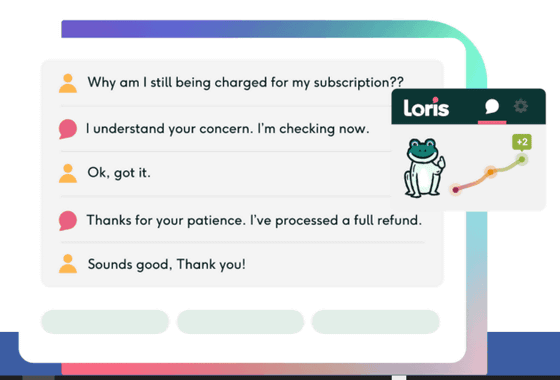

However, it was revealed that Crisis Text Line collects the content of its online text conversations with users and shares this information with a for-profit company, Loris. Loris uses the data obtained from Crisis Text Line to build customer service software. Crisis Text Line also has a revenue-sharing agreement with Loris, under which it receives part of the proceeds from sales of that software.

Crisis Text Line says that the data shared with Loris is 'fully anonymized, with all information that could identify the person seeking help removed,' and that 'every texter has given consent.' It also says that its data-sharing practices are clearly stated in its Terms of Service and Privacy Policy.

Privacy experts, on the other hand, point out that 'it is easy to identify an individual from an anonymized dataset,' and that anonymization does not guarantee users cannot be identified. Individuals have in fact been identified from web browsing histories, and authors have been identified from anonymous source code, so it cannot be assumed that users can never be identified from the data Crisis Text Line shares.

'Crisis Text Line may have legal consent to share user data, but is it actually meaningful, emotionally and fully understood consent from the user?' said Jennifer King, a privacy expert at Stanford University, questioning whether people who contact a suicide counseling service in search of help will really read Crisis Text Line's Terms of Service.

In addition, a former Crisis Text Line volunteer has started a petition on Change.org calling on Crisis Text Line to reform its data-sharing practices. At the time of writing, 222 people had signed it.

Campaign · Ask Crisis Text Line to Reform Its Data Ethics · Change.org

https://www.change.org/p/crisis-text-line-ask-crisis-text-line-to-stop-monetizing-conversations-as-data

in Software, Web Service, Posted by logu_ii