ChatGPT turns out to be bad at the word puzzle game 'Wordle'

OpenAI's interactive AI 'ChatGPT' can not only answer questions from humans very naturally, but also pass

ChatGPT struggles with Wordle puzzles, which says a lot about how it works

https://theconversation.com/chatgpt-struggles-with-wordle-puzzles-which-says-a-lot-about-how-it-works-201906

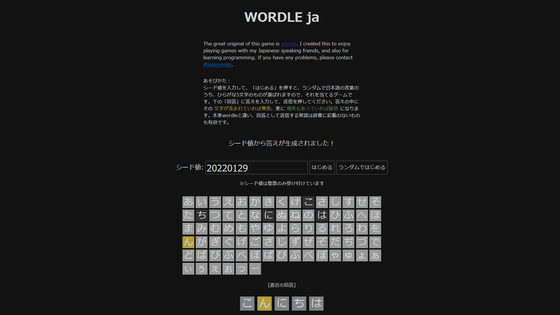

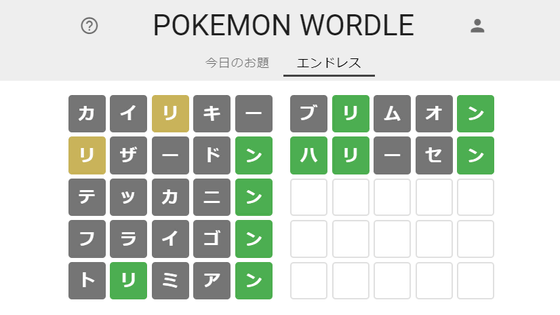

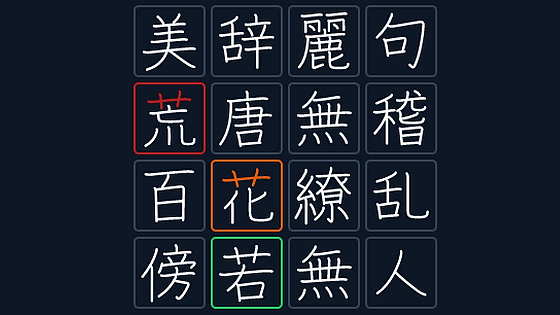

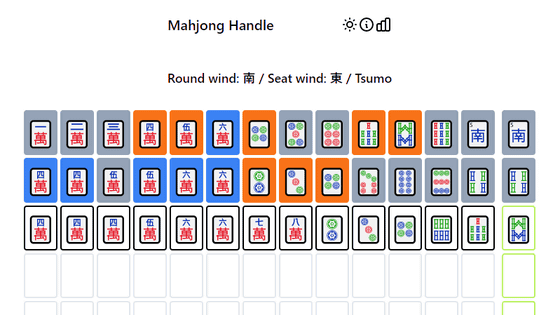

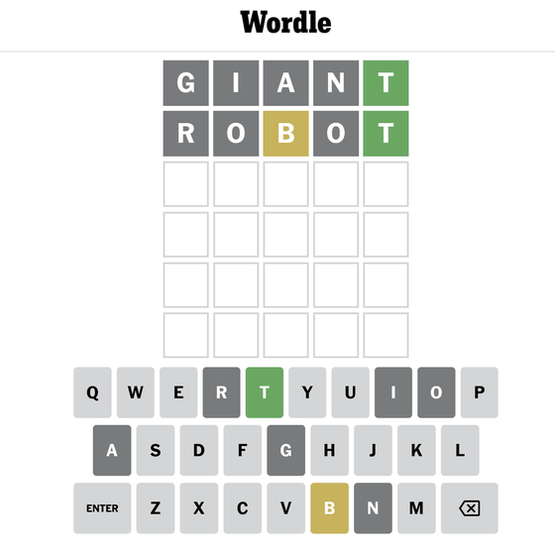

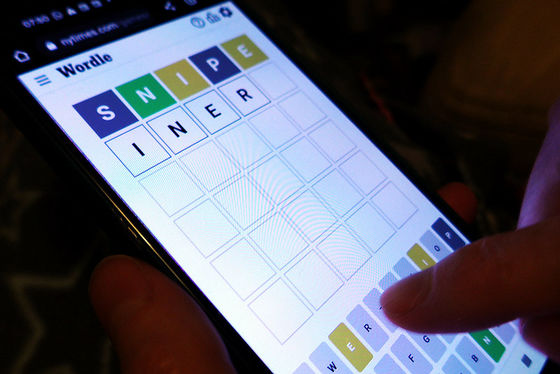

In Wordle, you guess a hidden word knowing only how many letters it has. After each guess, a letter that appears somewhere in the answer turns yellow, and a letter in the correct position turns green. Using these hints, you try to deduce the five-letter answer within six guesses. The English version can be played on the website of The New York Times, which acquired Wordle. There is also a Japanese version of Wordle, which uses roughly twice as many characters and allows eight guesses, and a fan-made game, Pokemon WORDLE, in which you guess the name of a Pokemon.
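The coloring rule can be made concrete with a small scoring function. This is a minimal sketch, not the official game code: it assumes 'G' for green, 'Y' for yellow and '.' for gray, and uses the common handling of repeated letters, where each unmatched copy of a letter in the answer can earn at most one yellow.

```python
from collections import Counter

def score_guess(guess: str, answer: str) -> str:
    """Return Wordle-style feedback: 'G' green, 'Y' yellow, '.' gray."""
    guess, answer = guess.lower(), answer.lower()
    result = ["."] * len(guess)
    # Count answer letters that were NOT matched exactly; only these can give yellows.
    remaining = Counter()
    # First pass: mark greens (right letter, right position).
    for i, (g, a) in enumerate(zip(guess, answer)):
        if g == a:
            result[i] = "G"
        else:
            remaining[a] += 1
    # Second pass: mark yellows, limited by how many unmatched copies remain.
    for i, g in enumerate(guess):
        if result[i] == "." and remaining[g] > 0:
            result[i] = "Y"
            remaining[g] -= 1
    return "".join(result)

print(score_guess("pearl", "merle"))  # .G.YY
```

Doing greens before yellows matters: otherwise a repeated letter in the guess could claim a yellow that should go to a green elsewhere.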

The trick to playing Wordle is to keep coming up with words made up of five different letters, to get hints about which letters are or are not used. Once you know which letters appear, or where they go, you then need to think of a five-letter word that fits those constraints. The usable letters become more limited as you progress, and it is hard to look up suitable words in a dictionary or on the Internet, so the game demands vocabulary and creativity. Professor Madden tried to clear Wordle by borrowing the power of ChatGPT.
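Narrowing down candidates from earlier hints can be expressed as a filter: a word is still possible only if it would have produced exactly the same colors for every previous guess. The sketch below assumes a tiny hand-picked word list (not a real Wordle dictionary) and the same 'G'/'Y'/'.' notation for green, yellow and gray.

```python
from collections import Counter

def score_guess(guess, answer):
    """Wordle feedback: 'G' green, 'Y' yellow, '.' gray."""
    result = ["."] * len(guess)
    # Answer letters not matched exactly are the only ones that can give yellows.
    remaining = Counter(a for g, a in zip(guess, answer) if g != a)
    for i, (g, a) in enumerate(zip(guess, answer)):
        if g == a:
            result[i] = "G"
    for i, g in enumerate(guess):
        if result[i] == "." and remaining[g] > 0:
            result[i] = "Y"
            remaining[g] -= 1
    return "".join(result)

def is_consistent(candidate, history):
    """True if candidate would have produced every feedback string seen so far."""
    return all(score_guess(guess, candidate) == fb for guess, fb in history)

words = ["merle", "pearl", "revel", "lever", "feral", "hello"]  # toy list
history = [("pearl", ".G.YY")]  # guessed 'pearl', got gray-green-gray-yellow-yellow
print([w for w in words if is_consistent(w, history)])  # ['merle', 'lever']
```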

Professor Madden first tested ChatGPT on a case where two letter positions were known, '?E?L?': the second letter is 'E' and the fourth letter is 'L'. ChatGPT gave six answers, 'beryl', 'feral', 'heral', 'merle', 'revel' and 'pearl', five of which did not meet the conditions. When he repeatedly asked similar questions, ChatGPT sometimes found valid answers depending on the positions of the specified letters, but overall it was very hit-or-miss and unreliable, and Professor Madden says it sometimes even suggested words that do not exist in the dictionary.
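Checking ChatGPT's six answers against the pattern is mechanical for a program. In this minimal sketch, '?' stands for any letter and every other character must match at its position; it checks positions only, not whether a word is in the dictionary (so it would not catch a non-word such as 'heral' if it happened to fit).

```python
def matches_pattern(word: str, pattern: str) -> bool:
    """'?' matches any letter; other characters must match at that position."""
    return len(word) == len(pattern) and all(
        p == "?" or p == c for p, c in zip(pattern.lower(), word.lower())
    )

answers = ["beryl", "feral", "heral", "merle", "revel", "pearl"]
for w in answers:
    print(w, matches_pattern(w, "?E?L?"))
# Only 'merle' has E in position 2 and L in position 4.
```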


Professor Madden explains why ChatGPT can't play Wordle properly. The large language model (LLM) behind this conversational AI was trained on about 500 billion words of text, which gives it overwhelming ability with language and sentences. However, according to Professor Madden, the core of ChatGPT is a deep neural network, a complex mathematical function that maps inputs to outputs, so both the inputs and the outputs must be numbers. ChatGPT therefore handles words by 'translating' them into numbers.

Converting words into numbers for the deep neural network is done by a program called a 'tokenizer', which maintains a huge list of words and character strings called 'tokens'. Each token is identified by a number. For example, the word 'friend' has the token ID 6756 and 'ship' has the token ID 6729, so a word like 'friendship' is split into the tokens 'friend' and 'ship' and represented by the IDs 6756 and 6729. When a user types a question into ChatGPT, the words are first converted into numeric tokens, and only those numbers are processed to produce the answer. Because the deep neural network never sees the words as text, it cannot actually reason about individual letters.
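A toy tokenizer makes the point visible. This sketch uses a hypothetical two-entry vocabulary holding only the IDs quoted above and a simple greedy longest-match split; real tokenizers (such as the byte-pair-encoding ones used with OpenAI models) have far larger vocabularies and a different algorithm, so treat this as an illustration only.

```python
# Hypothetical toy vocabulary: only the two IDs quoted in the article.
TOY_VOCAB = {"friend": 6756, "ship": 6729}

def tokenize(text: str, vocab: dict) -> list:
    """Greedy longest-match split of text into known tokens (illustration only)."""
    ids = []
    i = 0
    while i < len(text):
        # Try the longest remaining substring first, then shorter ones.
        for j in range(len(text), i, -1):
            piece = text[i:j]
            if piece in vocab:
                ids.append(vocab[piece])
                i = j
                break
        else:
            raise ValueError(f"no token covers {text[i:]!r}")
    return ids

print(tokenize("friendship", TOY_VOCAB))  # [6756, 6729]
```

The model would receive only `[6756, 6729]`; nothing in those two numbers says that 'f' is the first letter or 'p' the last.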

Still, ChatGPT is good at writing acrostics, where the initial letters of each line spell something out, like the Japanese 'AIUEO Sakubun'. Professor Madden speculates that this is because ChatGPT's training data contains a huge amount of text that often includes alphabetical indexes, so ChatGPT may have learned to associate words with their initial letters. However, ChatGPT is poor at requests involving the last letter of a word, where that association is weak, and at palindromes, which read the same forwards and backwards.
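Why letter-level tasks such as palindromes are awkward for a token-based model can be illustrated with the same hypothetical two-token vocabulary. This is an illustrative sketch, not a description of ChatGPT's internals: reversing the sequence of token IDs only swaps whole chunks, while a palindrome check or a "last letter" question needs the individual characters.

```python
# Hypothetical toy vocabulary reused from the tokenizer illustration.
TOY_VOCAB = {"friend": 6756, "ship": 6729}
ID_TO_TOKEN = {v: k for k, v in TOY_VOCAB.items()}

ids = [6756, 6729]  # "friendship" as token IDs
# Reversing the token sequence only swaps whole chunks...
reversed_tokens = "".join(ID_TO_TOKEN[t] for t in reversed(ids))
# ...whereas letter-level tasks need the characters themselves.
text = "".join(ID_TO_TOKEN[t] for t in ids)
reversed_letters = text[::-1]

def is_palindrome(word: str) -> bool:
    return word == word[::-1]

print(reversed_tokens)   # shipfriend
print(reversed_letters)  # pihsdneirf
print(text[-1])          # p  (the last letter is only visible after decoding)
print(is_palindrome("level"), is_palindrome(text))  # True False
```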

Based on these points, Professor Madden explains why ChatGPT, which has presumably seen almost every English word, is still bad at Wordle: "Since words are processed as numeric tokens, it is not possible to analyze which letters are where in a word." He adds that the training data contains many dictionaries and indexes organized by initial letter, but almost none that refer to the positions of other letters. On the other hand, Professor Madden also proposes tricks for beating Wordle with ChatGPT despite this characteristic.

The first method follows from the cause: ChatGPT's strength with initial letters and its weakness with other letter positions are thought to stem from the content of its training data. The solution would therefore likely be to extend the training data with mappings of letter positions for every word in the dictionary.
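What such position-mapping training text might look like can be sketched in a few lines. The sentence format below is a hypothetical choice; the article does not say how this data would actually be produced or formatted.

```python
def position_facts(word: str) -> list:
    """Hypothetical training sentences spelling out each letter's position."""
    return [f"Letter {i} of '{word}' is '{ch}'." for i, ch in enumerate(word, start=1)]

for line in position_facts("merle"):
    print(line)
# First line printed: Letter 1 of 'merle' is 'm'.
```

Running this over a whole word list would yield the kind of explicit position-by-letter associations that, by Professor Madden's reasoning, are rare in ordinary text.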

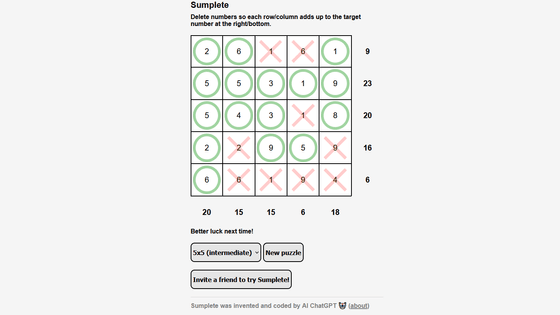

Besides conversation and exam questions, ChatGPT is so good at programming that it can finish in five minutes work that would otherwise take several days. So, as a second method, Professor Madden asked ChatGPT to "write a program to identify missing letters in Wordle". The program it produced worked after he fixed a few bugs, and it suggested 48 words matching the pattern '?E?L?'. Having an LLM call on external tools for work it is usually bad at is the idea behind 'Toolformer', which is attracting attention as a pointer toward the further development of AI technology.
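A program of the kind Professor Madden asked for can be quite short. The sketch below is not the program ChatGPT wrote for him: it turns a pattern such as '?E?L?' into a regular expression and filters a word list. The sample list is a hypothetical stand-in; a real run would read a full dictionary file, and the 48 matches reported in the article came from his own word list, which this sketch does not reproduce.

```python
import re

def pattern_to_regex(pattern: str) -> re.Pattern:
    """Turn a Wordle pattern like '?E?L?' into a regex ('?' = any letter a-z)."""
    body = "".join("[a-z]" if ch == "?" else re.escape(ch.lower()) for ch in pattern)
    return re.compile(body)

def find_matches(pattern: str, words) -> list:
    """Return the sorted, de-duplicated words that fit the pattern exactly."""
    rx = pattern_to_regex(pattern)
    return sorted({w.lower() for w in words if rx.fullmatch(w.lower())})

# Hypothetical sample list standing in for a real dictionary.
sample = ["Merle", "hello", "belly", "pearl", "jelly", "dealt", "heals", "teals", "well"]
print(find_matches("?E?L?", sample))
```

Using `fullmatch` rather than `match` ensures words longer than five letters are rejected.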


in Web Service, Posted by log1e_dh