An attempt to create a pseudo 3D space using only a webcam

Today, advanced and inexpensive VR (virtual reality) headsets make it easy to enjoy a full-fledged VR space at home. Before VR devices became common, however, there were attempts to reproduce a VR-like space by simulating 3D images with sensors and cameras. Shopify's official blog, Spatial Commerce Projects, has written about this "pseudo 3D image" technique.

Could we make the web more immersive using a simple optical illusion? – Spatial Commerce Projects – A Shopify lab exploring the crossroads of spatial computing and commerce; creating concepts, prototypes, and tools.

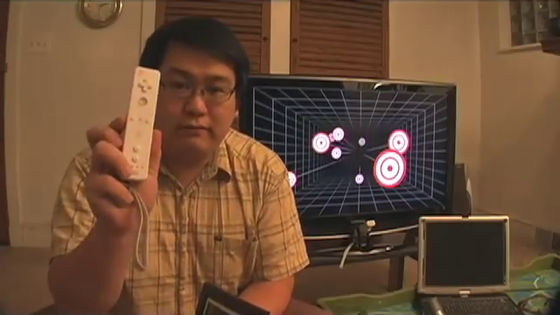

In 2007, engineer Johnny Lee demonstrated a pseudo 3D imaging technique built on the Wii Remote and its sensor bar. The following video shows how it works.

Head Tracking for Desktop VR Displays using the WiiRemote - YouTube

Mr. Lee is holding a Wii Remote in his right hand.

Normally, the Wii Remote picks up the infrared light emitted by a sensor bar placed by the TV and uses it to determine the Wii Remote's own position. Mr. Lee's system reverses these roles: the receiver, the Wii Remote, is placed at the TV screen, and the emitter, the sensor bar, is attached to the user.

For example, by fixing a sensor bar to the brim of a hat, it is possible to capture the position of the head.
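The geometry behind this reversed setup can be sketched as follows: the Wii Remote's camera sees the two infrared sources as two dots, their separation in the image tells how far away the head is, and their midpoint tells where the head is horizontally and vertically. This is a minimal sketch of that idea, not Mr. Lee's code. The camera resolution (1024x768) and roughly 45-degree field of view are commonly reported figures used here as assumptions, and `LED_SPACING_MM` is a hypothetical measurement of the sensor bar.

```python
import math

# Assumptions (not from the article): the Wii Remote IR camera reports dots on a
# 1024x768 grid with roughly a 45-degree horizontal field of view.
CAM_WIDTH_PX = 1024
CAM_HEIGHT_PX = 768
H_FOV_RAD = math.radians(45.0)
LED_SPACING_MM = 200.0  # hypothetical distance between the two IR sources on the hat

# Small-angle approximation: every pixel covers the same angle in x and y.
RAD_PER_PX = H_FOV_RAD / CAM_WIDTH_PX


def head_position(dot1, dot2):
    """Estimate the head position (mm) relative to the Wii Remote from two IR dots (px)."""
    separation_px = math.hypot(dot1[0] - dot2[0], dot1[1] - dot2[1])
    # Angle subtended by the two IR sources as seen from the camera.
    angle = separation_px * RAD_PER_PX
    # The sources are a known distance apart, so the angle determines the distance.
    distance = (LED_SPACING_MM / 2) / math.tan(angle / 2)
    # Offset of the dots' midpoint from the image center, converted to angles.
    mid_x = (dot1[0] + dot2[0]) / 2 - CAM_WIDTH_PX / 2
    mid_y = (dot1[1] + dot2[1]) / 2 - CAM_HEIGHT_PX / 2
    x = math.sin(mid_x * RAD_PER_PX) * distance
    y = math.sin(mid_y * RAD_PER_PX) * distance
    return x, y, distance
```

Because the dot spacing shrinks as the head moves away, the same formula gives a larger distance for dots that are closer together in the image.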

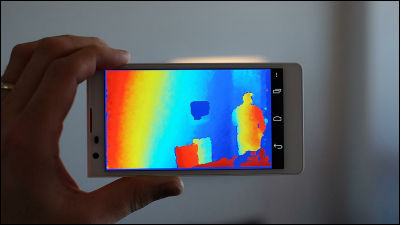

For the demonstration, a video camera is held in the hand together with the sensor and used to film the monitor screen.

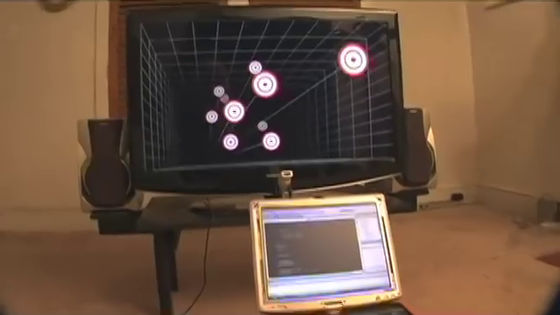

The objects on the screen then appear to pop out of it. When the camera moves, the view of the objects shifts with the viewpoint, and the perspective is reproduced accordingly.

Mr. Lee's demonstration was highly praised around the world, and he went on to be involved in the development of Microsoft's 3D sensor "Kinect" and in Google's 3D capture development project.

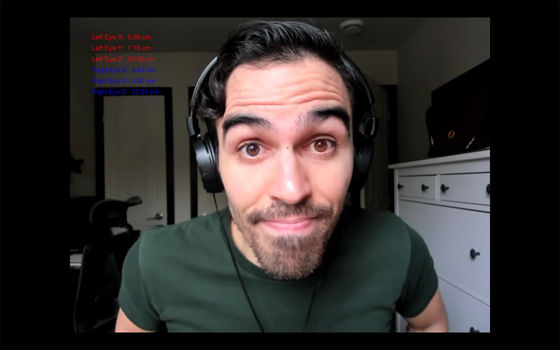

To achieve pseudo 3D with a webcam, you need to calculate exactly where the eyes are relative to the webcam and update what is displayed on the screen accordingly. In Mr. Lee's case, the position of the head is identified accurately using the Wii Remote's infrared camera and the sensor bar's infrared LEDs.

However, even once the head has been located, the position of the eyes varies from person to person. VR headsets face the same issue: you adjust the goggles to fit your eyes and set the distance between the left and right lenses to match your interpupillary distance. The simplest way to locate the eyes with a webcam is to use a library such as Google's MediaPipe Face Mesh.

MediaPipe Face Mesh detects the top, bottom, left, and right edges of each iris.

From these points, the interpupillary distance and the iris size can be measured in pixels. The calculation assumes an average iris diameter of 11.7 mm.
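Turning those edge points into measurements can be sketched like this, assuming the four edge points of each iris have already been read out of the landmark results as pixel coordinates (which landmark indices to use is not covered here). Because the iris is taken to be 11.7 mm across, its size in pixels gives a millimetres-per-pixel scale at the depth of the face, which converts the pixel interpupillary distance into millimetres. The function names are illustrative, not part of MediaPipe.

```python
import math

Point = tuple[float, float]
IRIS_DIAMETER_MM = 11.7  # average iris diameter assumed in the article


def iris_diameter_px(top: Point, bottom: Point, left: Point, right: Point) -> float:
    """Average of the vertical and horizontal iris extents, in pixels."""
    return (math.dist(top, bottom) + math.dist(left, right)) / 2


def iris_center(top: Point, bottom: Point, left: Point, right: Point) -> Point:
    """Mean of the four edge points."""
    pts = (top, bottom, left, right)
    return sum(p[0] for p in pts) / 4, sum(p[1] for p in pts) / 4


def interpupillary_distance_px(left_eye, right_eye) -> float:
    """Each eye is a (top, bottom, left, right) tuple of iris edge points."""
    return math.dist(iris_center(*left_eye), iris_center(*right_eye))


def interpupillary_distance_mm(left_eye, right_eye) -> float:
    # Both irises are assumed to be at roughly the same depth, so their
    # average pixel size gives one mm-per-pixel scale for the whole face.
    avg_diameter_px = (iris_diameter_px(*left_eye) + iris_diameter_px(*right_eye)) / 2
    mm_per_px = IRIS_DIAMETER_MM / avg_diameter_px
    return interpupillary_distance_px(left_eye, right_eye) * mm_per_px
```

For example, irises 20 px across whose centers are 100 px apart correspond to an interpupillary distance of about 58.5 mm.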

Furthermore, since the real size of the iris is known, the distance between the webcam and the face can be determined from how large the iris appears in the camera image, given the camera's focal length.
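Under a simple pinhole-camera model, this is a similar-triangles calculation: an object of real size D at distance Z appears f * D / Z pixels wide, where f is the focal length in pixels, so Z = f * D / d for an apparent size of d pixels. A minimal sketch, assuming the focal length in pixels is already known (for example from a camera calibration) and the iris roughly faces the camera:

```python
IRIS_DIAMETER_MM = 11.7  # average iris diameter assumed in the article


def camera_to_face_distance_mm(iris_diameter_px: float, focal_length_px: float) -> float:
    """Pinhole-camera estimate of the iris's distance along the camera's optical axis."""
    if iris_diameter_px <= 0:
        raise ValueError("iris diameter must be positive")
    # Similar triangles: diameter_px / focal_px == diameter_mm / distance_mm
    return focal_length_px * IRIS_DIAMETER_MM / iris_diameter_px
```

With a focal length of 1000 px, an iris 20 px across is about 585 mm away; if the iris shrinks to 10 px, the estimate doubles.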

With this, the webcam can continuously track the position of the eyes.
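The distance alone only gives depth. One way to get the eye's full 3D position relative to the webcam is to back-project the iris center's pixel coordinates through the pinhole model. This sketch assumes the intrinsic parameters fx, fy (focal lengths in pixels) and cx, cy (principal point) are known, uses the common computer-vision axis convention (x right, y down, z pointing out of the camera), and treats the iris-based distance as the depth along z.

```python
def eye_position_mm(u, v, depth_mm, fx, fy, cx, cy):
    """Back-project pixel (u, v) at a known depth into camera coordinates (mm)."""
    # Invert the pinhole projection u = fx * X / Z + cx, v = fy * Y / Z + cy.
    x = (u - cx) * depth_mm / fx
    y = (v - cy) * depth_mm / fy
    return x, y, depth_mm
```

An iris detected exactly at the principal point lies straight ahead of the camera; one detected fx pixels to the right of it at a depth of 600 mm is 600 mm to the side.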

After tracking the eyes, the system also needs the webcam's intrinsic parameters, such as its focal length, to reproduce the pseudo 3D image. One way to obtain them is to print a checkerboard pattern like the one below on paper, take about 50 photos of it with the webcam, and analyze the photos with an image processing library to measure the webcam's intrinsic parameters.

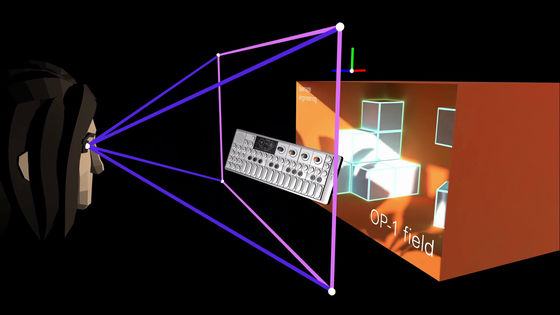
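The article does not name the library; OpenCV's `cv2.findChessboardCorners` and `cv2.calibrateCamera` are a common choice. To show what such a calibration computes, here is a minimal numpy sketch of the closed-form step of Zhang's method, the classic technique for calibrating from several photos of a flat checkerboard. It assumes the corners have already been detected, ignores lens distortion, and skips the nonlinear refinement a real library performs, so treat it as an illustration rather than a replacement.

```python
import numpy as np


def homography(src, dst):
    """DLT estimate of the 3x3 homography H with dst ~ H @ [x, y, 1]."""
    rows = []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows))
    return vt[-1].reshape(3, 3)


def _v(H, i, j):
    # Coefficients of h_i^T B h_j in terms of b = (B11, B12, B22, B13, B23, B33).
    a, c = H[:, i], H[:, j]
    return np.array([
        a[0] * c[0],
        a[0] * c[1] + a[1] * c[0],
        a[1] * c[1],
        a[2] * c[0] + a[0] * c[2],
        a[2] * c[1] + a[1] * c[2],
        a[2] * c[2],
    ])


def intrinsics_from_homographies(Hs):
    """Zhang's closed-form solution for K; needs at least three board poses."""
    V = []
    for H in Hs:
        V.append(_v(H, 0, 1))                # rotation columns are orthogonal
        V.append(_v(H, 0, 0) - _v(H, 1, 1))  # ... and have equal length
    _, _, vt = np.linalg.svd(np.asarray(V))
    B11, B12, B22, B13, B23, B33 = vt[-1]
    v0 = (B12 * B13 - B11 * B23) / (B11 * B22 - B12 ** 2)
    lam = B33 - (B13 ** 2 + v0 * (B12 * B13 - B11 * B23)) / B11
    alpha = np.sqrt(lam / B11)
    beta = np.sqrt(lam * B11 / (B11 * B22 - B12 ** 2))
    gamma = -B12 * alpha ** 2 * beta / lam
    u0 = gamma * v0 / beta - B13 * alpha ** 2 / lam
    return np.array([[alpha, gamma, u0], [0.0, beta, v0], [0.0, 0.0, 1.0]])


def calibrate(board_pts, views, image_size):
    """Estimate K from board corners (board units) and their pixel positions per photo."""
    w, h = image_size
    s = float(max(w, h))
    # Normalize pixels for numerical stability: p_n = N @ p.
    N = np.array([[1 / s, 0.0, -w / (2 * s)], [0.0, 1 / s, -h / (2 * s)], [0.0, 0.0, 1.0]])
    board = np.asarray(board_pts, dtype=float)
    board = board / np.abs(board).max()  # rescaling the board does not change K
    Hs = []
    for img in views:
        img_n = (np.asarray(img, dtype=float) - [w / 2, h / 2]) / s
        Hs.append(homography(board, img_n))
    K_n = intrinsics_from_homographies(Hs)  # intrinsics in normalized pixels
    K = np.linalg.inv(N) @ K_n
    return K / K[2, 2]
```

The returned matrix holds the focal lengths (`K[0, 0]`, `K[1, 1]`) and principal point (`K[0, 2]`, `K[1, 2]`) in pixels, which are the values the distance and back-projection steps need. Taking many photos, as the article suggests, mainly helps the refinement and distortion estimation that this sketch leaves out.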

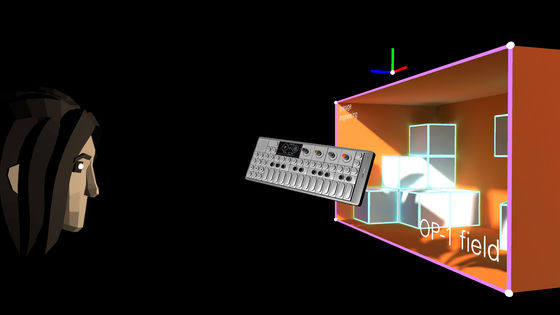

Once the webcam is set up, prepare the pseudo 3D content. For example, suppose the content is displayed on the screen outlined by the pink frame in the image below.

In the game engine, the face and the screen are placed in the same positional relationship as in reality. By calculating the position of the face and eyes relative to the monitor and rendering the scene from that viewpoint, the space appears to extend inside the monitor.
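A standard way to render "through" the monitor from a tracked eye is an off-axis (asymmetric frustum) projection: the virtual camera sits at the eye, and its view frustum is stretched so its edges pass through the edges of the physical screen. The article does not show its engine setup, so this is a minimal sketch under stated assumptions: the eye position has already been converted from webcam coordinates into screen coordinates (origin at the screen center, x right, y up, z toward the viewer, millimetres), and the matrices follow the OpenGL `glFrustum` convention.

```python
import numpy as np


def off_axis_projection(eye, screen_w, screen_h, near=10.0, far=10000.0):
    """Projection and view matrices for an eye at (x, y, z) in front of the screen."""
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("the eye must be in front of the screen (z > 0)")
    # Project the physical screen edges onto the near plane as seen from the eye.
    scale = near / ez
    left = (-screen_w / 2 - ex) * scale
    right = (screen_w / 2 - ex) * scale
    bottom = (-screen_h / 2 - ey) * scale
    top = (screen_h / 2 - ey) * scale
    # Asymmetric frustum, same layout as OpenGL's glFrustum matrix.
    proj = np.array([
        [2 * near / (right - left), 0.0, (right + left) / (right - left), 0.0],
        [0.0, 2 * near / (top - bottom), (top + bottom) / (top - bottom), 0.0],
        [0.0, 0.0, -(far + near) / (far - near), -2 * far * near / (far - near)],
        [0.0, 0.0, -1.0, 0.0],
    ])
    # The camera keeps facing straight into the screen and only translates with the eye.
    view = np.eye(4)
    view[:3, 3] = [-ex, -ey, -ez]
    return proj, view


def project(proj, view, point):
    """Map a 3D point in screen coordinates to normalized device coordinates."""
    clip = proj @ view @ np.append(np.asarray(point, dtype=float), 1.0)
    return clip[:3] / clip[3]
```

The screen's corners always map to the corners of the rendered image, whatever the eye position, while objects behind the screen plane shift as the eye moves, as they would through a window. Game engines usually let you override a camera's projection matrix to get the same effect.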

However, this pseudo 3D technique has a major limitation. VR headsets reproduce binocular parallax by showing a separate image to each eye on left and right displays, whereas this technique only adjusts the field of view to the position of the face; it does not create stereoscopic vision through parallax. As a result, you only experience the "pseudo 3D" effect when you close one eye and look with the other. With both eyes open, the image on the monitor loses its depth and three-dimensionality.
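Simple ray geometry shows why a single image cannot satisfy both eyes. For a virtual point behind the screen, each eye needs it drawn where that eye's line of sight crosses the screen, and those two positions differ. This sketch is an illustration, not from the article; it assumes the screen plane is z = 0, the viewer is at z > 0, virtual objects are at z < 0, and all units are millimetres.

```python
def screen_hit(eye, point):
    """Where the ray from `eye` to `point` crosses the screen plane z = 0."""
    ex, ey, ez = eye
    px, py, pz = point
    t = ez / (ez - pz)  # fraction of the way from the eye to the point
    return ex + t * (px - ex), ey + t * (py - ey)


def on_screen_disparity(head, ipd, point):
    """Horizontal gap (mm) between where the left and right eye need the point drawn."""
    hx, hy, hz = head
    left = screen_hit((hx - ipd / 2, hy, hz), point)
    right = screen_hit((hx + ipd / 2, hy, hz), point)
    return right[0] - left[0]
```

With the head 600 mm from the screen and a 60 mm interpupillary distance, a point 600 mm behind the screen needs to be drawn 30 mm apart for the two eyes, while a point on the screen plane needs no separation. A single rendered image can match only one of those viewpoints, which is why closing one eye restores the effect.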

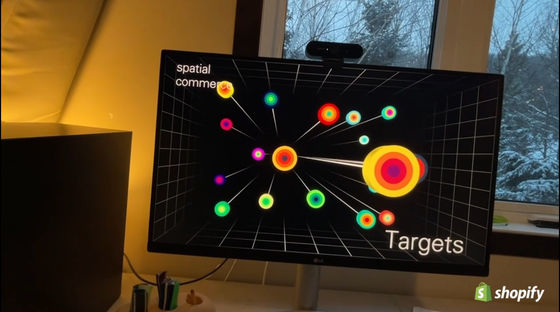

Still, when the monitor is filmed with a video camera, the footage shows a convincing sense of depth even though the displayed image is 2D.

Pseudo 3D with a webcam requires accurate measurement, and the three-dimensional effect only works with one eye closed. But thanks to WebAssembly, complex machine learning models can now be loaded and run with the click of a link, making it easy to track eyes, faces, and hands. Shopify writes: "It may be difficult to let people without VR headsets experience highly immersive 3D content, but we can't help but be excited about the future of the web."
