An attempt to reproduce Apple Vision Pro-style hand-tracking mouse control on Windows

Apple's spatial computing device, Apple Vision Pro, is operated using eye tracking and hand tracking. Engineer Reynaldi Chernando has developed a system that reproduces Apple Vision Pro's intuitive pinch-based cursor control and mouse input on Windows.

Hand Tracking for Mouse Input | Reynaldi's Blog

Chernando's goal was to make the hand function as an input device for the computer. For the implementation, he chose to mount a camera facing downward, assuming the typical situation in which the user's hands rest on the keyboard. To detect the positions of the hand and fingers, Chernando adopted MediaPipe, Google's suite of machine learning solutions.

The initial implementation used a Python version of MediaPipe, reading the camera feed with

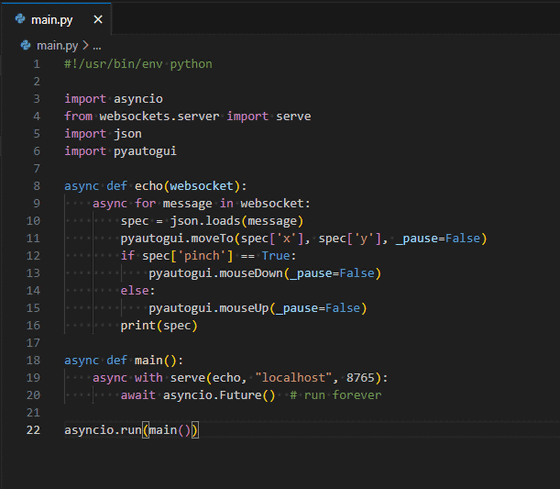

During his investigation, Chernando found that the web demo of MediaPipe ran very smoothly. To take advantage of this, he devised a hybrid approach: hand detection runs on a web frontend, and mouse control runs on a Python backend. He considered HTTP requests, WebSockets, and gRPC streaming for communication between the two, and ultimately chose WebSockets for its real-time nature and ease of implementation.

Next, Chernando implemented a WebSocket server that receives JSON-formatted messages and uses them to set the mouse cursor's coordinates. The system tracks the tip of the thumb to position the cursor and detects clicks by calculating the distance between the thumb and the index finger.
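The message handling described above can be sketched in Python as follows. This is a minimal illustration, not Chernando's code: the JSON shape (`{"landmarks": [{"x": ..., "y": ...}, ...]}`), the function name `handle_message`, and the value of `PINCH_THRESHOLD` are hypothetical. It assumes the frontend forwards MediaPipe's 21 hand landmarks in normalized [0, 1] image coordinates, where index 4 is the thumb tip and index 8 is the index fingertip. Actually moving the OS cursor is left out; the function only returns the target position and whether a pinch (click) is detected.

```python
import json
import math

THUMB_TIP = 4  # MediaPipe hand landmark index for the thumb tip
INDEX_TIP = 8  # MediaPipe hand landmark index for the index fingertip

# Hypothetical click threshold, in normalized image units.
PINCH_THRESHOLD = 0.05


def handle_message(raw: str, screen_w: int, screen_h: int) -> tuple:
    """Turn one JSON message into (cursor_x, cursor_y, is_pinching)."""
    msg = json.loads(raw)
    pts = msg["landmarks"]
    thumb = pts[THUMB_TIP]
    index = pts[INDEX_TIP]

    # The cursor follows the thumb tip, scaled from [0, 1] to screen pixels.
    cursor_x = round(thumb["x"] * (screen_w - 1))
    cursor_y = round(thumb["y"] * (screen_h - 1))

    # A click is a pinch: thumb tip and index fingertip close together.
    gap = math.dist((thumb["x"], thumb["y"]), (index["x"], index["y"]))
    return cursor_x, cursor_y, gap < PINCH_THRESHOLD
```

A fixed threshold like this is exactly what breaks when the hand moves toward or away from the camera, which is the problem the next step addresses.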

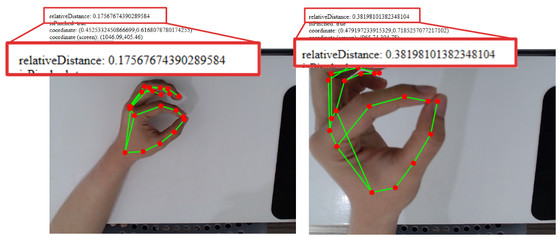

The distance between fingertips also changed depending on how far the hand was from the camera. Chernando resolved this by measuring it relative to the distance between a fingertip and its corresponding joint, so the hand behaves properly whether it is close to or far from the camera.
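A minimal sketch of this scale-invariant check, assuming the reference length is the segment between the index fingertip and the next joint down the finger (MediaPipe's DIP joint, landmark 7). The function names and the 0.8 threshold are hypothetical choices for illustration. Because moving the hand away from the camera shrinks both distances by roughly the same factor, their ratio stays about the same.

```python
import math

Point = tuple  # (x, y) in normalized image coordinates

PINCH_RATIO_THRESHOLD = 0.8  # hypothetical value


def pinch_ratio(thumb_tip: Point, index_tip: Point, index_dip: Point) -> float:
    """Thumb-to-index gap divided by the index fingertip segment length."""
    gap = math.dist(thumb_tip, index_tip)
    segment = math.dist(index_tip, index_dip)
    if segment == 0.0:
        return math.inf  # degenerate detection: treat as "not pinching"
    return gap / segment


def is_pinching(thumb_tip: Point, index_tip: Point, index_dip: Point) -> bool:
    """True when the normalized gap is below the threshold."""
    return pinch_ratio(thumb_tip, index_tip, index_dip) < PINCH_RATIO_THRESHOLD
```

For example, a hand twice as far from the camera produces half the raw gap (0.025 instead of 0.05) but the same ratio of 0.5.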

However, he ran into a problem: the cursor shook even when the hand was completely still. The jitter came from the hand landmarker's detection model itself, and Chernando smoothed out the movement by applying a moving average. This came with a trade-off, though: the larger the moving average buffer, the greater the latency.

To make the cursor easier to operate at the edges of the screen, Chernando also applied a linear transformation to the coordinates. This made it possible to move the cursor all the way to the screen's edge without the hand having to leave the camera's field of view.
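Both fixes can be sketched in a few lines of Python. This is an illustrative version under assumptions: `MovingAverage` and `remap` are hypothetical names, and the active region of 0.2 to 0.8 of the frame used in the tests is an arbitrary choice, not a value from the project.

```python
from collections import deque


class MovingAverage:
    """Average of the last `size` samples: a larger size is smoother but laggier."""

    def __init__(self, size: int) -> None:
        # deque drops the oldest sample automatically once it is full.
        self.samples = deque(maxlen=size)

    def update(self, x: float, y: float) -> tuple:
        self.samples.append((x, y))
        n = len(self.samples)
        return (sum(p[0] for p in self.samples) / n,
                sum(p[1] for p in self.samples) / n)


def remap(value: float, in_min: float, in_max: float, out_max: int) -> int:
    """Linearly map [in_min, in_max] onto [0, out_max], clamping values outside."""
    t = (value - in_min) / (in_max - in_min)
    t = min(max(t, 0.0), 1.0)
    return round(t * out_max)
```

Because only the central part of the camera frame is mapped onto the full screen, the cursor reaches the screen edge while the hand is still comfortably inside the camera's view; anything outside that region is clamped to the edge.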

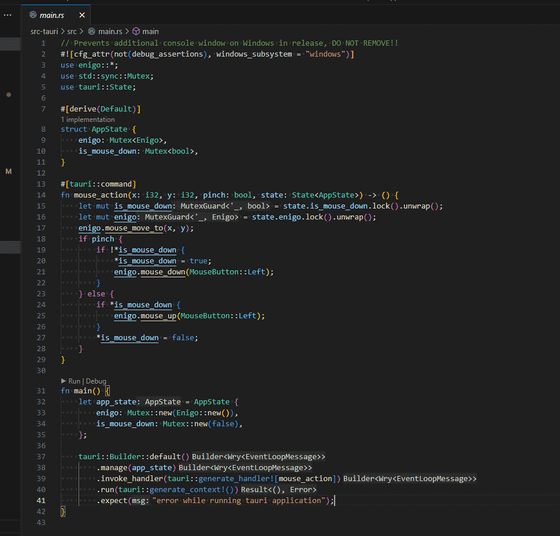

In addition, to remove the limitation that a web browser tab had to remain active, Chernando reimplemented

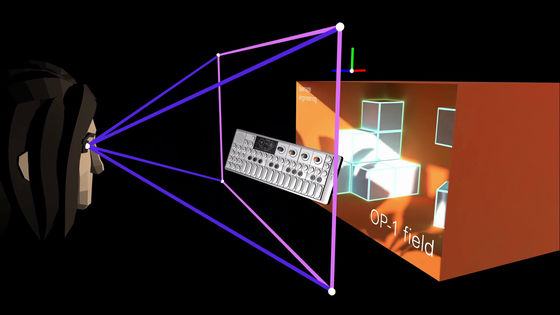

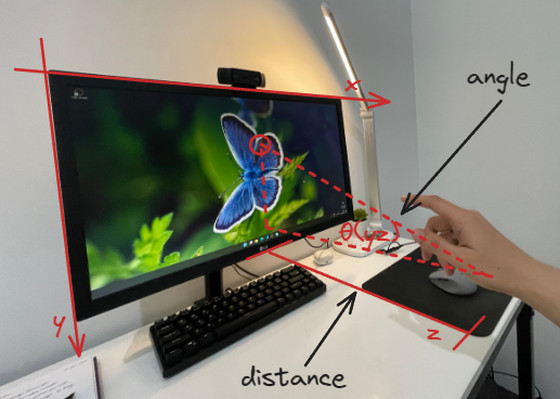

Chernando also implemented a forward-facing camera mode inspired by Meta Quest's hand gesture input. This mode calculates the finger's orientation and its distance from the camera to determine where the cursor lands on the screen. To combat jitter, he introduced the One Euro Filter and applied thresholding to the angle, which brought accuracy up to a usable level.
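The One Euro Filter (Casiez, Roussel, and Vogel, 2012) is a low-pass filter whose cutoff frequency rises with the signal's speed, so it suppresses jitter when the hand is nearly still but adds little lag during fast movement. Below is a minimal single-axis sketch in Python; the parameter defaults are illustrative, not values from the project. The `AngleGate` helper is a hypothetical reading of "thresholding the angle" that ignores changes smaller than a fixed threshold; the source does not say exactly how Chernando applied the threshold.

```python
import math


def _alpha(cutoff: float, dt: float) -> float:
    """Smoothing factor of a first-order low-pass filter with a cutoff in Hz."""
    tau = 1.0 / (2.0 * math.pi * cutoff)
    return 1.0 / (1.0 + tau / dt)


class OneEuroFilter:
    """One Euro Filter for a single scalar signal.

    min_cutoff: lower values remove more jitter when the hand is still.
    beta: higher values reduce lag when the hand moves quickly.
    """

    def __init__(self, min_cutoff: float = 1.0, beta: float = 0.0,
                 d_cutoff: float = 1.0) -> None:
        self.min_cutoff = min_cutoff
        self.beta = beta
        self.d_cutoff = d_cutoff
        self.x_prev = None
        self.dx_prev = 0.0
        self.t_prev = 0.0

    def __call__(self, t: float, x: float) -> float:
        if self.x_prev is None:
            # First sample: nothing to smooth against yet.
            self.x_prev, self.t_prev = x, t
            return x
        dt = t - self.t_prev
        if dt <= 0:
            return self.x_prev  # ignore duplicate or out-of-order timestamps
        # Low-pass filtered estimate of the signal's speed.
        dx = (x - self.x_prev) / dt
        a_d = _alpha(self.d_cutoff, dt)
        dx_hat = a_d * dx + (1.0 - a_d) * self.dx_prev
        # The cutoff grows with speed: smooth when slow, responsive when fast.
        cutoff = self.min_cutoff + self.beta * abs(dx_hat)
        a = _alpha(cutoff, dt)
        x_hat = a * x + (1.0 - a) * self.x_prev
        self.x_prev, self.dx_prev, self.t_prev = x_hat, dx_hat, t
        return x_hat


class AngleGate:
    """Hold the last accepted angle and ignore changes smaller than `threshold`."""

    def __init__(self, threshold: float) -> None:
        self.threshold = threshold
        self.value = None

    def __call__(self, angle: float) -> float:
        if self.value is None or abs(angle - self.value) >= self.threshold:
            self.value = angle
        return self.value
```

In practice one filter instance would be kept per coordinate (x and y) and fed timestamps in seconds; raising `beta` trades some smoothing for responsiveness during fast movement.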

According to Chernando, the downward mode eventually worked fairly stably, but the forward mode still has some challenges, in particular unstable hand landmarks at certain positions and angles, and cursor drift during pinch gestures. Chernando said the project gave him valuable experience in combining and implementing a range of technical elements, including MediaPipe, Tauri, Rust, and the underlying mathematics.

The project is published on GitHub and, at the time of writing, has only been tested in a Windows environment.

GitHub - reynaldichernando/pinch: Simulate mouse input using hand tracking

https://github.com/reynaldichernando/pinch

in Software, Posted by log1i_yk