Study finds that programmers who use AI code-generation tools are more likely to write insecure code than programmers who do not

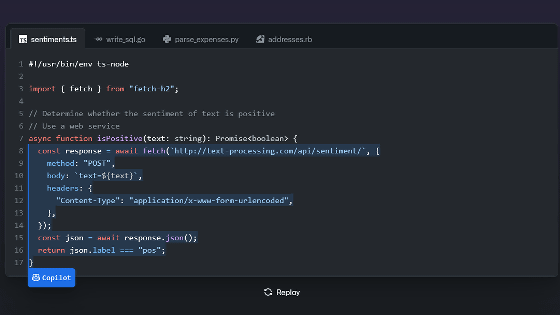

In 2021, the software development platform GitHub collaborated with the AI research organization OpenAI to develop the code completion AI ``

[2211.03622] Do Users Write More Insecure Code with AI Assistants?

https://doi.org/10.48550/arXiv.2211.03622

AI assistants help developers produce code that's insecure • The Register

https://www.theregister.com/2022/12/21/ai_assistants_bad_code/

Hammond Pearce, a researcher at New York University, pointed out in a paper published in August 2021 that code written with AI assistance is prone to exploitable vulnerabilities, based on experiments under a variety of conditions.

Therefore, a research team led by Neil Perry of Stanford University recruited 47 people with varying levels of programming experience, including undergraduates, graduate students, and industry professionals, and asked them to write code in response to five prompts.

For the first task, participants were asked to write two functions in Python, one that encrypts a given string and one that decrypts it, using a given symmetric key.

In this task, 79% of the group without AI support produced correct code, compared with only 67% of the group with AI support. The results also show that the AI-supported group was more likely to be offered insecure code by the AI and more likely to use simple ciphers such as substitution ciphers.
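To illustrate why a simple substitution cipher offers little protection, here is a minimal sketch, not taken from the study, of a Caesar shift cipher (one of the simplest substitution ciphers) together with a brute-force attack. The function names `shift_encrypt`, `shift_decrypt`, and `brute_force`, as well as the sample message, are hypothetical and chosen only for illustration.

```python
import string

ALPHABET = string.ascii_lowercase


def shift_encrypt(plaintext: str, key: int) -> str:
    """Shift each lowercase letter forward by `key` positions.

    Characters outside a-z (spaces, punctuation) are left unchanged.
    """
    out = []
    for ch in plaintext:
        if ch in ALPHABET:
            out.append(ALPHABET[(ALPHABET.index(ch) + key) % 26])
        else:
            out.append(ch)
    return "".join(out)


def shift_decrypt(ciphertext: str, key: int) -> str:
    """Undo shift_encrypt by shifting backward by the same key."""
    return shift_encrypt(ciphertext, -key)


def brute_force(ciphertext: str) -> list:
    """Return every candidate plaintext; only 26 keys are possible."""
    return [shift_decrypt(ciphertext, k) for k in range(26)]


if __name__ == "__main__":
    secret = shift_encrypt("attack at dawn", 3)
    print(secret)
    # The original message appears among the 26 candidates,
    # even though the attacker never learned the key.
    print("attack at dawn" in brute_force(secret))
```

Because an attacker needs at most 26 guesses, a cipher like this encrypts and decrypts "correctly" while providing essentially no confidentiality.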

In the second through fourth tasks as well, the researchers found that using an AI programming assistant made participants more likely to produce code with vulnerabilities. On the other hand, for the fifth task, "write a function in C that takes a signed integer num and returns a string representation of that integer," the results were mixed and did not simply show that AI assistance makes vulnerable code more likely.

"We found that code written by the AI-supported group was very likely to contain integer overflow vulnerabilities," Perry and colleagues said.
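As a rough illustration of what an integer overflow looks like, the following sketch (not from the study) uses Python's `ctypes.c_int32` to mimic a 32-bit signed integer. It assumes a 32-bit two's-complement `int`. In real C, signed overflow is undefined behavior rather than a guaranteed wraparound, so this only models what commonly happens on typical hardware. The helper name `as_int32` is hypothetical.

```python
import ctypes

INT32_MAX = 2**31 - 1
INT32_MIN = -(2**31)


def as_int32(value: int) -> int:
    """Truncate a Python int to 32 bits, read as signed two's complement."""
    # ctypes integer types perform no overflow checking; they keep the low bits.
    return ctypes.c_int32(value).value


if __name__ == "__main__":
    # Adding 1 to the largest value wraps around to the smallest.
    print(as_int32(INT32_MAX + 1))
    # Negating the smallest value yields the same value again, so code that
    # handles negative numbers by flipping the sign fails for this one input.
    print(as_int32(-INT32_MIN))
```

Edge cases like these are easy to overlook when reviewing generated code, because the function behaves correctly for almost every other input.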

"The group that received AI support was more likely to believe that they had written secure code," Perry and his colleagues said, adding that AI assistants have the potential to introduce vulnerabilities and that their use should be carefully considered.

in Software, Posted by log1r_ut