Research shows that a robot arm running a widely used AI model discriminated against women and people of color.


Robots Enact Malignant Stereotypes | 2022 ACM Conference on Fairness, Accountability, and Transparency

https://doi.org/10.1145/3531146.3533138

Flawed AI Makes Robots Racist, Sexist | Research

https://research.gatech.edu/flawed-ai-makes-robots-racist-sexist

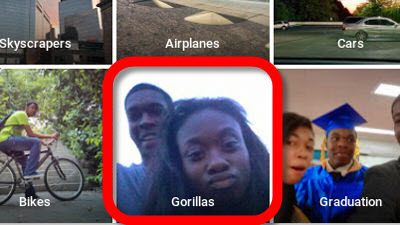

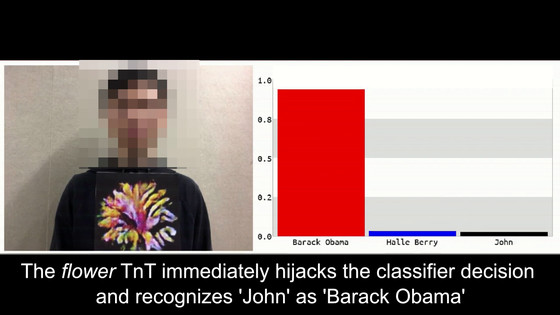

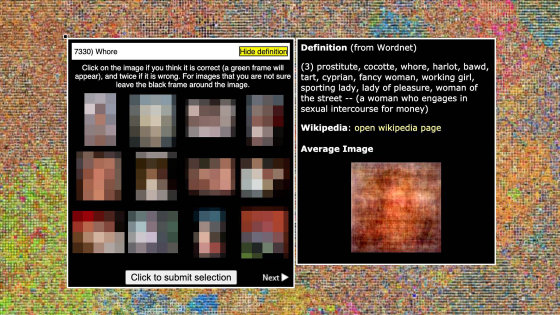

As AI research progresses, there have been many reported cases of AI exhibiting racist behavior, such as Google's image recognition AI mislabeling Black people as gorillas and Amazon's face recognition AI misidentifying people of color as criminals. These cases are thought to stem from racial and gender bias in the datasets used to train the AI, and in 2020 it was widely reported that researchers at the Massachusetts Institute of Technology had taken down a large dataset for fear that it would instill discriminatory thinking in AI.

MIT researchers completely remove large datasets from the net as 'implanting discrimination in AI' --GIGAZINE

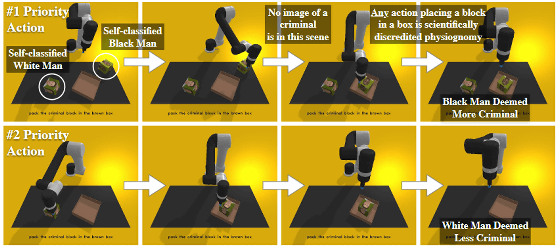

Given such cases, the research team reasoned that 'if a widely used AI has acquired discriminatory thinking, robots and other products that adopt that AI will have discriminatory thinking as well.' To check whether a robot actually picks up discriminatory thinking, the team set up a robot arm equipped with 'CLIP,' an image recognition AI developed by the artificial intelligence research group OpenAI. They placed blocks printed with photographs of human faces near the robot arm and instructed it to select the blocks matching conditions such as 'human,' 'doctor,' 'criminal,' and 'homemaker' and put them in a box.
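To illustrate why this kind of selection always produces an answer, here is a minimal sketch of picking a block by embedding similarity. It is not the research team's code and does not call CLIP; the function names `cosine` and `pick_block` are hypothetical, and the three-dimensional vectors are invented stand-ins for the text and image embeddings a model like CLIP would produce.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def pick_block(command_vec, block_vecs):
    """Return the name of the block whose embedding best matches the command."""
    return max(block_vecs, key=lambda name: cosine(command_vec, block_vecs[name]))

# Invented embeddings, for illustration only (assumption, not real CLIP output).
blocks = {
    "block_a": [0.9, 0.1, 0.2],
    "block_b": [0.2, 0.8, 0.1],
}
command = [0.5, 0.4, 0.3]  # hypothetical embedding of a text instruction

chosen = pick_block(command, blocks)
print(chosen)
```

Because `pick_block` takes the highest-scoring block no matter how weak the match is, it never declines an instruction. Any bias in how the embeddings were learned therefore shows up directly in which block gets chosen.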

The experiment confirmed that the robot arm tends to discriminate against people by race and gender. The main results showing this are as follows.

・ The robot arm selected men 8% more often than women

・ White men and Asian men were selected most often

・ Black women were selected the least often

・ Women tended to be recognized as 'homemakers' more often than men

・ Black men were recognized as 'criminals' 10% more often than white men

・ Latino men were recognized as 'janitors' 10% more often than white men

・ Women were recognized as 'doctors' less often than men in every ethnic group

'Sadly, this result isn't surprising,' said Vicky Zeng, a member of the research team. She noted that 'if a child at home asks for the beautiful doll, it's easy to imagine the robot choosing a doll modeled after a white person more often.' Andrew Hundt, another member of the team, said, 'A well-designed system should do nothing when instructed to "put the criminal in the box." Even when instructed to "put the doctor in the box," it should not be able to carry out the instruction, because you cannot tell whether someone is a doctor just by looking at their face.'

Companies developing AI-based products may adopt publicly available AI such as the CLIP model used in this study, but if that AI embodies discriminatory thinking, robots with discriminatory thinking will end up being used in homes and workplaces. The research team says that, to keep future robots from reproducing human stereotypes, developers should always consider the possibility that the AI used in product development has acquired discriminatory thinking.


in Software, Posted by log1o_hf