Introducing 'CLIPort', an AI that uses a robot arm to perform tasks given in words, such as 'put the scissors in the box' and 'fold the cloth'

A research team from the University of Washington and NVIDIA, a semiconductor manufacturer that also develops AI technology, has announced 'CLIPort', a machine learning framework that not only manipulates specific objects accurately with a robot arm but also understands abstract concepts about objects expressed in natural language.

CLIPort

https://cliport.github.io/

Recent studies have shown that end-to-end networks allow AI to acquire dexterous manipulation skills that require precise spatial reasoning. However, the research team points out that with conventional methods, a skill once acquired often cannot be generalized to new goals, and a learned concept often cannot be transferred to another task. Meanwhile, training on large-scale data has made great strides in learning generalizable semantic representations of vision and language, but those representations lack the spatial understanding needed for precise manipulation.

To achieve vision-based manipulation, the research team developed a framework called 'CLIPort' that combines the Transporter Networks architecture, which provides spatial precision, with the CLIP architecture, which understands the meaning of a wide range of images through language.

The idea of combining the two architectures to operate a robot arm was reportedly inspired by the 'two-streams hypothesis', which holds that visual information reaching the visual cortex is processed along two routes: the ventral pathway, which handles the color and shape of the target, and the dorsal pathway, which handles position and movement.
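The two-stream idea can be illustrated with a toy sketch. This is not the CLIPort implementation: the grid of labels, the height map, the function names, and the fusion rule (an element-wise product) are all assumptions chosen for illustration. A "what" stream scores each pixel by how well it matches the instructed object, a "where" stream scores each pixel by how graspable it looks, and the fused map decides where to pick.

```python
# Toy two-stream sketch. NOT the CLIPort implementation: every name here and
# the fusion rule (element-wise product) are illustrative assumptions.

def semantic_stream(labels, target):
    """'What' stand-in: 1.0 where a pixel's label matches the instructed target."""
    return [[1.0 if cell == target else 0.0 for cell in row] for row in labels]

def spatial_stream(heights):
    """'Where' stand-in: taller pixels are treated as more graspable."""
    peak = max(max(row) for row in heights) or 1.0  # avoid dividing by zero
    return [[h / peak for h in row] for row in heights]

def fuse(semantic, spatial):
    """Combine both streams into a single per-pixel pick score."""
    return [[s * p for s, p in zip(srow, prow)]
            for srow, prow in zip(semantic, spatial)]

def best_pick(affordance):
    """Return (row, col) of the highest-scoring pixel."""
    coords = [(r, c) for r, row in enumerate(affordance) for c in range(len(row))]
    return max(coords, key=lambda rc: affordance[rc[0]][rc[1]])

# Hypothetical top-down view of a table: a label and a height per pixel.
labels = [
    ["table", "blue", "table"],
    ["orange", "table", "orange"],
]
heights = [
    [0.0, 0.04, 0.0],
    [0.02, 0.0, 0.04],
]

pick = best_pick(fuse(semantic_stream(labels, "orange"), spatial_stream(heights)))
print(pick)
```

Neither stream is sufficient alone in this toy: the semantic map cannot choose between the two orange pixels, and the height map cannot tell orange from blue. Only the fused score singles out one location.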

CLIPort can perform various tasks specified in natural language without any explicit representation of object poses or states, instance segmentation, or syntactic structure. The demonstration carried out by the research team can be seen in the following video.

CLIPort (CoRL 2021) - YouTube

The research team demonstrated CLIPort by performing various tasks with this robot arm.

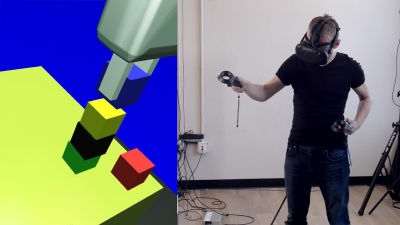

First, a person operates the robot arm by hand ...

... to train it on each individual task.

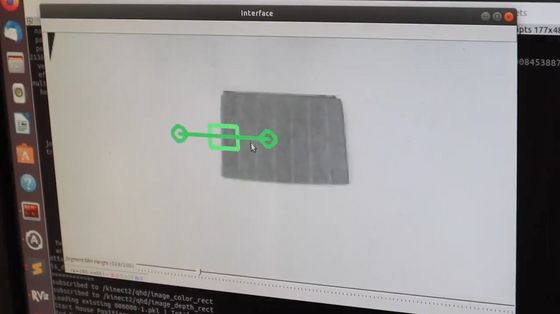

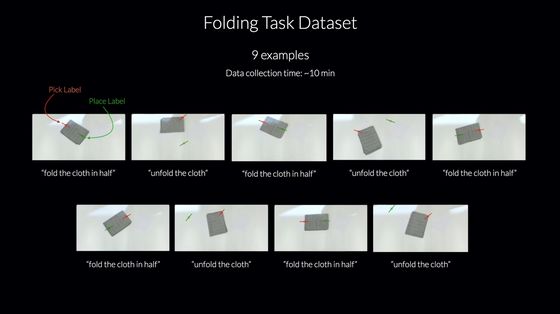

The 'fold / unfold the cloth' task was trained on a total of just nine training samples.

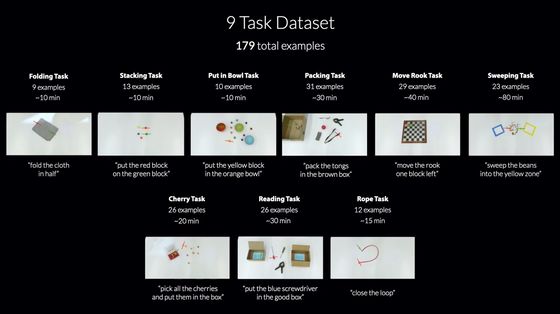

For this demonstration, the research team prepared nine tasks in total: 'fold / unfold the cloth', 'place a block in a designated location', 'put a designated object in a bowl', 'put a designated object in a box', 'move a chess piece', 'sweep up scattered objects', 'pick up cherries and put them in a box', 'read the text on the boxes and put objects in the specified box', and 'move the rope as instructed'. The team states that the training data for all nine tasks combined amounted to only 179 samples, or roughly 20 per task on average.

When instructed to 'fold the cloth in half', the robot arm grabbed the edge of the cloth ...

... and folded it in half.

When instructed to 'unfold the cloth', it unfolded the folded cloth.

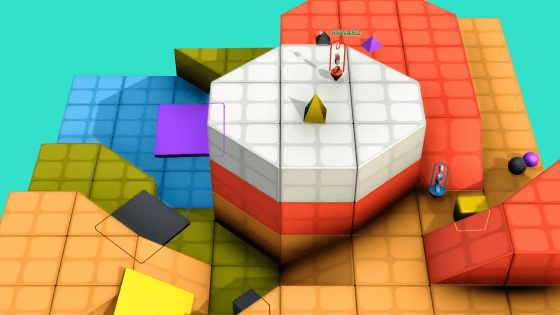

Next, colorful blocks were placed at random and the robot was instructed to 'put the orange block on the blue block'.

The robot arm correctly picked out the orange block and placed it on top of the blue block.

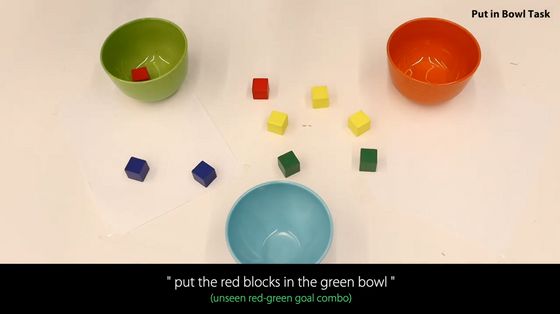

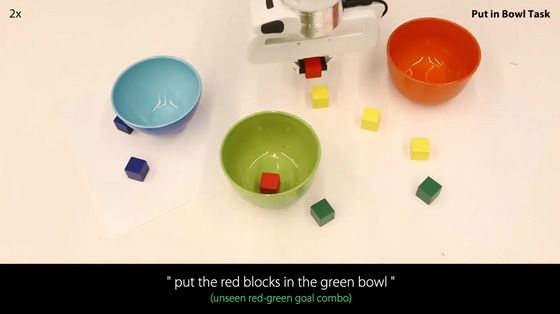

In addition, while the robot was carrying out the instruction 'put the red blocks in the green bowl' ...

... the team tested interfering with it by moving the bowl.

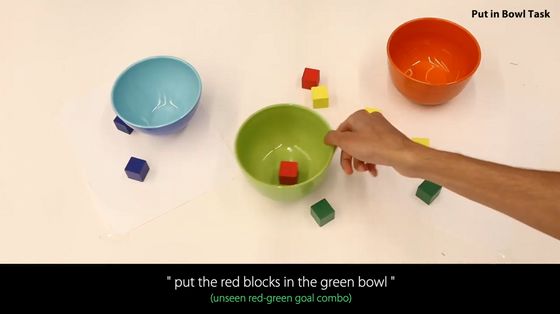

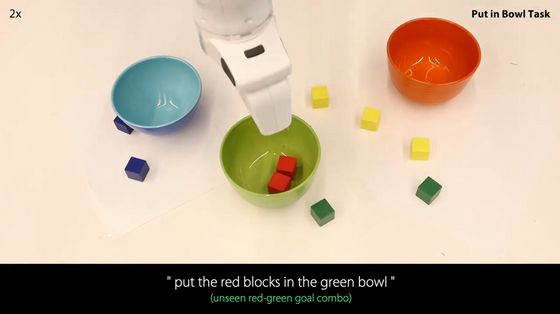

The robot arm grabbed a red block ...

... and, even though the bowl had been moved from its original position, it succeeded in placing the block accurately in the green bowl.

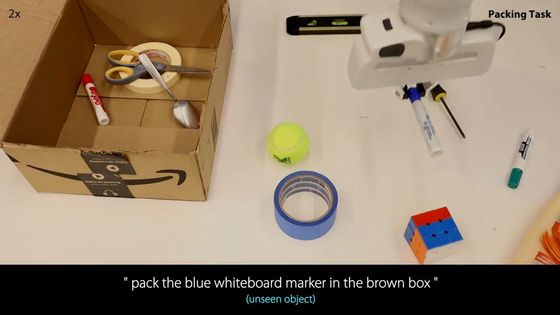

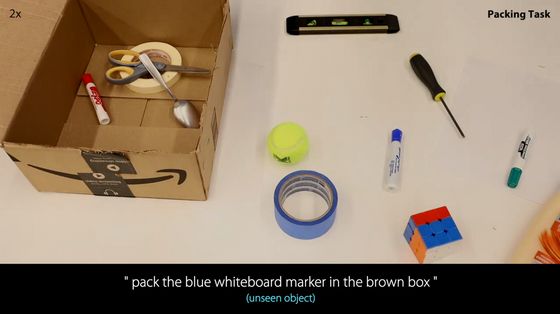

In addition, CLIP, the image recognition model that CLIPort uses to recognize objects, can identify not only objects it was trained on but also objects it is seeing for the first time. In the test of putting a specified object in the box, the robot was instructed to pick up a 'blue whiteboard marker', an object it had never seen before ...
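CLIP can name unfamiliar objects because it embeds images and text in a shared vector space and compares them by cosine similarity, so any phrase can serve as a label. The sketch below shows only that matching step. The three-dimensional vectors, the crop names, and the `best_match` helper are hypothetical stand-ins: a real CLIP encoder produces much larger embeddings, and matching against object crops is a simplification assumed here for illustration rather than a description of how CLIPort itself uses CLIP.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm

def best_match(text_vec, candidates):
    """Return the name of the candidate whose embedding is closest to the text."""
    return max(candidates, key=lambda name: cosine(text_vec, candidates[name]))

# Hypothetical embeddings (assumed values, not produced by a real encoder).
text_embedding = [0.1, 0.9, 0.4]  # stand-in for "blue whiteboard marker"
object_embeddings = {
    "crop_a": [0.9, 0.1, 0.0],
    "crop_b": [0.2, 0.8, 0.5],
    "crop_c": [0.0, 0.3, 0.9],
}

match = best_match(text_embedding, object_embeddings)
print(match)
```

Because the label is just a text embedding, no classifier has to be retrained when a new object name appears in an instruction.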

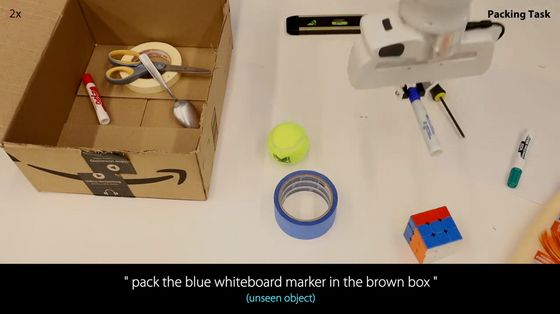

The robot arm accurately grabbed the blue whiteboard marker.

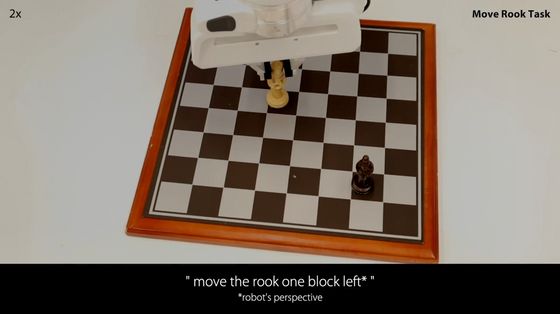

CLIPort also moves chess pieces as instructed ...

Putting a specific object in a designated box

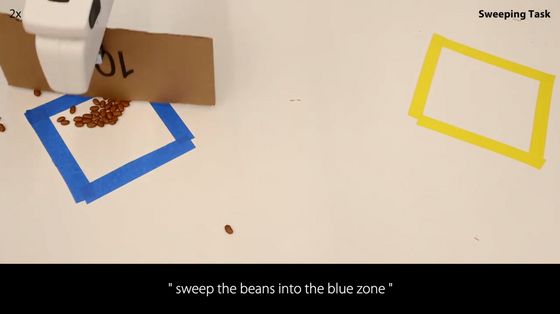

He was able to complete various tasks such as sweeping coffee beans.
