Apple has been scanning iCloud email attachments since 2019

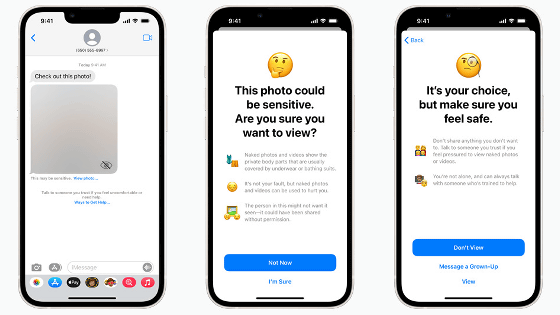

Starting with iOS 15, scheduled for release in the fall of 2021, Apple will introduce a mechanism that scans images stored on iOS devices and in iCloud and uses hashing to check them for child sexual abuse material (CSAM).

Apple already scans iCloud Mail for CSAM, but not iCloud Photos - 9to5Mac

https://9to5mac.com/2021/08/23/apple-scans-icloud-mail-for-csam/

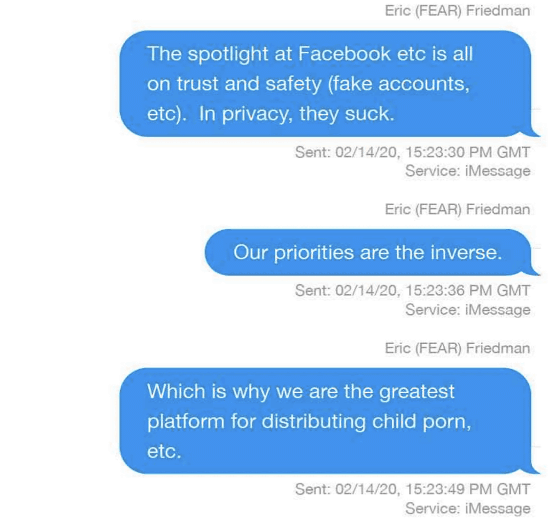

Apple decided to scan for CSAM because the strong privacy protections of its products can conceal malicious content. On August 19, 2021, Forbes reported that Eric Friedman, head of Apple's fraud prevention division, had sent a colleague an iMessage stating, 'We have become the best platform for distributing child pornography.'

Friedman wrote, 'Facebook is good at detecting fake accounts, but it is the worst on privacy. Our priorities are the reverse, which is why we are the best platform for distributing child pornography.' The recipient of the message replied, 'Is that true? Does that mean we have a lot of it in our ecosystem? I would think more people use other file-sharing systems.' Friedman answered 'yes,' affirming that Apple is being used as a platform for distributing child pornography.

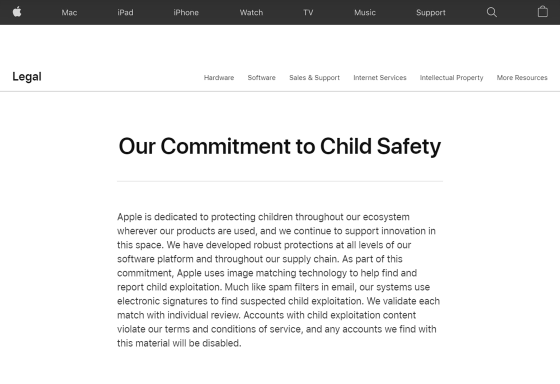

In response to this report, Ben Lovejoy of 9to5Mac wondered how Apple could know that there was a lot of child pornography on its platform. When he looked into it, he first found that a previously published page, 'Our Commitment to Child Safety,' stated: 'Apple uses image matching technology to find and report child exploitation. Like spam filters, our systems use electronic signatures to detect suspected child exploitation. Each match is individually reviewed and verified. Accounts holding such content violate the terms of use and will be disabled.'

An archived copy of that page is available below.

Legal - Our Commitment to Child Safety - Apple

In addition, Jane Horvath, Apple's chief privacy officer, said at a technology conference in 2020 that Apple uses screening technology to look for illegal images. Horvath also said that Apple disables accounts when it finds images of child exploitation, but did not give details on how such images are found.

When Lovejoy contacted Apple, the company first replied that it had never scanned iCloud Photos. However, it acknowledged that since 2019 it has scanned attachments in both incoming and outgoing iCloud Mail for CSAM. Lovejoy points out that iCloud Mail was easy to scan on Apple's servers because it is not encrypted.

Apple also indicated that it performs limited scanning of some other data, but did not specify what kind of scanning that is. It did explain that the scanning is small in scale and that the 'other data' does not include iCloud backups.

Apple has not commented on Friedman's remarks, but Lovejoy takes the view that Apple has not been scanning iCloud Photos, noting that Apple reports only a few hundred CSAM cases each year. He suggests that Friedman's statement was an inference: other cloud services were aggressively scanning for CSAM, while Apple was not doing so in iCloud.

In response to Apple's announcement that it will introduce a CSAM-checking mechanism, critics have pointed out that automated processes using AI are prone to errors, and that content and metadata the AI deems suspicious may be sent to private organizations and law enforcement agencies without human verification. Automated monitoring of messages and chats is seen as undermining the confidentiality of digital communications, and Edward Snowden warned, 'If they can scan for child pornography, all kinds of content will be scanned in the near future.'

No matter how well-intentioned, @Apple is rolling out mass surveillance to the entire world with this. Make no mistake: if they can scan for kiddie porn today, they can scan for anything tomorrow.

They turned a trillion dollars of devices into iNarcs—*without asking.* https://t.co/wIMWijIjJk

— Edward Snowden (@Snowden) August 6, 2021

Wrongful arrests over child pornography occurred even before automated detection by AI. In the past there have been cases in which a grandfather who had saved photos of his grandchildren was prosecuted.

An elderly man who saved a photo of his grandson bathing on his computer was arrested and charged with simple possession of child pornography - GIGAZINE
