Experts warn of the dangers of 'emotion recognition AI': what is the problem?

As remote work and remote lessons become more prevalent, demand is growing for software that monitors workers and students through their devices. Some services also use AI to read emotions from the facial expressions of the person on the other end of a remote conversation. These technologies have not been scientifically proven and raise concerns about misuse, researcher Kate Crawford warns in an article in Nature.

Time to regulate AI that interprets human emotions

https://www.nature.com/articles/d41586-021-00868-5

Because of the new coronavirus infection (COVID-19), cities were locked down, and remote work and remote learning are now recommended. According to Crawford, many companies and organizations are introducing 'AI that reads the emotions of the person on the other side of the screen' to address the problem that 'communication that used to be face-to-face has become remote, making it difficult to convey emotions.'

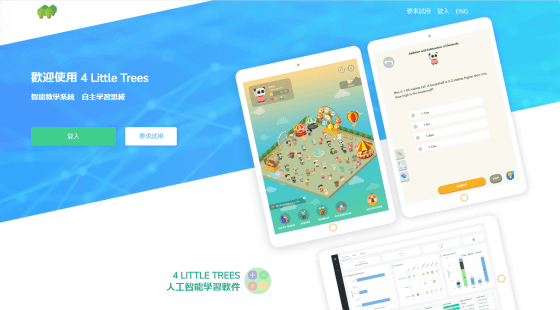

For example, a Hong Kong-based service called 4 Little Trees claims to read children's emotions during remote lessons. 4 Little Trees reads students' facial features through a camera, classifies their emotions into categories such as 'happiness,' 'anger,' 'disgust,' 'surprise,' and 'fear,' and uses them to measure motivation and predict grades. Many similar tools exist for remote workers, and the 'emotion recognition industry' is estimated to grow to $37 billion by 2026.

However, doubts remain about the accuracy of the AI-based emotion recognition technology that underlies such services. A study published in 2019 said of this technology, 'Tech companies may well be asking a question that is fundamentally wrong.' Even when a person's face shows the same expression, the emotion behind it differs depending on the context, so reading emotions from facial movements alone, without that context, can be misleading.

Crawford calls the tendency to make false guesses about a person's abilities and mental state from their appearance the 'phrenological impulse.' Concerns about misuse of emotion recognition technology have grown in recent years, and there are calls for the phrenological impulse to be regulated as well.

'There are regulations around the world that enforce scientific rigor in the development of medicines that treat the 'body.' Similar regulations should apply to tools that make claims about a person's 'mind,'' Crawford said. Facial recognition technology has been used in criminal investigations, but in recent years there has been a growing trend toward banning it because it may violate personal freedom and rights. IBM also announced in 2020 that it would withdraw from the facial recognition market, saying that the 'technology promotes discrimination and inequality.' Researchers are asking authorities to regulate facial recognition technology, and Crawford argues that the same should apply to emotion recognition technology.

Historically, the 'lie detector' followed a similar path. The lie detector was invented in the 1920s and continued to be used by the US military and the FBI for decades until it was banned by federal law. During that time, lie detectors led to many wrong decisions.

How much does a lie detector actually see through a lie? --GIGAZINE

The idea that 'emotions are expressed through universal facial expressions' originates from the research of psychologist Paul Ekman. By studying remote tribes in Papua New Guinea, Ekman concluded that six emotions, 'happiness,' 'anger,' 'disgust,' 'fear,' 'sadness,' and 'surprise,' are universal even across different cultures.

Cultural anthropologist Margaret Mead pointed out that 'Ekman's claims downplay context, culture and social factors,' but many AI-based emotion recognition techniques are said to be built on Ekman's claims. Setting aside the more complicated and diverse elements that Mead pointed out and dividing human emotions into six categories makes it possible to standardize the system and automate it at large scale. After the September 11 terrorist attacks in the United States, Ekman sold the Transportation Security Administration a system meant to identify possible terrorists by reading the emotions of airline passengers, but that system has been strongly criticized as unreliable and racially biased.

With emotion recognition AI being widely marketed, Crawford argues that it is time to introduce legislation to prevent scientifically unproven tools from being used in education, healthcare, employment, and criminal investigations.

in Note, Posted by darkhorse_log