Advanced image recognition AI can be easily fooled by 'adversarial images' such as handwritten notes and stickers

By using artificial intelligence (AI) to automatically identify people and objects in images, machines can now perform many tasks that previously only humans could do. However, it turns out that even the most advanced AI can be easily fooled by handwritten notes. The Verge, an IT news site, has pointed out this unexpected weakness of image recognition AI.

OpenAI's state-of-the-art machine vision AI is fooled by handwritten notes - The Verge

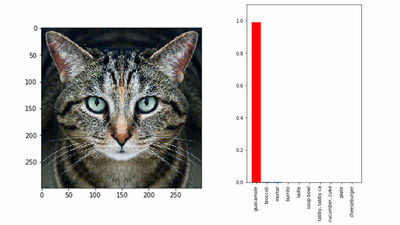

GIGAZINE has previously covered 'CLIP,' an image classification model developed by the AI research lab OpenAI. A major feature of CLIP is that it learns visual representations not only from images but also from natural language. This lets the AI do something humans take for granted: see the word 'apple' and picture an apple.

What are the characteristics of how OpenAI's image recognition AI 'CLIP' thinks? - GIGAZINE

However, associating images with text and using those associations as tags for classification has a weakness: text that appears in an image can be linked too directly to the concept it names. OpenAI acknowledges that CLIP is vulnerable to a 'typographic attack,' in which the AI is easily tricked by handwritten text.

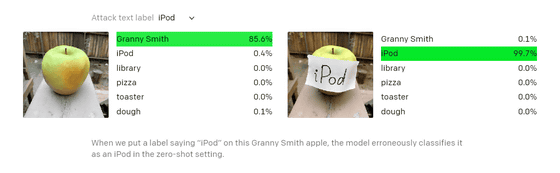

For example, in the image below, CLIP correctly recognizes the apple on the left as an apple, but the apple on the right, with a handwritten note reading 'iPod' attached, is recognized almost entirely as an iPod. OpenAI calls this kind of misrecognition an 'abstraction fallacy,' because it arises from CLIP classifying images at a high level of abstraction.

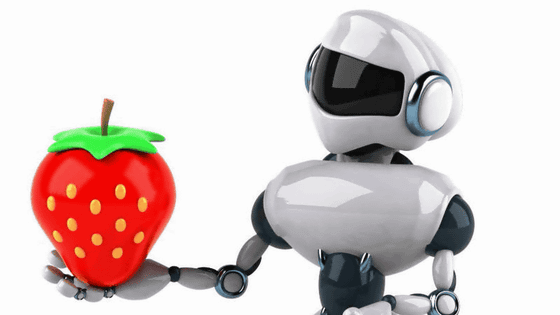
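CLIP classifies images 'zero-shot' by comparing an image's embedding with the embeddings of candidate text labels and picking the closest match. The minimal sketch below illustrates why a written word can hijack that comparison. It assumes made-up two-dimensional embeddings: the vectors, the labels, and the fixed scale factor of 100 are hypothetical stand-ins, not values taken from CLIP itself.

```python
import math

def normalize(v):
    """Scale a vector to unit length so dot products become cosine similarities."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]

def zero_shot_probs(image_emb, label_embs, scale=100.0):
    """Softmax over scaled cosine similarities between one image and each label.

    The scale of 100 is an assumption for this sketch (CLIP learns its own).
    """
    img = normalize(image_emb)
    logits = [scale * sum(a * b for a, b in zip(img, normalize(t))) for t in label_embs]
    m = max(logits)  # subtract the max before exponentiating for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical toy embeddings: axis 0 ~ "apple-ness", axis 1 ~ "iPod-ness".
labels = ["a photo of an apple", "a photo of an iPod"]
label_embs = [[1.0, 0.0], [0.0, 1.0]]

plain_apple = [0.9, 0.1]      # made-up vector for a plain apple photo
apple_with_note = [0.4, 0.6]  # assumed effect of the word "iPod": embedding pulled toward that label

for name, emb in [("plain apple", plain_apple), ("apple + 'iPod' note", apple_with_note)]:
    probs = zero_shot_probs(emb, label_embs)
    best = max(range(len(labels)), key=lambda i: probs[i])
    print(f"{name}: {labels[best]} ({probs[best]:.3f})")
```

In this toy setup, the large scale factor sharpens the softmax, so a moderate shift in the image embedding is enough to flip the prediction with very high confidence.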

Much research has been done on how to fool image recognition AI. For example, in 2018, Google researchers announced a method for confusing image recognition AI with just one sticker. The following video shows the experiment, in which simply placing one sticker next to a banana makes the AI mistake it for a toaster.

Adversarial Patch - YouTube

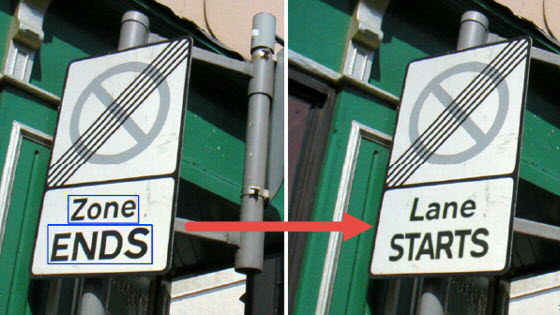

There have also been reports that Tesla's self-driving system, which recognizes its surroundings from camera images and drives automatically, was successfully made to change lanes on its own simply by placing a sticker that imitates a white lane marking on the road.

Footage of the experiment, in which a Tesla in Autopilot mode changes lanes on its own because of one small sticker on the road, has been released. The relevant part starts at around 1 minute 20 seconds in the following video.

Tencent Keen Security Lab: Experimental Security Research of Tesla Autopilot - YouTube

The existence of 'adversarial images' that deceive image recognition AI could become a vulnerability in future systems that rely on image recognition as-is.

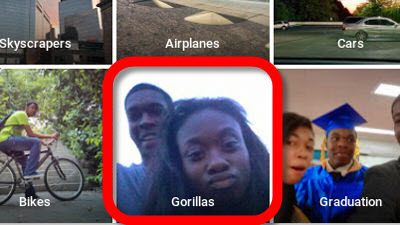

In addition, as in the case where Google's image recognition AI labeled Black people as gorillas and the developer apologized, an AI that tags images on its own can easily form overly direct associations, and there is a possibility that it becomes biased.

Developer apologizes for Google Photos recognizing Black people as gorillas - GIGAZINE

In fact, neurons have been found in CLIP that connect 'terrorism' with the 'Middle East,' 'Latin America' with 'immigrants,' and 'dark-skinned people' with 'gorillas.' OpenAI states that the associations behind these biases are rarely visible, which makes them difficult to predict in advance and sometimes difficult to correct.

CLIP is still only an experimental system, and OpenAI has commented, 'Our understanding of CLIP is still developing.' The Verge argued, 'Before we can entrust our lives to AI, we first need to take it apart and understand how it works.'


in Software, Posted by log1i_yk