Researchers warn of cyber attacks that derail AI by intentionally mixing typographical errors into text on the Internet

Developing AI that mimics human behavior, such as automatically generating images and text, recognizing faces, and playing games, requires learning from huge data sets. Images and text on the Internet are often used as the content of these data sets, but researchers from IBM Research, Amazon, and the University of Texas have published a paper arguing that typographical errors in text on the Internet can have a large impact on the development of AI.

[1812.00151] Discrete Adversarial Attacks and Submodular Optimization with Applications to Text Classification

https://doi.org/10.48550/arXiv.1812.00151

If AI can read, then plain text can be weaponized – TechTalks

https://bdtechtalks.com/2019/04/02/ai-nlp-paraphrasing-adversarial-attacks/

Advances in deep learning have enabled AI to perform text-related tasks that previously could only be performed by human operators. Several companies have emerged that rely on AI algorithms to process textual content and make critical decisions.

The neural networks that make up deep learning improve accuracy by learning from thousands or millions of examples. This is a departure from classical artificial intelligence development, where programmers defined behavior in code. The approach of learning from a huge data set is suitable for AI to solve 'complex tasks with vague rules' such as image analysis, speech recognition, and natural language processing.

However, because humans have little direct control over the behavior of neural networks, their inner workings are often not understood even by their developers. In addition, deep learning algorithms are very complex but ultimately statistical mechanisms, so although at first glance they seem to process information the same way humans do, the underlying process is in fact completely different.

If there is a problem with the content of the data set an AI learns from, the resulting algorithm is strongly affected. For example, it has been reported that an AI trained on photographs of child abuse, intended to automatically determine whether devices confiscated by British police contain photographs that could serve as evidence of a crime, misjudged images of deserts as nude images.

A plan to save the police from trauma with 'AI that detects child pornography' is underway - GIGAZINE

Small changes to data that have a large impact on an AI's behavior are called 'adversarial examples'. The research team points out that adversarial examples could be turned into cyber attacks on AI.

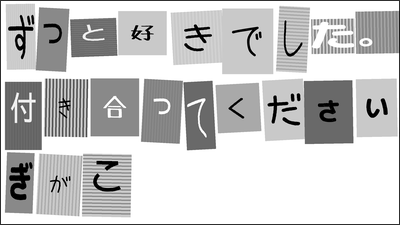

One cyber attack that uses adversarial examples is the 'paraphrase attack'. It disrupts the behavior of a natural language processing model by making changes to a text that humans do not notice even when they read it. When the research team rewrote just one sentence of a text in the data set the AI had learned from, a change was reportedly observed in the behavior of the target AI.
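To make the idea concrete, here is a minimal toy sketch in Python. It is not the method from the paper: the word weights, the synonym table, and the function names `score`, `classify`, and `paraphrase_attack` are all hypothetical, chosen only to show how swapping one word for a synonym that a human reads the same way can flip a simple model's decision.

```python
# Toy bag-of-words sentiment scorer. All weights and synonyms here are
# made up for illustration; words the "model" has never seen score 0.
WEIGHTS = {"excellent": 2.0, "slow": -0.5, "sluggish": -1.0}

# Hypothetical table of replacements a human reader would barely notice.
SYNONYMS = {"excellent": ["superb"], "film": ["movie"], "slow": ["sluggish"]}


def score(words):
    """Sum the per-word weights; unknown words contribute nothing."""
    return sum(WEIGHTS.get(w, 0.0) for w in words)


def classify(words):
    return "positive" if score(words) > 0 else "negative"


def paraphrase_attack(words, max_swaps=2):
    """Greedily swap words for synonyms until the predicted label flips."""
    words = list(words)
    original_label = classify(words)
    # Push the score down to flip "positive", up to flip "negative".
    direction = -1.0 if original_label == "positive" else 1.0
    swaps = []
    for _ in range(max_swaps):
        if classify(words) != original_label:
            break
        best = None  # (gain, index, replacement)
        for i, word in enumerate(words):
            for synonym in SYNONYMS.get(word, []):
                candidate = words[:i] + [synonym] + words[i + 1:]
                gain = direction * (score(candidate) - score(words))
                if gain > 0 and (best is None or gain > best[0]):
                    best = (gain, i, synonym)
        if best is None:
            break  # no swap moves the score in the wanted direction
        _, i, synonym = best
        swaps.append((words[i], synonym))
        words[i] = synonym
    return words, swaps


original = "the excellent film has a slow start".split()
adversarial, swaps = paraphrase_attack(original)
print(classify(original), "->", classify(adversarial), "via", swaps)
```

In this toy setup a single swap is enough because the scorer has never seen the replacement word. Real models are far more complex, but the weakness being exploited is of the same kind.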

The problem with the paraphrase attack is that it is difficult for humans to detect. Humans are less sensitive to such changes than AI because they compensate for small typos and omissions in their heads as they read. When the research team showed people the text before and after modification, it was almost impossible for them to determine which part was different. The research team said, 'Paraphrase attacks are difficult to detect because humans deal with typos and omissions in sentences every day.'

Researchers say one way to protect AI models from adversarial examples is to retrain them with the correct data set and labels. They also found that training on adversarial examples and then retraining on the correct data set makes the model not only more robust against paraphrase attacks, but also more accurate and versatile.
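The retraining idea can be sketched with a toy example in Python. This is a simplified illustration under stated assumptions, not the researchers' procedure: the data, the synonym table, and the helpers `train`, `classify`, `paraphrase`, and `accuracy` are all hypothetical, and the sketch retrains once on the clean examples plus their correctly labeled paraphrases rather than in two separate stages.

```python
from collections import Counter

# Hypothetical synonym table used to generate paraphrased examples.
SYNONYMS = {"excellent": "superb", "slow": "sluggish", "dull": "tedious"}


def train(examples):
    """Learn one weight per word: +1 per positive use, -1 per negative use."""
    weights = Counter()
    for text, label in examples:
        for word in text.split():
            weights[word] += 1 if label == "positive" else -1
    return weights


def classify(weights, text):
    # Counter returns 0 for words never seen during training.
    total = sum(weights[word] for word in text.split())
    return "positive" if total > 0 else "negative"


def paraphrase(text):
    """Swap every word that has a listed synonym; keep the rest."""
    return " ".join(SYNONYMS.get(word, word) for word in text.split())


def accuracy(weights, examples):
    correct = sum(classify(weights, text) == label for text, label in examples)
    return correct / len(examples)


# Made-up clean training data with correct labels.
clean = [
    ("excellent film", "positive"),
    ("excellent cast", "positive"),
    ("slow film", "negative"),
    ("dull cast", "negative"),
]
# Paraphrased copies keep the correct label of the text they came from.
adversarial = [(paraphrase(text), label) for text, label in clean]

baseline = train(clean)
robust = train(clean + adversarial)

print("baseline on paraphrases:", accuracy(baseline, adversarial))
print("robust on paraphrases:  ", accuracy(robust, adversarial))
print("robust on clean data:   ", accuracy(robust, clean))
```

The baseline model misjudges the paraphrased positive examples because it has never seen the replacement word, while the model retrained with paraphrases handles both sets. A toy this small cannot show the broader accuracy gains the researchers describe.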

The research team said, 'Just as spam email became widespread in the early 2000s, the same kind of thing could happen today, and that is a concern. These attacks could also be used to make AI act against its principles and to inflame entire communities for political reasons. Issues like these will drive new security demands as enterprises focus on automation and scalability with AI. I worry that we will not spend as much on this issue as we do on traditional security.'
