Google's natural language processing model "BERT" is said to absorb prejudice from the Internet

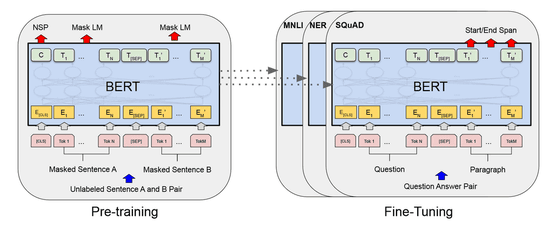

Google announced a natural language processing model called "Bidirectional Encoder Representations from Transformers (BERT)" in October 2018. BERT is also used in Google's search engine, and it learns from digitized text such as Wikipedia entries, news articles, and old books. However, it has been pointed out that by learning this way, BERT also picks up the prejudice and discrimination latent in those sources.

We Teach AI Systems Everything, Including Our Biases - The New York Times

https://www.nytimes.com/2019/11/11/technology/artificial-intelligence-bias.html

Traditional natural language processing models built on neural networks each support only a specific task, such as sentence interpretation or sentiment analysis. The growth of the Internet has made it easy to obtain large amounts of text data, but preparing a labeled data set for a specific task can still be labor intensive and expensive.

In contrast, BERT can be pre-trained on large amounts of unlabeled text from the Internet. In addition, transfer learning can be used to build new models from an already trained model. The advantage of BERT is that it can be adapted to a variety of tasks with less data and fewer models.
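As a rough illustration of why unlabeled text is enough for pre-training, the sketch below hides some words in a sentence and keeps the hidden words as the answers the model must predict, so the text supplies its own labels. This is a simplified sketch, not Google's implementation: the function name `make_masked_example`, whitespace tokenization, and the `seed` parameter are assumptions made for the example, and BERT itself works on subword tokens with a more involved replacement scheme.

```python
import random

MASK = "[MASK]"


def make_masked_example(sentence, mask_rate=0.15, seed=0):
    """Turn one unlabeled sentence into a (masked tokens, targets) pair.

    Hypothetical helper for illustration only: it splits on whitespace
    and always replaces the chosen words with [MASK].
    """
    rng = random.Random(seed)
    tokens = sentence.split()
    # Hide roughly mask_rate of the tokens, but always at least one.
    n_mask = max(1, round(len(tokens) * mask_rate))
    positions = sorted(rng.sample(range(len(tokens)), n_mask))

    masked = list(tokens)
    targets = {}
    for pos in positions:
        targets[pos] = tokens[pos]  # the word the model has to recover
        masked[pos] = MASK
    return masked, targets


if __name__ == "__main__":
    masked, targets = make_masked_example(
        "BERT learns from large amounts of unlabeled text"
    )
    print(" ".join(masked))
    print(targets)
```

No human labeling is involved here, which is also why whatever associations appear in the source text are passed on to the model.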

However, it has been pointed out that when AI is pre-trained on text data from the Internet, it learns gender bias along with everything else. When computer scientist Robert Munro actually entered 100 common words such as "money," "horse," "house," and "behavior" into BERT, 99 were associated with men, and only the word "mama" was associated with women.
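A probe of this kind can be sketched as a simple tally. The article does not describe how the association was measured, so the sketch assumes a scoring function that returns how strongly a model links a word to "his" versus "her"; `tally_associations`, `toy_score`, and the numbers in `TOY_SCORES` are hypothetical placeholders for illustration, not Munro's code or measurements from BERT.

```python
def tally_associations(words, score):
    """Count how many words score higher with "his" than with "her".

    `score(word, pronoun)` is an assumed callable; in a real probe it
    would query a language model rather than a lookup table.
    """
    counts = {"his": 0, "her": 0}
    for word in words:
        his = score(word, "his")
        her = score(word, "her")
        counts["his" if his >= her else "her"] += 1
    return counts


# Made-up placeholder scores so the sketch runs without a model.
# They are NOT measurements from BERT.
TOY_SCORES = {
    "money": {"his": 0.7, "her": 0.3},
    "horse": {"his": 0.6, "her": 0.4},
    "house": {"his": 0.6, "her": 0.4},
    "mama": {"his": 0.2, "her": 0.8},
}


def toy_score(word, pronoun):
    return TOY_SCORES[word][pronoun]


if __name__ == "__main__":
    print(tally_associations(TOY_SCORES, toy_score))
```

Swapping `toy_score` for a function backed by an actual model would turn the same loop into a real measurement.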

"This prejudice is the same inequality we have always seen. With something like BERT, this prejudice could persist in society," Munro commented.

In addition, Munro reports on his blog that major AI systems running on the cloud computing services of Google and AWS correctly recognized the pronoun "his" but were unable to recognize "hers."

In response to an inquiry from The New York Times, Google commented, "We are aware of this issue and are taking the necessary steps to address and resolve it." Amazon also said, "Eliminating prejudice from the system is one of the principles of AI and a top priority. It requires rigorous benchmarking, testing, investment, highly accurate technology and diverse training data."

However, Emily Bender, a professor of computational linguistics at the University of Washington, said, "Because the most advanced natural language processing models such as BERT are too complex, we cannot predict what they will eventually do. Even the developers who build systems such as BERT don't understand how they work." She argues that whether the goal is to keep AI from learning prejudice or to remove prejudice it has already learned, the task is a difficult one.

by See-ming Lee

"It's very important to examine the behavior of new AI technologies, to check that the AI has not learned prejudice or unexpected behavior," said Sean Gourley, CEO of natural language technology startup Primer. He predicts the rise of audit firms specializing in AI, saying, "A new auditing industry on the scale of $1 billion (approximately 110 billion yen) will be born."

in AI, Software, Web Service, Posted by log1i_yk