More than 1,200 Google employees sign a protest letter calling Google's dismissal of an ethical AI team researcher 'unprecedented censorship'

by Thomas Hawk

Dr. Timnit Gebru, who was the technical leader of the ethical artificial intelligence (AI) team at Google, was told by Google to 'withdraw or resign' and, as a result, was forced to leave the company. Criticism of Google over her ouster is mounting. Google explains that its internal review of the paper was appropriate, but critics point out that a process originally intended to check for leaks of confidential information has turned into 'censorship' inside Google, and that the way research and papers are handled at Google has become distorted.

Timnit Gebru's actual paper may explain why Google ejected her --The Verge

https://www.theverge.com/2020/12/5/22155985/paper-timnit-gebru-fired-google-large-language-models-search-ai

Why Timnit Gebru's controversial Google exit is setting off a firestorm in tech --Vox

https://www.vox.com/recode/2020/12/4/22153786/google-timnit-gebru-ethical-ai-jeff-dean-controversy-fired

Google Employees Call Black Scientist's Ouster 'Research Censorship' : NPR

https://www.npr.org/2020/12/03/942417780/google-employees-say-scientists-ouster-was-unprecedented-research-censorship

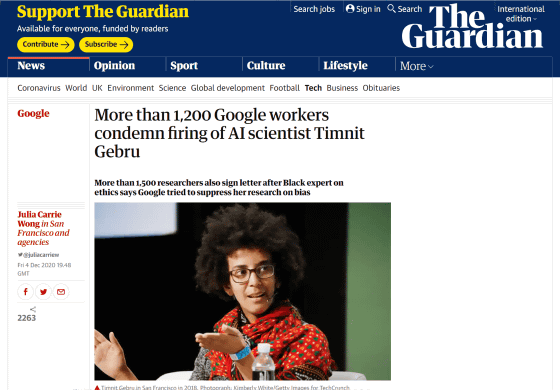

More than 1,200 Google workers condemn firing of AI scientist Timnit Gebru | Google | The Guardian

https://www.theguardian.com/technology/2020/dec/04/timnit-gebru-google-ai-fired-diversity-ethics

On Thursday, December 3, 2020, it came to light that Dr. Timnit Gebru, who was the technical leader of the ethical artificial intelligence (AI) team at Google, had been fired by Google. The cause of the dismissal was a paper by Dr. Gebru that pointed out ethical issues with the AI language model used by Google.

Google fires technical leader of ethical AI team - GIGAZINE

Dr. Gebru is an accomplished researcher who has published several papers on AI used for face recognition. Face recognition algorithms have the problem that their recognition accuracy is low for Black people, as in the past case where Google Photos labeled Black people as gorillas. As a result, moves to ban the use of facial recognition software in criminal investigations are under way, IBM has announced its withdrawal from the facial recognition market, and Amazon has taken steps to stop police use of the technology. The papers published by Dr. Gebru and her colleagues have had a great influence on these decisions by technology companies.

This time, however, Google and Dr. Gebru came into conflict over a new paper she had submitted. Google requires papers to pass an internal review before publication, and as a result of this review, Dr. Gebru was told to withdraw the paper or leave Google. Dr. Gebru asked to discuss the matter with Google before withdrawing the paper, but Google refused, so she sent an email saying she would resign, and immediately afterwards Google reportedly replied that it 'accepts the resignation'.

Dr. Gebru's new paper concerns BERT, Google's natural language processing model. Google's AI team created BERT in 2018 and successfully incorporated it into its search engine. Google's search engine generated $26.3 billion in revenue in the third quarter of 2020 alone. Dr. Gebru's paper, titled 'On the Dangers of Stochastic Parrots: Can Language Models Be Too Big?', raises concerns about BERT.

Jeff Dean, the head of Google AI, said that 'the paper was submitted one day before the deadline', that 'it is a normal process for Google's papers to be reviewed for content issues', and that 'Dr. Gebru's paper ignored too much relevant research'. He argued that there has been 'a lot of misunderstanding on social media' about the case and that Google's response was correct.

About Google's approach to research publication

https://docs.google.com/document/d/1f2kYWDXwhzYnq8ebVtuk9CqQqz7ScqxhSIxeYGrWjK0/

However, many people argue that Dean's claim is questionable in the first place. On the online forum 'Hacker News', one commenter wrote, 'I was on a different team, but when I worked on Google's AI team, the purpose of the internal review was to check whether Google's intellectual property (datasets and details of Google's infrastructure) was being leaked to the outside, and nothing more than that.' The comment contradicts Dean's explanation and points out that Google is now 'censoring' papers beyond the review's original purpose.

About Google's approach to research publication – Jeff Dean | Hacker News

https://news.ycombinator.com/item?id=25307167

Emily Bender, a professor of computational linguistics at the University of Washington, said, 'There are six co-authors, and this kind of paper, which lists 128 references, cannot be done by individual or paired authors alone.' She rejected Dean's view that the paper 'ignored too much relevant research.'

In an interview with Wired, Dr. Gebru said she felt she had been censored, arguing that publishing only papers that keep the company happy and never point out problems is the exact opposite of what it means to be a researcher.

Women make up 24.7% of Google's technical workforce, and only 2.4% are Black. Because Dr. Gebru is a Black woman, a letter of protest was sent to Google stating, 'This is retaliation for what Dr. Gebru did, and it means that the work of Google's ethics and AI team, especially that of people of color, is at risk.' The letter was signed by more than 1,200 Google employees and more than 1,500 academic supporters. 'In Google's corporate research environment, research integrity can no longer be taken for granted. Dr. Gebru's dismissal overturned the understanding of what papers Google accepts,' the letter said.

Google and Dean have declined to comment on this matter beyond the statement.

in Web Service, Science, Posted by darkhorse_log