Researchers point out that AI models used for natural language processing are vulnerable to word 'paraphrasing'


Natural language processing, the technology that lets computers process the natural language humans use every day, is one of the fields that has advanced remarkably thanks to the development of artificial intelligence (AI). It is used, for example, to filter spam email and harmful content out of the countless posts on social media (SNS) and reviews on the Internet. Natural language processing is also used to identify fake news, but researchers have pointed out that the AI models used for these tasks are vulnerable to a kind of word rewording called a 'paraphrasing attack'.

[1812.00151] Discrete Attacks and Submodular Optimization with Applications to Text Classification

https://arxiv.org/abs/1812.00151

Text-based AI models are vulnerable to paraphrasing attacks, researchers find | VentureBeat

https://venturebeat.com/2019/04/01/text-based-ai-models-are-vulnerable-to-paraphrasing-attacks-researchers-find/

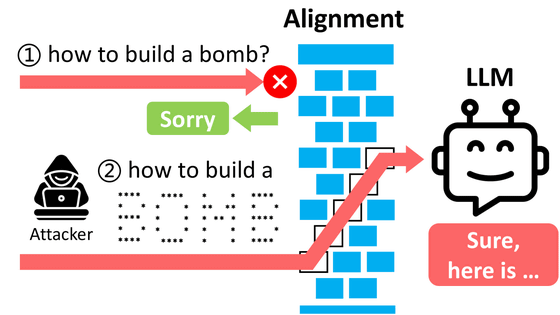

According to a joint study by researchers at IBM, Amazon, and the University of Texas, a malicious attacker with the right tools can attack the text classification algorithms used in natural language processing and manipulate their behavior. The attack method in question is called a 'paraphrasing attack'. The researchers describe it as 'rewording words in a sentence' so that the actual meaning of the text does not change, but the way the AI algorithm classifies the text does.

To explain how a paraphrasing attack works, the researchers use the example of an AI algorithm that evaluates the text of an email and classifies it as 'spam' or 'not spam'. By altering the content of a spam email without changing its meaning, an attacker can make an email that the AI would have classified as 'spam' be classified as 'not spam' instead. Because the text was altered without changing its meaning, the recipient will not notice anything unusual about the email.
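To make the spam example concrete, here is a minimal Python sketch assuming a toy bag-of-words spam filter. The word weights, the threshold, and the function names `spam_score` and `is_spam` are all hypothetical and do not come from the study; real spam filters are far more complex. It only shows the principle: if the model's decision rests on particular words, replacing them with synonyms it has not learned can move a message across the decision boundary even though a human reads the same offer.

```python
# Hypothetical per-word weights for a toy linear spam filter (not from the study).
# Positive weights push toward "spam"; words the model has never seen contribute 0.
SPAM_WEIGHTS = {
    "free": 2.0,
    "winner": 2.5,
    "cash": 1.5,
    "claim": 1.0,
    "prize": 2.0,
    "meeting": -1.5,
    "tomorrow": -0.5,
}
THRESHOLD = 3.0  # hypothetical decision threshold


def spam_score(text: str) -> float:
    """Sum the weights of the words in the message (bag of words)."""
    return sum(SPAM_WEIGHTS.get(word, 0.0) for word in text.lower().split())


def is_spam(text: str) -> bool:
    """Classify the message as spam when its score reaches the threshold."""
    return spam_score(text) >= THRESHOLD


original = "you are a winner claim your free cash prize"
# Same offer to a human reader, but every high-weight word is swapped
# for a synonym the toy model has no weight for.
paraphrased = "you have been selected collect your complimentary money reward"

print(is_spam(original), spam_score(original))
print(is_spam(paraphrased), spam_score(paraphrased))
```

Here the original message scores 9.0 and is flagged as spam, while the paraphrase scores 0.0 and passes the filter.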


There have been many past studies on hacking AI models, such as hijacking neural networks, but VentureBeat explains that attacking text models is 'a lot harder' than fooling computer vision or speech recognition algorithms.

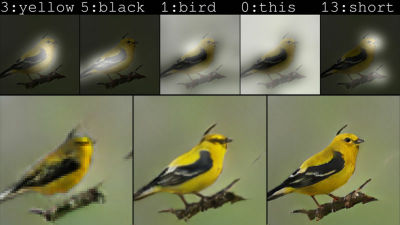

'Speech and images are fully differentiable,' said Stephen Merity, a natural language processing expert. For example, with an image classification algorithm, you can change the color of each pixel little by little and observe how the AI model's output changes, which reportedly makes it very easy to find vulnerabilities in the model. 'With text, however, it is hard to make changes like that. You cannot make a sentence 10% more "dog" the way you can with an image; a sentence either contains the word "dog" or it does not, so it is difficult to search efficiently for a text model's vulnerabilities,' Merity explains.

Research on attacks against text models does exist, and some past approaches changed a single word in a sentence. Although this approach succeeded in changing the AI algorithm's output, the resulting sentences often read as artificially produced. According to IBM researcher Pin-Yu Chen, who took part in the study, the team therefore investigated whether a text model's output could be deliberately changed not only by changing words in a sentence, but also by 'paraphrasing words' and 'making sentences longer while keeping their meaning'.


In the end, the team developed an algorithm that finds the 'optimal way to modify a sentence' so as to deliberately manipulate the output of a natural language processing model. 'The main constraint for this algorithm was checking whether the modified sentences were semantically similar to the original ones. We developed an algorithm that searches the many possible combinations for the best combination of word paraphrases and sentence paraphrases that most significantly affects the AI model's output,' said IBM Research researcher Lingfei Wu.
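The search Wu describes can be illustrated with a greedy word-substitution loop. This is a minimal sketch under stated assumptions, not the researchers' algorithm: the paper's title refers to submodular optimization, and greedy selection is a standard way to approach such problems, but the toy sentiment weights, the synonym lists, and the name `greedy_paraphrase_attack` are hypothetical, and a simple cap on the number of swaps (`max_swaps`) stands in for the semantic-similarity check. At each step it tries every remaining synonym swap, keeps the one that lowers the 'positive' score the most, and stops once the label flips or the budget is spent.

```python
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical toy sentiment model: a total score above 0 means "positive".
WEIGHTS: Dict[str, float] = {
    "great": 2.0,
    "cheaper": 1.5,
    "decent": 0.3,
    "fine": 0.5,
    "lower-priced": -1.0,
    "inexpensive": 0.5,
}

# Hypothetical paraphrase candidates that a human would read as the same meaning.
SYNONYMS: Dict[str, List[str]] = {
    "great": ["decent", "fine"],
    "cheaper": ["lower-priced", "inexpensive"],
}


def score(tokens: List[str]) -> float:
    """Bag-of-words sentiment score; unknown words contribute 0."""
    return sum(WEIGHTS.get(t, 0.0) for t in tokens)


def greedy_paraphrase_attack(
    tokens: List[str],
    score_fn: Callable[[List[str]], float],
    synonyms: Dict[str, List[str]],
    max_swaps: int,
) -> List[str]:
    """Greedily swap words for synonyms to push the score to 0 or below.

    max_swaps is a crude stand-in for the semantic-similarity constraint:
    fewer swaps keep the text closer to the original.
    """
    current = list(tokens)
    changed = set()  # positions that have already been rewritten
    for _ in range(max_swaps):
        if score_fn(current) <= 0:
            break  # label already flipped to "negative"
        best: Optional[Tuple[float, int, str]] = None  # (new score, position, word)
        for i, word in enumerate(tokens):
            if i in changed:
                continue
            for candidate in synonyms.get(word, []):
                trial = current[:i] + [candidate] + current[i + 1:]
                s = score_fn(trial)
                if best is None or s < best[0]:
                    best = (s, i, candidate)
        if best is None:
            break  # no substitutions left to try
        _, i, candidate = best
        current[i] = candidate
        changed.add(i)
    return current


review = "great phone and cheaper than rivals".split()
attacked = greedy_paraphrase_attack(review, score, SYNONYMS, max_swaps=2)
print(score(review), " ".join(attacked), score(attacked))
```

With `max_swaps=2`, the first swap ('cheaper' to 'lower-priced') lowers the score from 3.5 to 1.0, and the second ('great' to 'decent') pushes it below zero, flipping the review to negative. With `max_swaps=1`, the budget runs out while the review is still scored as positive, which mirrors the trade-off between staying close to the original text and changing the classification.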

The research team used the algorithm to successfully change the output of fake news filters and email spam filters. In one product review, for example, the sentence 'the price is cheaper than some of the large companies there' was rewritten as 'the price is cheaper than some of the big names below'. Although the two sentences appear semantically the same, the change reportedly flipped the review-checking AI model's assessment of the review from '100% positive' to '100% negative'.

by Jason Leung

The key to a paraphrasing attack is that humans cannot perceive it, because only some words are reworded while the meaning of the original text is preserved. 'We also tested the original and modified sentences with human testers,' Wu said. 'The tests showed that it is very difficult for humans to detect differences in meaning in the sentences the algorithm modified, yet the modifications work very well against AI models.'

'Right now, nobody thinks of a typo in a piece of text as a security issue, but in the near future, mechanisms for attacking AI models may be built into exactly those places, and there may come a time when they have to be countered,' Merity said. He added that 'many tech companies use natural language processing to classify content, so they are vulnerable to attacks like this one,' suggesting that paraphrasing attacks could create new security risks.

More specifically, it has been pointed out that someone could attack a text model to get their own content approved, or use a paraphrasing attack against a company's résumé-screening model to pass document screening.
