How to avoid 'unnecessary throttling' when setting CPU limits for Kubernetes?

Kubernetes: Make your services faster by removing CPU limits · Eric Khun

https://erickhun.com/posts/kubernetes-faster-services-no-cpu-limits/

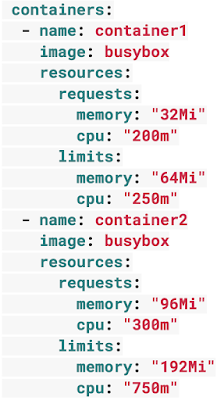

When managing containers with Kubernetes, it is recommended to specify 'requests' and 'limits' in the pod configuration file so that core Kubernetes processes such as the kubelet do not become unresponsive due to a lack of node resources. 'requests' sets the minimum resources reserved for a container, and 'limits' sets the maximum resources it may use. CPU values can be specified in millicores (1000 millicores = 1 core).
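As a rough illustration of the units involved, the sketch below converts a Kubernetes CPU quantity such as `800m` or `0.5` into millicores, and then into the CFS quota that the kernel enforces in each scheduling period. It assumes the default 100 ms CFS period and the proportional rule quota = millicores × period / 1000; the function names are hypothetical, and the real kubelet handles more quantity formats and edge cases than this simplified parser.

```python
# Minimal sketch: Kubernetes CPU quantity -> millicores -> CFS quota.
# Assumptions: default CFS period of 100 ms; only plain ("1", "0.5")
# and millicore ("800m") forms are handled. Function names are hypothetical.

CFS_PERIOD_US = 100_000  # default CFS period: 100 ms, in microseconds


def cpu_to_millicores(quantity: str) -> int:
    """Convert a CPU quantity such as '800m', '1' or '0.5' to millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])  # already expressed in millicores
    return round(float(quantity) * 1000)  # cores -> millicores


def cfs_quota_us(limit: str, period_us: int = CFS_PERIOD_US) -> int:
    """CPU time (in microseconds) the container may use in each CFS period."""
    return cpu_to_millicores(limit) * period_us // 1000


if __name__ == "__main__":
    for q in ("800m", "0.5", "2"):
        print(q, cpu_to_millicores(q), cfs_quota_us(q))
```

Under these assumptions, a limit of `800m` means 80 ms of CPU time per 100 ms period, which a multi-threaded container can use up in well under 100 ms of wall-clock time.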

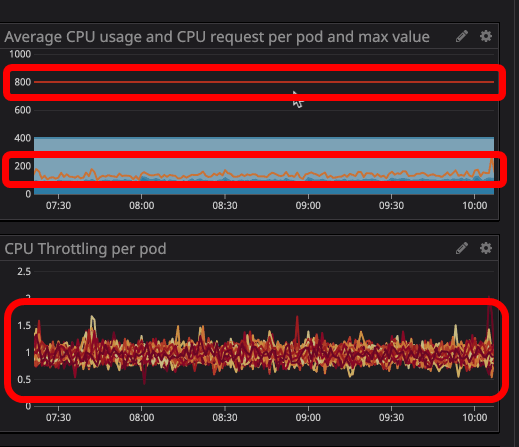

To keep a container's CPU usage from exceeding its limit, Kubernetes relies on the Linux kernel's CFS (Completely Fair Scheduler) quota mechanism, which restricts how much CPU time the container may use in each scheduling period. Holding a container back in this way is called throttling. However, Buffer, where Eric Khun works, found that its containers were being throttled even when their CPU usage had not reached the limit. The graph shows a container using only about 200 millicores, yet throttling occurs in the pod even though the 800-millicore limit has not been reached. The cause was a bug in the Linux kernel, and an issue about it was also filed in the Kubernetes repository on GitHub.
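The toy model below shows how CFS quota can throttle a container whose average usage is well below its limit: if a burst of work (for example, several threads running at once) needs more CPU time than the quota allows in one period, the remainder is delayed to later periods. It is a simplified sketch under stated assumptions (fixed 100 ms periods, unfinished work carried over rather than dropped, hypothetical function names) and does not model the kernel bug itself, which throttled containers beyond what this quota arithmetic alone would predict.

```python
# Toy model of CFS bandwidth control (not the real kernel algorithm).
# Assumptions: fixed 100 ms periods; demand that cannot run in a period
# is carried over (delayed) to the next one. Names are hypothetical.

PERIOD_US = 100_000


def simulate_cfs(demand_us, quota_us):
    """Return (CPU time used per period, number of throttled periods)."""
    backlog = 0
    used = []
    throttled_periods = 0
    for demand in demand_us:
        backlog += demand
        run = min(backlog, quota_us)  # cannot exceed the quota this period
        if backlog > quota_us:
            throttled_periods += 1  # work left waiting for the next period
        backlog -= run
        used.append(run)
    return used, throttled_periods


def average_millicores(used, period_us=PERIOD_US):
    """Average CPU usage over the whole window, in millicores."""
    return sum(used) * 1000 // (len(used) * period_us)


if __name__ == "__main__":
    # 800m limit -> 80 ms of CPU per 100 ms period.
    # One burst needing 200 ms of CPU (e.g. 4 threads x 50 ms), then 9 idle periods.
    demand = [200_000] + [0] * 9
    used, throttled = simulate_cfs(demand, quota_us=80_000)
    print(used)                      # CPU time granted in each period
    print(throttled)                 # periods in which work had to wait
    print(average_millicores(used))  # average usage in millicores
```

In this run the average usage works out to 200 millicores against an 800-millicore limit, yet two periods are throttled and the tail of the burst is only served two periods later.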

Unnecessary throttling slows down service responses. After repeated investigation, Buffer decided to remove CPU limits. However, simply removing CPU limits is undesirable from a stability standpoint. Buffer had apparently tried removing CPU limits in the past, but the containers then consumed excessive resources: the kubelet became unresponsive, the node's status changed to NotReady, and its pods were rescheduled onto another node, where the same problem occurred again, creating a vicious circle.

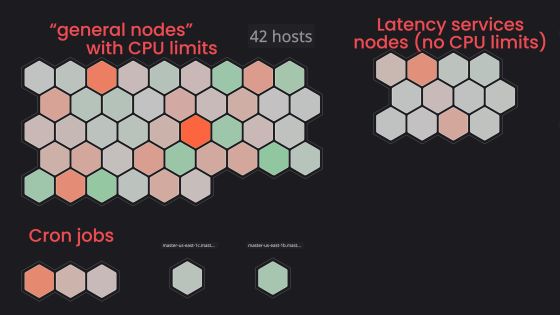

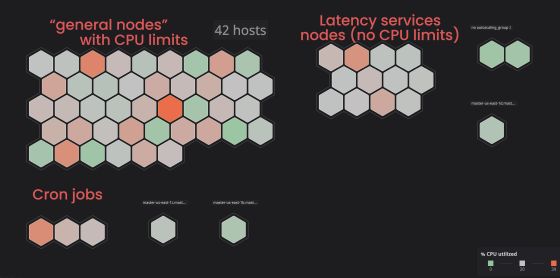

Khun explains that Buffer removed CPU limits only for the pods of services where response speed is the priority, and ran those pods on specific nodes. Specifically, Buffer used taints so that pods without CPU limits are kept apart from the node group that runs pods with CPU limits.
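The scheduling rule behind this kind of isolation can be sketched as a simplified version of Kubernetes taint/toleration matching: a pod may be placed on a node only if it tolerates every `NoSchedule` or `NoExecute` taint on that node. The taint `dedicated=no-cpu-limits:NoSchedule` is a hypothetical example, not Buffer's actual configuration, and the sketch ignores details such as `tolerationSeconds` and soft `PreferNoSchedule` taints. Note that a taint only keeps other pods off the tainted nodes; keeping the no-limit pods on those nodes additionally needs something like a `nodeSelector` or node affinity.

```python
# Simplified Kubernetes taint/toleration matching (sketch, not the scheduler's code).
# The taint key/value used below ("dedicated", "no-cpu-limits") are hypothetical.

HARD_EFFECTS = ("NoSchedule", "NoExecute")  # taint effects that block scheduling


def tolerates(toleration: dict, taint: dict) -> bool:
    """Does a single toleration match a single taint?"""
    # An empty effect in the toleration matches every effect.
    if toleration.get("effect") and toleration["effect"] != taint["effect"]:
        return False
    if toleration.get("operator", "Equal") == "Exists":
        # Exists ignores the value; an empty key matches every taint key.
        return not toleration.get("key") or toleration["key"] == taint["key"]
    # Equal (the default operator) requires both key and value to match.
    return (toleration.get("key") == taint["key"]
            and toleration.get("value", "") == taint.get("value", ""))


def can_schedule(pod_tolerations: list, node_taints: list) -> bool:
    """True if every hard taint on the node is tolerated by the pod."""
    return all(
        any(tolerates(t, taint) for t in pod_tolerations)
        for taint in node_taints
        if taint["effect"] in HARD_EFFECTS
    )


if __name__ == "__main__":
    dedicated_node = [{"key": "dedicated", "value": "no-cpu-limits", "effect": "NoSchedule"}]
    no_limit_pod = [{"key": "dedicated", "operator": "Equal",
                     "value": "no-cpu-limits", "effect": "NoSchedule"}]
    limited_pod = []  # no tolerations at all
    print(can_schedule(no_limit_pod, dedicated_node))  # True
    print(can_schedule(limited_pod, dedicated_node))   # False
```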

This keeps the service as a whole stable, but excessive resource consumption can still occur on the nodes that run pods without CPU limits. To address this, Khun sets each pod's CPU request to the container's expected peak CPU usage plus a 20% margin, so that the node keeps headroom beyond the resources allocated to its containers. This prevents the containers on a node from running out of resources, but, as Khun explains, the drawback is lower 'container density', that is, fewer containers can run on a single node.
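The density trade-off can be made concrete with simple arithmetic: when the request is the expected peak plus 20%, fewer pods fit on a node. The peak of 500 millicores and the node's 4000 allocatable millicores below are invented example values, and the calculation considers CPU requests only (the scheduler also checks memory and other resources).

```python
# Sketch: CPU request = expected peak + 20% margin, and its effect on pod density.
# The peak usage and node capacity used below are hypothetical example values.


def request_with_margin(peak_millicores: int, margin_percent: int = 20) -> int:
    """Expected peak CPU plus a safety margin, rounded up to whole millicores."""
    return -(-peak_millicores * (100 + margin_percent) // 100)  # ceiling division


def pods_per_node(allocatable_millicores: int, request_millicores: int) -> int:
    """How many identical pods fit on a node, judged by CPU requests alone."""
    return allocatable_millicores // request_millicores


if __name__ == "__main__":
    peak = 500          # hypothetical expected peak usage of one container
    allocatable = 4000  # hypothetical allocatable CPU on one node
    with_margin = request_with_margin(peak)
    print(with_margin)                              # 600
    print(pods_per_node(allocatable, peak))         # 8 pods if requests equal the peak
    print(pods_per_node(allocatable, with_margin))  # 6 pods with the 20% margin
```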

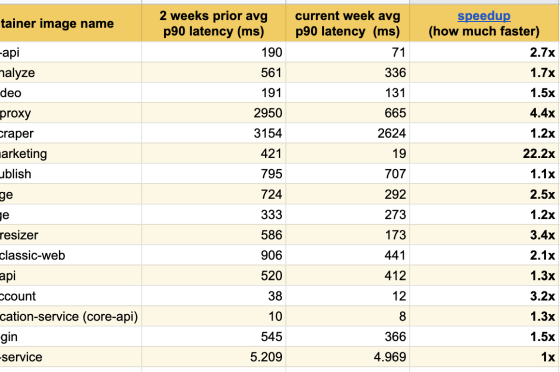

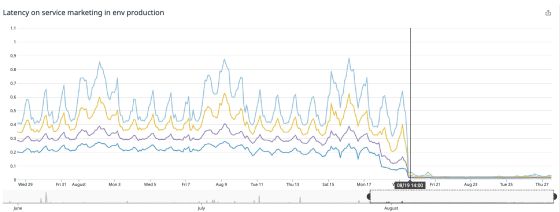

Buffer compared service response times before and after removing CPU limits. The first column shows the response time before the limits were removed, the second column the response time after removal, and the third column how much the response time improved. The results show an improvement after the limits were removed.

The improvement was especially large for Buffer.com, Buffer's main page, where responses became 22 times faster.

The Linux kernel bug that caused the unnecessary throttling has been fixed in recent versions of Debian, Ubuntu, and EKS. GKE also appears to have been fixed, but unnecessary throttling is still being reported there.
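To check whether a container on a given node is still being throttled, the kernel's CFS counters can be read from the container's cgroup `cpu.stat` file, which reports `nr_periods` (enforcement periods that have elapsed) and `nr_throttled` (periods in which the group was throttled). The sketch below parses that text and computes the fraction of throttled periods; the sample values are made up, and the file's location depends on the node's cgroup version and layout, so no path is assumed.

```python
# Sketch: compute the share of throttled CFS periods from cgroup cpu.stat text.
# The sample text below is invented; on a real node, read the container's
# cpu.stat file (its path depends on the cgroup version and layout).


def parse_cpu_stat(text: str) -> dict:
    """Parse 'key value' lines from cpu.stat into a dict of integers."""
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 2:
            stats[parts[0]] = int(parts[1])
    return stats


def throttled_ratio(stats: dict) -> float:
    """Fraction of CFS periods in which the cgroup was throttled."""
    periods = stats.get("nr_periods", 0)
    return stats.get("nr_throttled", 0) / periods if periods else 0.0


if __name__ == "__main__":
    sample = """usage_usec 123456789
user_usec 100000000
system_usec 23456789
nr_periods 5000
nr_throttled 750
throttled_usec 98765432
"""
    stats = parse_cpu_stat(sample)
    print(f"{throttled_ratio(stats):.1%} of periods throttled")  # 15.0% of periods throttled
```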

in Software, Posted by darkhorse_log