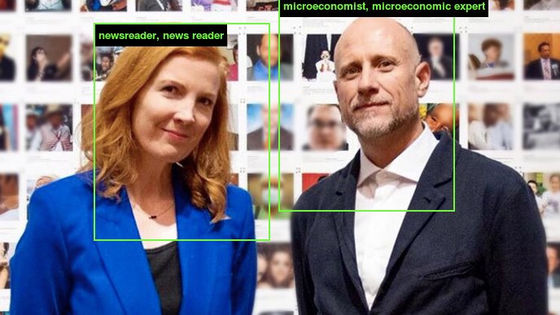

'Research that increases the resolution of mosaic images 64-fold' sparks a debate on racial discrimination, and a researcher facing criticism suspends his account

Yann LeCun, Chief AI Scientist at Facebook and winner of the 2018 Turing Award, which honors major achievements in computer science, has announced that he will stop using his Twitter account after his comments in a discussion about racism in artificial intelligence and machine learning drew heavy criticism.

Yann LeCun Quits Twitter Amid Acrimonious Exchanges on AI Bias | Synced

https://syncedreview.com/2020/06/30/yann-lecun-quits-twitter-amid-acrimonious-exchanges-on-ai-bias/

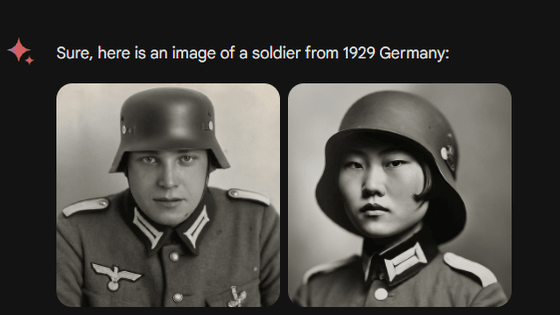

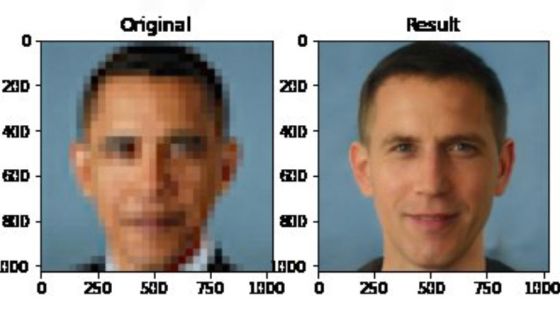

The trigger came on June 20, 2020, when the results of Duke University research on generating high-quality images with artificial intelligence algorithms were shared on Twitter.

Face Depixelizer

— Bomze (@tg_bomze) June 19, 2020

Given a low-resolution input image, model generates high-resolution images that are perceptually realistic and downscale correctly.

GitHub: https://t.co/0WBxkyWkiK

Colab: https://t.co/q9SIm4ha5p

PS Colab is based on the https://t.co/fvEvXKvWk2 pic.twitter.com/lplP75yLha

Duke University's technology converts a pixelated 16x16-pixel mosaic image into a detailed 1024x1024-pixel image in a few seconds. Examples of the conversion and an explanation of the technique are covered in the article below.

Technology developed that increases the resolution of mosaic images 64-fold to produce extremely high-quality images - GIGAZINE

Duke University's research is published on GitHub, and you can try the image conversion yourself on the following page.

Face Depixelizer Eng - Colaboratory

https://colab.research.google.com/github/tg-bomze/Face-Depixelizer/blob/master/Face_Depixelizer_Eng.ipynb

However, the Duke University technique does not 'reconstruct' the original detailed image from a mosaic image. Instead, the AI infers what the original might have looked like and 'creates a new image.' In fact, one user who ran a pixelated image of former US President Barack Obama through the system pointed out that Obama's face was not restored correctly.
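The gap between 'downscales correctly' and 'restores the original' can be shown with a small sketch. Going from 16x16 to 1024x1024 pixels is 64 times per side, so each low-resolution pixel stands in for 64 x 64 = 4,096 high-resolution pixels, and many different high-resolution images average down to exactly the same mosaic. The sketch below assumes plain average pooling as the downscaling step; the helper names `downscale` and `is_consistent` are hypothetical, and it does not reproduce the actual downscaling operator or the StyleGAN latent-space search used in the Duke research.

```python
from typing import List

Image = List[List[float]]  # square grayscale image, row-major


def downscale(img: Image, factor: int) -> Image:
    """Average-pool a square image by `factor` along each side (hypothetical helper)."""
    n = len(img)
    if n % factor != 0:
        raise ValueError("image side must be divisible by factor")
    m = n // factor
    out: Image = []
    for bi in range(m):
        row = []
        for bj in range(m):
            # Collect the factor x factor block that maps to one low-res pixel
            block = [img[bi * factor + i][bj * factor + j]
                     for i in range(factor) for j in range(factor)]
            row.append(sum(block) / len(block))
        out.append(row)
    return out


def is_consistent(candidate: Image, low_res: Image, factor: int, tol: float = 1e-9) -> bool:
    """True if `candidate` downscales to `low_res` (the 'downscale correctly' criterion)."""
    down = downscale(candidate, factor)
    return all(abs(a - b) <= tol
               for row_a, row_b in zip(down, low_res)
               for a, b in zip(row_a, row_b))


# Two clearly different 4x4 "high-resolution" images
checkerboard = [[0, 1, 0, 1],
                [1, 0, 1, 0],
                [0, 1, 0, 1],
                [1, 0, 1, 0]]
flat_gray = [[0.5] * 4 for _ in range(4)]

mosaic = downscale(checkerboard, 2)
print(mosaic)                               # [[0.5, 0.5], [0.5, 0.5]]
print(is_consistent(flat_gray, mosaic, 2))  # True
print(checkerboard == flat_gray)            # False
```

Both images pass the consistency check even though they are different, so the mosaic alone cannot say which one was the original. A system of this kind has to pick among such candidates using what it learned from its training data, which is why the output for Obama's mosaic need not look like Obama.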

pic.twitter.com/LG2cimkCFm

— Chicken3gg (@Chicken3gg) June 20, 2020

In response to the tweet above, Brad Wyble, an associate professor at Pennsylvania State University, wrote that 'this image speaks volumes about the dangers of bias in AI.'

This image speaks volumes about the dangers of bias in AI https://t.co/GsoQqSr3XP

— Brad Wyble (@bradpwyble) June 20, 2020

LeCun replied to Wyble's tweet that machine learning systems are biased when their data is biased: this face upsampling system makes everyone look white because the network was pretrained on FlickrFaceHQ, which mainly contains photos of white people, and if the exact same system were trained on a dataset from Senegal, everyone would look African.

ML systems are biased when data is biased.

— Yann LeCun (@ylecun) June 21, 2020

This face upsampling system makes everyone look white because the network was pretrained on FlickFaceHQ, which mainly contains white people pics.

Train the *exact* same system on a dataset from Senegal, and everyone will look African. https://t.co/jKbPyWYu4N

'I'm sick of this framing,' tweeted Timnit Gebru, co-founder of Black in AI, a group that supports Black people in the field of artificial intelligence, and technical co-lead of Google's Ethical AI team. 'Many people have tried to explain, many scholars. Listen to us. You can't just reduce harms caused by ML to dataset bias.'

I'm sick of this framing. Tired of it. Many people have tried to explain, many scholars. Listen to us. You can not just reduce harms caused by ML to dataset bias. https://t.co/HU0xgzg5Rt

— Timnit Gebru (@timnitGebru) June 21, 2020

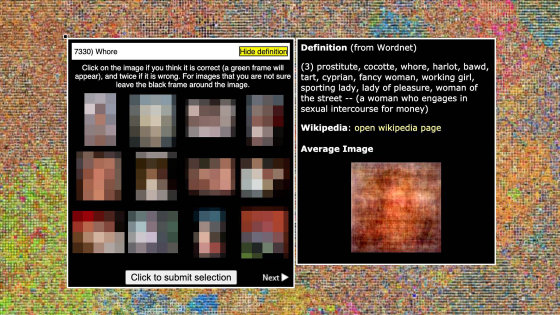

Known for her research on race and gender bias in facial recognition systems and AI algorithms, Gebru has advocated for fairness and ethics in artificial intelligence for many years and has argued for diversifying machine learning datasets. A study she conducted with the MIT Media Lab found that commercial facial recognition software is more likely to misclassify women with darker skin than men with lighter skin.

LeCun countered that his comments were about the specific dataset used in the Duke University research. He also said that it is the engineers who deploy machine learning, rather than the researchers who study it, who need to be careful about data selection.

Not so much ML researchers but ML engineers.

— Yann LeCun (@ylecun) June 21, 2020

The consequences of bias are considerably more dire in a deployed product than in an academic paper.

Gebru responded to LeCun's tweet with dismay, writing that people like him are exactly the ones her community urges others to follow, read, and learn from, and that 'this is us trying to educate people in our own community.'

This is not even people outside the community, which we say people like him should follow, read, learn from. This is us trying to educate people in our own community. Its a depressing time to be sure. Depressing.

— Timnit Gebru (@timnitGebru) June 21, 2020

The roughly week-long exchange between LeCun and Gebru drew thousands of comments and retweets, and many prominent AI researchers took issue with LeCun's explanation. 'I respectfully disagree with LeCun. As long as machine learning progress is benchmarked on racially biased data, those biases will be reflected in the systems. Advancing machine learning with biased benchmarks and then asking engineers to retrain with unbiased data is not helpful,' said Google Research scientist David Ha.

Beyond LeCun and Gebru, many AI researchers and anti-racism activists joined the discussion on machine learning and race. Alongside the substantive debate, however, there were also many tweets that simply attacked either LeCun or Gebru.

On June 25, 2020, LeCun apologized to Gebru on Twitter: 'I very much admire your work on AI ethics and fairness. I care deeply about working to make sure biases don't get amplified by AI, and I'm sorry that the way I communicated here became the story.'

@timnitGebru I very much admire your work on AI ethics and fairness. I care deeply about about working to make sure biases don't get amplified by AI and I'm sorry that the way I communicated here became the story.

— Yann LeCun (@ylecun) June 26, 2020

1/2

Then, on June 29, 2020, LeCun asked everyone to stop attacking one another on Twitter or by other means after the previous week's exchange, in particular to stop attacking Gebru and those who had criticized his posts. He wrote that 'conflicts, verbal or otherwise, are hurtful and counter-productive' and that he stands 'against all forms of discrimination,' noted that he had written a long Facebook post about his values and core beliefs, and announced that this would be his last substantial post on Twitter.

Conflicts, verbal or otherwise, are hurtful and counter-productive.

— Yann LeCun (@ylecun) June 28, 2020

I stand against all forms of discrimination.

I made a long post on FB about my values and and core beliefs: https://t.co/TMUCPpZu6m

This will be my last substantial post on Twitter.

Farewell everyone.

Following the discussion on Twitter, the Duke University research team updated its paper on June 24, 2020. The update notes that, overall, sampling from StyleGAN appears to produce white faces much more frequently than faces of people of color: of the generated images, 72.6% were of white people, 13.8% Asian, 10.1% Black, and only 3.4% Indian.

[2003.03808] PULSE: Self-Supervised Photo Upsampling via Latent Space Exploration of Generative Models

https://arxiv.org/abs/2003.03808


in Software, Web Service, Science, Smartphone, Posted by darkhorse_log