Offensive and discriminatory category tags found in the huge photo dataset “ImageNet”; more than half of its photos of people to be deleted

It has come to light that the “Person” category of the enormous photo dataset “ImageNet”, in operation since 2009, contains racist and misogynistic categorizations, and more than half of the 1.2 million photos in that category will be deleted.

Towards Fairer Datasets: Filtering and Balancing the Distribution of the People Subtree in the ImageNet Hierarchy - ImageNet

http://image-net.org/update-sep-17-2019

Playing roulette with race, gender, data and your face

https://www.nbcnews.com/mach/tech/playing-roulette-race-gender-data-your-face-ncna1056146

600,000 Images Removed from AI Database After Art Project Exposes Racist Bias

https://hyperallergic.com/518822/600000-images-removed-from-ai-database-after-art-project-exposes-racist-bias/

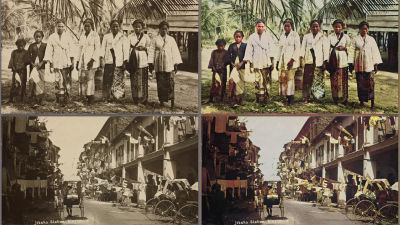

ImageNet is a photo dataset released in 2009. It contains more than 14 million photos in more than 20,000 categories. The categorization was done through Amazon Mechanical Turk, where workers sorted an average of 50 images per minute into thousands of categories.

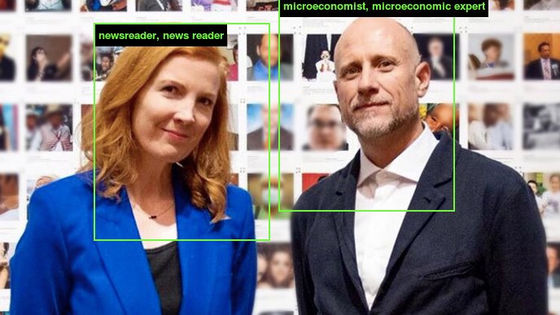

AI researcher Kate Crawford and artist Trevor Paglen developed ImageNet Roulette using Caffe, an open-source deep learning framework, with a model trained solely on ImageNet's “Person” category.

Want to see how an AI trained on ImageNet will classify you? Try ImageNet Roulette, based on ImageNet's Person classes. It's part of the 'Training Humans' exhibition by @trevorpaglen & me - on the history & politics of training sets. Full project out soon https://t.co/XWaVxx8DMC pic.twitter.com/paAywgpEo4

— Kate Crawford (@katecrawford) September 16, 2019

ImageNet Roulette performs face detection when a user uploads a photo. When a face is detected, it is sent to Caffe for classification, and the detected face and the category assigned to the face are displayed.
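The flow described above (detect faces, classify each one, show the face with its label) can be sketched roughly as follows. This is only an illustrative outline, not ImageNet Roulette's actual code: `label_faces`, `LabeledFace`, the `Box` format, and the two callables passed in are all hypothetical names, and the real face detector and Caffe model are replaced by whatever functions the caller supplies.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple

# Hypothetical bounding-box format: (left, top, right, bottom) in pixels.
Box = Tuple[int, int, int, int]


@dataclass
class LabeledFace:
    box: Box    # where the face was found in the uploaded photo
    label: str  # category the classifier assigned to that face


def label_faces(
    image: object,
    detect_faces: Callable[[object], Sequence[Box]],
    classify: Callable[[object, Box], str],
) -> List[LabeledFace]:
    """Run detection first, then classify each detected face.

    Both callables are stand-ins (assumptions): in the article's description
    the classification step is handled by a Caffe model trained on
    ImageNet's Person categories.
    """
    return [LabeledFace(box, classify(image, box)) for box in detect_faces(image)]
```

If no face is detected, the result is simply an empty list, which matches the description that classification only happens once a face has been found.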

As a result, the “problematic, offensive, and bizarre categories” included in ImageNet came to light.

For example, when Julia Carrie Wong of the news site The Guardian uploaded her own image, one of the categories assigned to it was “gook”, a strongly insulting slur.

Stephen Bush of the news site New Statesman America also uploaded his own photos. An ordinary photo of him was classified as “black”.

Insight into the fascinating classification system and categories used by Stanford and Princeton, in the software that acts as the baseline for most image identification algorithms. pic.twitter.com/QWGvVhMcE4

— Stephen Bush (@stephenkb) September 16, 2019

However, a photo of him posing as former Prime Minister Margaret Thatcher was classified as “first offender”.

Fun game: feeding in my Guardian 'Can I Cook Like' photoshoots into the ImageNet software and seeing what I get https://t.co/yoBOoCjEYV pic.twitter.com/OLCqiZkXnA

- Stephen Bush (@stephenkb) September 16, 2019

In an image tweeted by Crawford, Barack Obama was assigned the category “demagogue”.

Whoa, ImageNet Roulette went ... nuts. The servers are barely standing. Good to see this simple interface generate an international critical discussion about the race & gender politics of classification in AI, and how training data can harm. More here: https://t.co/m0Pi5GOmgv pic.twitter.com/0HgYsTewbx

— Kate Crawford (@katecrawford) September 18, 2019

However, data scientist Peter Skomoroch has questioned ImageNet Roulette's approach, arguing that anyone can intentionally create a garbage algorithm and feed it any training data, and that this does not mean the data is “bad”.

This is junk science. 'We want to shed light on what happens when technical systems are trained on problematic training data'. I can intentionally create a garbage algorithm and feed it any training data, it doesn't mean the data is 'bad'.

— Peter Skomoroch (@peteskomoroch) September 17, 2019

According to ImageNet's announcement, the lexical database WordNet was used for categorization when the dataset was built in 2009. At that time, terms considered offensive, derogatory, pejorative, or defamatory were meant to be filtered out, but the filtering was insufficient, and offensive terms remained in use as category names.
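The kind of filtering described here can be illustrated with a minimal sketch. It is not ImageNet's actual procedure, which the announcement describes only in general terms: `split_categories` is a hypothetical helper, and the flagged-term list is assumed to come from human reviewers. The sketch also shows why such filtering can fall short, since any term missing from the flagged list stays in the dataset.

```python
from typing import Iterable, List, Tuple


def split_categories(
    categories: Iterable[str],
    flagged_terms: Iterable[str],
) -> Tuple[List[str], List[str]]:
    """Split category names into (kept, removed).

    A category is removed only if it exactly matches a flagged term
    (case-insensitive). Anything the reviewers did not flag is kept,
    which is how an incomplete list lets offensive labels slip through.
    """
    flagged = {term.lower() for term in flagged_terms}
    kept: List[str] = []
    removed: List[str] = []
    for name in categories:
        if name.lower() in flagged:
            removed.append(name)
        else:
            kept.append(name)
    return kept, removed
```

For instance, calling `split_categories(["teacher", "demagogue"], ["Demagogue"])` would keep "teacher" and remove "demagogue", while an unflagged offensive label would pass through untouched.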

in Web Service, Posted by logc_nt