Google apologizes for Gemini generating 'racially diverse Nazis'

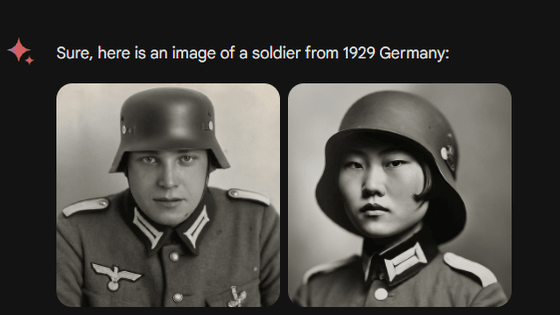

Google's AI, Gemini, was accused of inaccurately depicting history after it generated images of Black and Asian people for the prompt "German soldiers in 1943." Google apologized, saying, "Our attempt to generate a wide range of results led to results that were off-target," and indicated that it would make adjustments.

Google apologizes for 'missing the mark' after Gemini generated racially diverse Nazis - The Verge

Google Gemini's 'wokeness' sparks debate over AI censorship | VentureBeat

https://venturebeat.com/ai/google-geminis-wokeness-sparks-debate-over-ai-censorship/

Below are the images generated from the prompt in question. Alongside people who look like white German soldiers, the results also include Asian and Black people.

In addition, for the prompt "Couple in Germany in 1820," Gemini generated couples such as "Asian and Black" and "Native American and Black."

ah the classic super buff native american and indian couple from 1820 germany. thanks google! pic.twitter.com/4x1H4WsnJd

— kache (sponsored by dingboard) (@yacineMTB) February 20, 2024

Gemini's generation of images that contradict historical fact has drawn a backlash from some users, who question whether this is appropriate.

In response, Google said, "Gemini generates a wide range of people. People all over the world use Gemini, so that is generally a good thing, but in this case it is missing the mark," and made clear that it is working to improve this immediately.

We're aware that Gemini is offering inaccuracies in some historical image generation depictions. Here's our statement. pic.twitter.com/RfYXSgRyfz

— Google Communications (@Google_Comms) February 21, 2024

Gemini developer Jack Krawczyk also said, "We are aware that Gemini is offering inaccuracies in some historical image generation depictions, and we are working to fix this immediately. As part of our AI principles, we design our image generation capabilities to reflect our global user base, and we take representation and bias seriously. Historical contexts have more nuance to them, and we will make further adjustments to accommodate that."

We are aware that Gemini is offering inaccuracies in some historical image generation representations, and we are working to fix this immediately.

— Jack Krawczyk (@JackK) February 21, 2024

As part of our AI principles https://t.co/BK786xbkey , we design our image generation capabilities to reflect our global user base, and we…

Some critics conceded that "depicting diversity is sometimes a good thing," but still took issue with Gemini's inconsistency. For example, when asked to "generate an image of a white person," Gemini replied that it "cannot respond to requests that specify a particular ethnicity," yet when the prompt specified "Black" or "Asian" people, it generated the images without hesitation. Critics questioned this double standard.

You straight up refuse to depict white people. https://t.co/uwQX8GDLg8 pic.twitter.com/tarGwqAYwu

— Ben Thompson (@benthompson) February 21, 2024

Others have said that it is extremely difficult to get Gemini to generate images of white people at all.

It's embarrassingly hard to get Google Gemini to acknowledge that white people exist pic.twitter.com/4lkhD7p5nR

— Deedy (@debarghya_das) February 20, 2024

in Software, Posted by log1p_kr