AI may be able to 'create a voice for people who cannot speak'

The scientific journal Nature has published research in which AI learned brain signals together with the movements of speech organs such as the vocal cords, and synthesized speech from them without relying on the speaker's actual voice. If this technology is put to practical use, people with disabilities who cannot speak are expected to be able to communicate by voice in real time.

Brain signals translated into speech using artificial intelligence

https://www.nature.com/articles/d41586-019-01328-x

Synthetic Speech Generated from Brain Recordings | UC San Francisco

https://www.ucsf.edu/news/2019/04/414296/synthetic-speech-generated-brain-recordings

There have been many successful examples of prostheses that read signals from the brain and move like the wearer's own hand, and with the development of technologies such as 3D printers, quite practical prostheses are now being made. However, as of 2019, no technology for turning brain signals into words has been established. Patients who have lost the ability to speak through stroke, traumatic brain injury, or amyotrophic lateral sclerosis (ALS) currently communicate by using movements of the eye and facial muscles to compose text, which is then converted into synthetic speech. A prime example is Dr. Stephen Hawking, who made great strides in physics research while living with ALS.

The story of how Dr. Stephen Hawking got his lost voice back

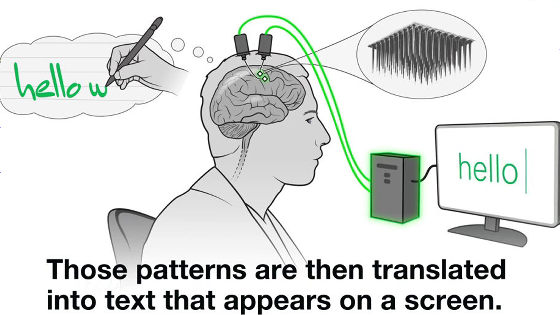

However, this method is prone to errors and input is slow: while the average person speaks 100 to 150 words per minute, this method tops out at about 10 words per minute. Researchers have therefore been pursuing a new approach that synthesizes speech directly from brain signals.

A system is born that reads brain signals and converts them into 'audible, understandable speech'

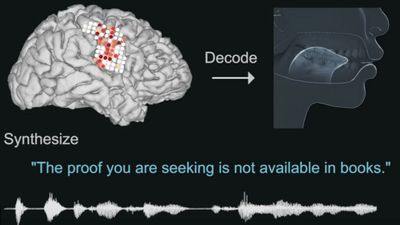

This time, a research group led by neurosurgeon Edward Chang at the University of California, San Francisco developed a technology that "synthesizes speech with greater precision than these previous studies." Rather than converting brain signals directly into speech, it creates synthetic speech by having AI learn both the brain signals and the mouth and throat movements that occur during speech.

With the help of five patients who already had electrodes implanted on the surface of their brains as part of epilepsy treatment, Chang et al. conducted an experiment measuring activity in the brain areas involved in producing words. In the experiment, subjects were asked to speak hundreds of words, and in addition to brain activity, the movements of the lips, vocal cords, and tongue were also recorded. The researchers used these data to train a program with deep learning. As a result, they were able to synthesize speech that was easier to understand than speech synthesized directly from brain activity.
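The two-step idea described above (brain signals to vocal tract movements, then movements to sound) can be illustrated with a small sketch. This is not the researchers' implementation: it swaps their deep learning models for plain least-squares regression, and the data, array sizes, and names such as `fit_linear` and `decode` are all hypothetical stand-ins chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: time steps, electrode channels,
# articulator (lip/tongue/vocal cord) features, acoustic features.
n_steps, n_channels, n_artic, n_acoustic = 500, 64, 12, 32

# Synthetic stand-in data, NOT real recordings.
true_a = rng.normal(size=(n_channels, n_artic))
true_b = rng.normal(size=(n_artic, n_acoustic))
neural = rng.normal(size=(n_steps, n_channels))
kinematics = neural @ true_a + 0.1 * rng.normal(size=(n_steps, n_artic))
acoustics = kinematics @ true_b + 0.1 * rng.normal(size=(n_steps, n_acoustic))


def fit_linear(x, y):
    """Least-squares weights mapping x to y (a stand-in for a trained network)."""
    weights, *_ = np.linalg.lstsq(x, y, rcond=None)
    return weights


train, test = slice(0, 400), slice(400, 500)

# Stage 1: brain signals -> articulator movements.
w_stage1 = fit_linear(neural[train], kinematics[train])
# Stage 2: articulator movements -> acoustic features.
w_stage2 = fit_linear(kinematics[train], acoustics[train])


def decode(neural_signals):
    """Run both stages: neural -> predicted movements -> predicted acoustics."""
    predicted_kinematics = neural_signals @ w_stage1
    return predicted_kinematics @ w_stage2


predicted = decode(neural[test])
corr = np.corrcoef(predicted.ravel(), acoustics[test].ravel())[0, 1]
print(f"held-out correlation: {corr:.3f}")
```

The point of the intermediate step is that the decoder first predicts how the mouth and throat would move, and only then predicts sound, mirroring the approach the article describes. On toy linear data like this the benefit over direct decoding would not show up; the sketch only shows the structure of the pipeline.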

In the video below, you can listen to and compare the speech actually spoken by a subject and the speech synthesized using AI.

Speech synthesis from neural decoding of spoken sentences - YouTube

When third parties listened to speech synthesized with this technology, they were able to identify the words about 70% of the time. Accuracy drops to about 43% for long sentences, but this is still extremely accurate for speech synthesized in real time.

In addition, in an experiment where the subject only moved the mouth without speaking aloud, the system still succeeded in synthesizing speech, although accuracy was considerably reduced. Chang and his colleagues are currently experimenting with denser electrodes to improve their speech synthesis algorithms. 'I hope that people with speech problems will be able to speak again using artificial vocal tracts,' said Josh Chartier, a collaborator.
